I wasn’t aware that changes had been removed before merging. Also, I was intending to not call out the exact PR/individual, but yes that was the PR.

I guess there is also «a specific named thing is a problem, a number of things is a statistical measure» effect. To get an answer when breakages to specific things are removed from a PR, it might help to make requests about the specific things before naming or not naming aggregate counts.

I think that the community ought to consider packaging and pinning as separable tasks with separate workflows.

Correct me if I’m mistaken (?) but I feel that currently there’s an (implicit?) rule/guideline that there should be one version of every package. However this seems to lead to PRs that cause a lot of contention.

For example, take my recent PR bumping pytorch-bin from 1.10.0 to 1.11.0: pytorch-bin: 1.10.0 -> 1.11.0 by rehno-lindeque · Pull Request #164712 · NixOS/nixpkgs · GitHub

I’m an infrequent contributor to nixpkgs, and to be honest when I do contribute I’m kind of slow and can’t keep up with the rapid changes:

- It’s pretty easy for me to contribute new/bump existing packages. E.g. adding `pytorch-bin_1_11_0` when `pytorch-bin = pytorch-bin_1_10_0;` already exists

- But you may not want me to do pinning because:

A. I don’t really know much about some other packageset and how its build systems work. It takes a sizable effort to get up to speed.

B. I’m just never really going to have a huge amount of motivation to dive into the downstream effects of my change that I’ll never experience first hand.

C. Even when I do make the effort to make sure everything keeps building consistently I might not have enough experience to do a good job of testing and knowing what I’m missing.

The current situation, it seems to me, leads to huge PRs, which have the following problems:

- long comment threads and lots of context that you have to read through

- much of that context is already stale by the time you read it

- there’s a cadence mismatch between authors, maintainers and reviewers - different people work at different times etc

- large amounts of back-and-forth and hopping between tasks leads to mental fatigue

- nixpkgs itself is a moving target which changes frequently

Instead I’d much rather contribute separate granular PRs for pytorch-bin_1_11_0, torchvision-bin_1_11_0, torchaudio-bin_1_11_0, etc. Then, finally, when all that work is done it’s possible to make a much more focussed effort to roll forward the entire pytorch based ecosystem, involving many more stakeholders.

(I know this “kicks the can down the road” so to speak, and I’m an infrequent contributor as I said. However, I personally think that you eat an elephant one bite at a time.)

Because there are so many people involved that you can’t even ping them in one GitHub comment.

Which only happens because people don’t bump the modules they maintain, and way too many python packages are not even maintained by their listed maintainer.

First question is: How long was the update out before it got bumped? If it was out for multiple weeks then you’re not very fast at updating your packages. Second: Feel free to do PRs against python-updates and link them in the PR or on matrix. Even a revert is fine if the update is just breaking too much and the ecosystem still needs to catch up. Third: For making sure the package still works: Make sure you are executing tests and have a pythonImportsCheck. If the PyPI tarball ships no tests, just fetch straight from source. python-updates is being built on hydra, and regressions are checked and taken care of if they affect more than one or two packages.

There are some updates which just need to happen and you need to see what is broken and fix it afterwards. For example when glibc, binutils or gcc is updated. You can’t revert those updates just because some library is broken.

Yes, like any PR that is not rebuilding 500+ packages should. I moved the pkgconfig adoption out of it because, based on a quick grep, I thought that it was not going to rebuild that much, which didn’t turn out differently.

of packages that were already broken on master and which I didn’t touch in the PR. We cannot expect people to fix pre-existing failures of reverse deps.

Yeah, I moved pkgconfig from buildInputs to nativeBuildInputs because I was in the process of adopting pkgconfig and enabling tests which were disabled over a year ago, with a comment to enable them soon on the next update. First I wanted to fix reverse deps, but then I went into a rabbit hole of not very well maintained packages which were broken in multiple ways and gave up.

Yes, because python is limited in that way and having different versions of the same package in the same environment causes massive issues which are hard to detect. e.g. packages end up empty because Python thinks they are already installed which technically they are.
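To make the "hard to detect" part a bit more concrete, here is a minimal sketch of checking a dependency closure for a package that shows up in two versions. The dependency data and the `find_version_conflicts` helper are made up for illustration; this is not how nixpkgs itself detects such collisions.

```python
from collections import defaultdict


def find_version_conflicts(root, deps):
    """Return {name: sorted versions} for every package that appears in
    more than one version in the dependency closure of `root`.

    `deps` maps (name, version) -> list of (name, version) dependencies.
    """
    seen = set()
    stack = [root]
    versions = defaultdict(set)
    while stack:
        pkg = stack.pop()
        if pkg in seen:
            continue
        seen.add(pkg)
        name, version = pkg
        versions[name].add(version)
        stack.extend(deps.get(pkg, ()))
    return {n: sorted(v) for n, v in versions.items() if len(v) > 1}


# Hypothetical graph: the app wants one pytest, its library another.
deps = {
    ("app", "1.0"): [("libA", "2.0"), ("pytest", "7.1")],
    ("libA", "2.0"): [("pytest", "6.2")],
}
conflicts = find_version_conflicts(("app", "1.0"), deps)
print(conflicts)
```

Only one of the two versions can actually be imported in a single Python environment, so any non-empty result here is a candidate for the kind of weird breakage described above.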

Quick fix if you have an application without reverse deps: Move it out of pythonPackages and use older versions there.

I think this is exactly to @delroth and @rehno’s point though: We need more stability, and those are exactly the kinds of packages that we ought to just have multiple versions of. We (NixOS/cuda-maintainers) have multiple versions of cudaPackages and it works great.

I’ve been waiting for over two years for this day. I can help solve the discoverability, and we make it better than anything pre-flake packages could’ve dreamed of.

I’ve opened up a separate discussion to talk about discoverability

That’s only working because those packages are only used by a very small subset of packages compared to, for example, pytest. We had multiple versions of pytest for a while, and as soon as two different versions mixed, everything went downhill with weird and hard to debug errors. Or for, I think, two weeks we had an empty python3Packages.libcst because I messed up the inputs and no one noticed. If you have a good candidate for a package where we need two versions we can discuss that and see if we can find a solution.

One obvious candidate I can think of now is numpy or tensorflow but that would forbid having a package that anywhere in its dependency tree has any other version of the same package.

> If you have a good candidate for a package where we need two versions we can discuss that and see if we can find a solution.

I’d say that, in my case, often as not the final expression I care about is something that can’t be found in nixpkgs at all (because it’s work related, etc). So I can override e.g. pytorch-bin = pytorch-bin_1_11_0 in my private overlay (globally) without ever worrying about everything else that would break in nixpkgs.

(I reckon the majority of people using NixOS have an overlay with overrides like this, even at home… probably?)

If every user has to maintain their own overlay, that kinda defeats the point of nixpkgs… which is sad but also reflective of my user experience.

> If every user has to maintain an overlay, that kinda defeats the point of nixpkgs… which is sad but also reflective of my user experience

Oh I don’t know about that, I’m very grateful for all the packages nixpkgs provides me that I don’t need to mess around with. Life was hard before nix!

But again, just to reiterate: for me it’s about more granular PR’s, not the pinning process (which is still very valuable to be clear). The overlay is just my bandaid for when I don’t have time to go fiddle around with unrelated stuff in nixpkgs.

As a heavy user of nixpkg pytorch (thanks btw @rehno), I’m confused on the discussion of why packages can’t be arbitrarily updated or removed. I don’t have the staging experience you guys have (@delroth , @samuela) and to be clear; maintainer involvement/pinging, modularity, the PR backlog, and discovering not-broken versions of packages all are obviously serious problems to me.

I thought the point was Nix never promised working builds, just reproducibility. Even nixpkgs unstable is a misnomer since it’s not like the git history is being rewritten. Updates break things; on Debian, on macOS, on Arch, and I’m pretty sure npm and pip don’t even allow updates to be published until they break at least 5 downstream projects.

@rehno I’ve got a project using torch_1_8_1, cuda-enabled torch_1_9_0, and torch_1_9_1 using overlays all in the same file. When I was upgrading 1_9_1 was broken… and I just went and found another version that wasn’t. If nixpkg.torch randomly changed to 1_11_0 and broke everything, I wouldn’t even know it for 2 years probably. Finding a working version might be an open problem, but I’m never irritated when an update goes poorly, I expect it to go poorly.

What would be nice is if, when a package build failed, there was a link to a repo that had only the package in it so I could fork the repo, patch it, use the patch, and ping the maintainer with a PR. Just like any other node/python/deno/rust/ruby/elixir/crystal/haskell/vs-code/atom package.

Sandro, before going into specifics on your reply, I’d just like to state what might be obvious: while I personally think there are issues and possible improvements to be made to the workflow that is currently being used, I’m still extremely grateful for your work (and FRidh’s, and many others’). This is a really hard problem to tackle and the current (imo) flawed process is still better than nothing in many ways.

That sounds like some tooling improvements are in order. Why can’t Hydra send the maintainer(s) of a derivation a breakage notification when a failure happens on certain designated “important” branches (master, staging-next, release branches)? All the data should already be available for that.

This is a bit curious to me and goes against my assumptions. Is there any reason why Python modules would be less maintained (by their listed maintainer) than other derivations in nixpkgs? If they are less maintained, any idea why? If they are similarly well maintained as the rest of nixpkgs, any reason why we have a higher standard for freshness? We don’t do these mechanical bulk bumps for e.g. all GNU packages or other sets you could think about.

As a side note, sometimes there are good reasons to keep packages behind for a short time. We have a bunch of modules that mainly see use in nixpkgs because they’re home-assistant dependencies. HA is super finicky with its version requirements, it’s a pain, and I know we tend to batch updates along with HA version bumps to avoid too much breakage. Maintainers are more likely to be aware of that than a bulk update script and/or a reviewer without context. A recent-ish example I remember there is this pyatmo version bump which was merged before I (maintainer) had any chance to say a word on the PR (5h from PR creation to merge).

I try to be fairly responsive. I built my own tooling to send myself notifications when repology shows my maintained packages as out of date, which frankly sounds like something we ought to have as a standard opt-in - if not opt-out - tool for nixpkgs. I do think there are situations when maintainers are unresponsive. I don’t think it’s reasonable to assume that’s the default situation.

I unfortunately don’t think this is realistic for most maintainers. nixpkgs work is something I do on the side when I have time after my actual full time work, I can’t spend that time trying to find all the changes that might be happening throughout the project and might be impacting my work. I suspect this is the case for many maintainers. Notifications are a different thing though, they are directly actionable for me and would point me directly at what to fix.

Ideally, once python-updates or staging or some of these “wide impact branches” gets into a mostly stable state (like, most things work, only a few % of breakages remain), we’d flip a switch somewhere that sends notifications to all maintainers saying “we’re planning to merge this in X days, the following derivations that you maintain are broken by these large scale changes, please take action by coordinating fixes on PR #nnnnn and sending fixes on the yyyyyyy branch”.

This is still reactive vs. proactive, but we apply the shift-left principle: maintainers are told about the breakages much earlier giving them more time to act before most users are impacted, and potentially finding bugs before the branch is merged (more eyes on the work!).

FWIW, I don’t have a strong opinion on whether maintaining multiple versions is a good solution or just creating more maintenance problems. My gut feeling is towards the latter, but I didn’t spend much time thinking about this.

PS: I would have replied earlier, but I was spending my time yesterday fixing a derivation build that got broken by the last set of Python updates… flexget: unbreak by adding some more explicit dependencies by delroth · Pull Request #170106 · NixOS/nixpkgs · GitHub

I don’t know why there would be less support, but I can say it wasn’t until I started using nix that I realized how much system-baggage python modules have/assume. Almost no major python module is actually written with just python; numpy, opencv, matplotlib, even the stupid cli-progress bar tqdm (that claims it has no dependencies!) still hooks into tkinter, keras, and matplotlib and causes problems because of it. Python modules cause me the most pain of anything I’ve set up in nix, and I’ve compiled electron apps in nix-shell.

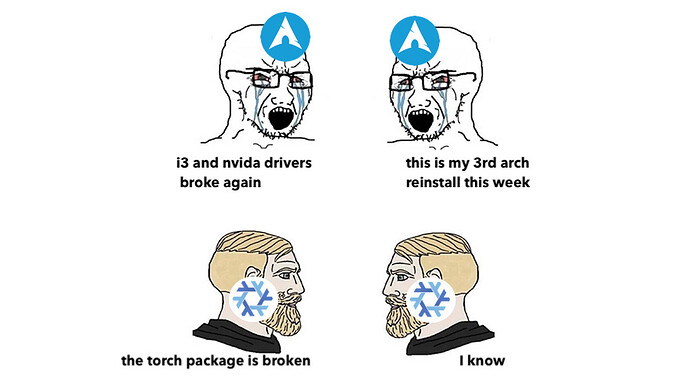

Literally this morning with my robotics group we found import torch; import numpy; causes a segfault but changing the order to import numpy; import torch works fine, whole program runs, torch works great. It’s an absolute house of cards.

One way to think about it is this:

Every package bump that involves transitive dependencies is a little mini release ceremony. However it’s all on one person to make it all the way through to the very end before running out of steam:

- Update your package + dependencies of your package

- Fix the reverse dependencies of your dependencies (excluding your package)

- Fix the dependencies of those reverse dependencies

- Fix the reverse dependencies of those dependencies, and so forth…
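Roughly speaking, the list above is a walk over the reverse-dependency closure of whatever you bumped. A minimal sketch, assuming a toy dependency map (the package names and the `reverse_closure` helper are illustrative only, not nixpkgs tooling, and it ignores the "dependencies of reverse dependencies" step):

```python
from collections import defaultdict, deque


def reverse_closure(changed, deps):
    """Return every package that depends on `changed`, directly or
    transitively. `deps` maps package -> list of its dependencies."""
    # Invert the graph: dependency -> set of packages that use it.
    rdeps = defaultdict(set)
    for pkg, ds in deps.items():
        for d in ds:
            rdeps[d].add(pkg)

    affected = set()
    queue = deque([changed])
    while queue:
        cur = queue.popleft()
        for user in rdeps[cur]:
            if user not in affected:
                affected.add(user)
                queue.append(user)
    return affected


# Hypothetical graph for illustration.
deps = {
    "torchvision": ["pytorch"],
    "torchaudio": ["pytorch"],
    "some-app": ["torchvision"],
    "unrelated": ["numpy"],
}
affected = reverse_closure("pytorch", deps)
print(sorted(affected))
```

Every entry in `affected` is something the author of the bump is currently expected to rebuild and possibly fix, which is why the set growing with each hop is what wears people down.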

My proposal is essentially to leave some packages (completely?) disconnected from the dependency graph until enough of them have been updated before taking that big stride forward.

Potentially if people start connecting up the graph prematurely, maybe it will cause problems? But I think the idea would be to keep hydra “evergreen” so to speak, so that things keep building, but if the top-level/* pins haven’t been rolled forward yet, you have to pin on your own (in your own overlay).

We already do that for hypothesis (usually we are 2 to 4 versions behind), pytest, setuptools and probably some more which can cause breakages in almost every package. Maybe we need to formalize this list and add more things to it. Also we should probably add more important downstream users as passthru tests. I encourage anyone that has good examples to send PRs.

Also feel free to ping me if you get stuck trying to debug some python failure but only if you have a complete log. I don’t have time to dig deeper into issues when I am on the go but I can usually take a quick look at a log and drop some ideas what might be causing this or where to dig more.

@hexa can we somehow do this? This sounds like something which could improve the situation for everyone drastically. Maybe a notification channel that people can subscribe to?

Nothing we can really fix to be honest.

Like Debian, we can’t have every version and every variant of a software. We can make exceptions like for example for ncdu because version 2 does not yet work on darwin.

Probably, but the majority of people on every other distro doing dev work are also using some software from somewhere else. We can’t possibly satisfy the needs of everyone.

For example I have many overlays where I draw in patches from unmerged PRs or update to a development version of a software. It wouldn’t really work if those were in nixpkgs.

No it doesn’t. If you have very special and very customized needs you can very easily adapt nixpkgs to work for you. If there are overlays which a big majority of people need to use, we need to think about how to upstream them, but we can’t make nixpkgs work for every situation for everyone.

Do you and all the others have any overlays on their system right now which really should be upstreamed? Please share them so we can talk about them and hopefully find better answers to the problems than some program needs to be updated to a new version of X.

Those are literally overlays into which you can just copy the contents of a .nix file, with a few lines of boilerplate code, to get things working quickly again. I often do that if I really don’t have time and need things to get back working fast.

There are actually email notifications, which were disabled because they sent way too many emails which all quickly landed in spam. The feature could maybe be resurrected to be opt-in.

No, maybe too many packages? Maybe people got used to not needing to maintain them that well.

I don’t think we can really compare python to gnu libraries. A comparable ecosystem would be node/npm/etc., ruby, go, haskell or rust. Go and Rust mostly use vendored dependencies, so this issue does not apply to them, but others do, like packages not getting CVEs patched. Ruby in nixpkgs is an order of magnitude smaller than python. And finally node, which has even bigger auto updates and is not even recursed into because it is too big. Haskell in nixpkgs is kinda similar to python, with big auto updates which have already broken hadolint for me multiple times. The only difference I know of is that haskell packages follow semver better.

Home-Assistant is an end-user program, which allows us to override anything it requires to a known-working version. Something we cannot easily do in pythonPackages. Also many of its dependencies are not widely used in nixpkgs.

Is that open source? Can we have a link to it?

Ruby has the Gemfile.lock standard which allows vendoring via bundix, so you mostly only need to put non-ruby dependency fixes into nixpkgs. So I think it is somewhat comparable to Go/Rust. Except no one has figured out how to hash the Gemfile.lock/bundix’s gemset.nix to avoid adding it to nixpkgs for applications.

I’ve been working on getting us merge trains, but we’re still not there with GitHub.

Reading all the well-put feedback here, it seems that for staging merges we’d like to compile a list of broken packages compared to master and ping all maintainers.

Anyone that’s up for writing such a script?
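As a starting point, the core of such a script could look something like the sketch below. It assumes you already have per-package build results for master and for the branch, plus a package-to-maintainers map; how to pull those out of Hydra and nixpkgs metadata is deliberately left out, and all names and data here are placeholders.

```python
from collections import defaultdict


def newly_broken(master_ok, branch_ok):
    """Packages that build on master but fail on the branch.

    Packages already broken on master, or missing from master,
    are not counted as regressions.
    """
    return sorted(
        pkg for pkg, ok in branch_ok.items()
        if not ok and master_ok.get(pkg, False)
    )


def pings_by_maintainer(packages, maintainers):
    """Group the given packages by maintainer handle."""
    pings = defaultdict(list)
    for pkg in packages:
        for handle in maintainers.get(pkg, []):
            pings[handle].append(pkg)
    return dict(pings)


# Placeholder data: True means the build succeeded.
master_ok = {"pkg-a": True, "pkg-b": True, "pkg-c": False}
branch_ok = {"pkg-a": False, "pkg-b": True, "pkg-c": False, "pkg-d": False}
maintainers = {"pkg-a": ["alice", "bob"], "pkg-c": ["carol"], "pkg-d": ["dave"]}

regressions = newly_broken(master_ok, branch_ok)
pings = pings_by_maintainer(regressions, maintainers)
for handle, pkgs in sorted(pings.items()):
    print(f"@{handle}: broken on this branch but not on master: {', '.join(pkgs)}")
```

Filtering against master first means nobody gets pinged for failures that already existed before the branch, which also keeps the volume low enough that the notifications stay actionable.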

Quick concrete suggestion that could help with “versioning” workflow via overlays: Flake inputs should be able to do a sparse checkout of a Git repository · Issue #5811 · NixOS/nix · GitHub

Compared to my current workflow which involves painfully updating hashes:

[RFC 0109] Nixpkgs Generated Code Policy by Ericson2314 · Pull Request #109 · NixOS/rfcs · GitHub I think would help with these things. I guess we might need more shepherds to unblock things?

Our community is in limbo as in waste-of-time projects like Flakes suck all the energy out of the room yet there is little governance mechanism to coordinate tackling the problems Nixpkgs faces in bold, innovative ways.

We have these conversations year after year, but nothing happens other than various tireless contributors working harder and harder.

The only way to make things more sustainable is

- to improve the technology Nixpkgs uses

- to work with upstream communities to tackle the social problems together.

If we have fixed governance, we can actually tackle these issues. But right now, we cannot.

> Our community is in limbo as in waste-of-time projects like Flakes suck all the energy out of the room

Characterizing flakes as a waste of time while at the same time bringing up your pet project RFC 109 (which won’t actually solve the issues brought up in this discussion) seems rather odd.

Flakes do in principle allow us to reduce the scope of Nixpkgs, by moving parts of Nixpkgs into separate projects. E.g. a lot of “leaf” packages and NixOS modules could be moved into their own flakes pretty easily. But it’s important to understand that this does not solve the integration/testing problem - it just makes it somebody else’s problem.