Hi everyone,

I’ve been working on RepX, a toy project I have been using for the last couple of months. It’s a framework for defining and running high-performance computing (HPC) experiments, effectively a Nix-based alternative to the Guix Workflow Language (GWL).

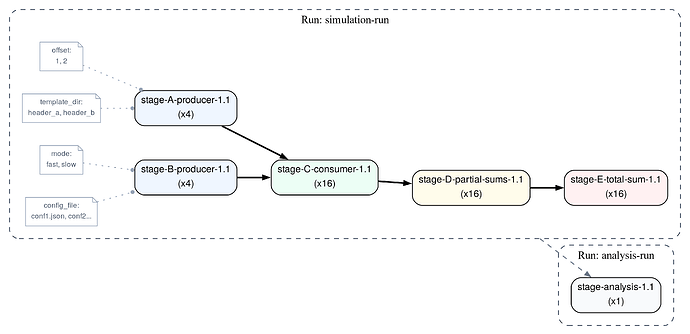

The core philosophy is to decouple the definition of an experiment from its execution. You define the entire topology and software environment as a Nix flake, build it locally into a portable “Lab” artifact, and then ship that artifact to a cluster for execution.
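The definition/execution split can be illustrated with a minimal Python sketch. This is not RepX's code or API. It assumes the topology is plain data that maps each stage to the stages it depends on, and the stage names are hypothetical. Execution is a separate step that needs only that data plus a callable to run each stage. A built Lab artifact provides the same kind of separation.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical experiment topology: each stage maps to the set of stages it
# depends on. In RepX this would be declared in a Nix flake; here it is plain data.
TOPOLOGY: dict[str, set[str]] = {
    "generate-inputs": set(),
    "simulate": {"generate-inputs"},
    "analyze": {"simulate"},
    "plot": {"analyze", "generate-inputs"},
}


def execute(topology: dict[str, set[str]], run_stage: Callable[[str], None]) -> list[str]:
    """Run every stage after all of its dependencies and return the order used.

    Raises graphlib.CycleError if the topology contains a cycle.
    """
    order = list(TopologicalSorter(topology).static_order())
    for stage in order:
        run_stage(stage)  # a local call here; could equally submit to a scheduler
    return order


if __name__ == "__main__":
    execute(TOPOLOGY, lambda stage: print(f"running {stage}"))
```

If you replace `run_stage` with a function that submits work to a cluster scheduler, the jobs run somewhere else, but the topology definition stays exactly the same.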

Repository: repx-org/repx on GitHub (“A framework for defining, building, and executing reproducible HPC experiments using Nix.”)

The Build vs. Runtime Separation

The main friction point RepX solves is running Nix-defined workflows on HPC clusters where you cannot install Nix.

- Build time: You need Nix. You define pipelines, parameter sweeps, and dependencies using `repx-nix`. This produces a closure containing everything your experiment needs.
- Run time: You do not need Nix. You only need a Linux kernel with user namespace support. The `repx-runner` (written in Rust) syncs the closure to the cluster and executes jobs inside isolated containers (using Bubblewrap or Podman) derived from that closure. It supports content-addressable caching and the Slurm scheduler.
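Parameter sweeps and content-addressable caching work well together. Each point in the sweep becomes a job, and each job's cache key is a hash of everything that determines its output. The Python sketch below shows this idea under simplifying assumptions. The closure path, the cache layout, and the `run_job` callback are all hypothetical and do not describe RepX's actual scheme. The sketch uses the closure's store path as a stand-in for the software environment, because Nix store paths already embed a hash of their build inputs.

```python
import hashlib
import itertools
import json
import tempfile
from pathlib import Path
from typing import Callable


def expand_sweep(sweep: dict[str, list]) -> list[dict]:
    """Cartesian product of parameter values: one parameter dict per job."""
    keys = sorted(sweep)
    return [dict(zip(keys, values)) for values in itertools.product(*(sweep[k] for k in keys))]


def job_key(closure_path: str, params: dict) -> str:
    """Content address of a job: SHA-256 over its closure path and canonical JSON params."""
    payload = json.dumps({"closure": closure_path, "params": params}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def run_sweep(closure_path: str, sweep: dict[str, list], cache_dir: Path,
              run_job: Callable[[dict], str]) -> list[dict]:
    """Run each job unless its output is already cached; return the jobs actually run."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    executed = []
    for params in expand_sweep(sweep):
        out = cache_dir / job_key(closure_path, params)
        if out.exists():
            continue  # cache hit: identical inputs already produced this output
        out.write_text(run_job(params))
        executed.append(params)
    return executed


if __name__ == "__main__":
    closure = "/nix/store/hypothetical-experiment-closure"  # hypothetical store path
    sweep = {"n": [10, 100], "seed": [0, 1]}
    with tempfile.TemporaryDirectory() as tmp:
        cache = Path(tmp)
        print(len(run_sweep(closure, sweep, cache, lambda p: str(p))))  # 4: all jobs run
        print(len(run_sweep(closure, sweep, cache, lambda p: str(p))))  # 0: all cached
```

If you extend the sweep with a new value, only the new combinations run. If the closure changes, the store path changes too, so every key changes and nothing stale is reused.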
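Nix-built binaries refer to their dependencies by absolute `/nix/store/...` paths. A closure copied to a cluster can therefore run without Nix, provided it is mounted back at `/nix/store` inside a user namespace. The sketch below only assembles a Bubblewrap command line for this. It illustrates the general technique and is not how `repx-runner` actually invokes `bwrap`. The host paths and the job command are hypothetical.

```python
import shlex


def bwrap_argv(store_dir: str, workdir: str, command: list[str]) -> list[str]:
    """Assemble (but do not run) a Bubblewrap invocation for one job.

    store_dir: host directory holding the shipped closure (hypothetical layout).
    workdir:   host directory where the job may write its outputs.
    command:   the job's entry point, as an absolute /nix/store path plus args.
    """
    return [
        "bwrap",
        "--unshare-all",                       # new user (if possible), pid, net, ipc, uts, cgroup namespaces
        "--die-with-parent",                   # tear the sandbox down if the runner exits
        "--ro-bind", store_dir, "/nix/store",  # closure appears at its original, hard-coded paths
        "--bind", workdir, "/work",            # the only writable host directory
        "--proc", "/proc",
        "--dev", "/dev",
        "--tmpfs", "/tmp",
        "--chdir", "/work",
        "--",                                  # end of bwrap options; the job command follows
        *command,
    ]


if __name__ == "__main__":
    argv = bwrap_argv(
        "/scratch/lab/store",                  # hypothetical host paths
        "/scratch/lab/jobs/job-0",
        ["/nix/store/hypothetical-sim/bin/sim", "--seed", "0"],
    )
    print(shlex.join(argv))  # subprocess.run(argv) would actually execute it
```

`--unshare-all` also removes network access. Bubblewrap's `--share-net` option can restore it for jobs that need the network.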

Comparison

| Feature | RepX | Guix Workflow Language (GWL) | Snakemake / Nextflow |
|---|---|---|---|
| Definition Language | Nix | Guile Scheme | Python / Groovy (DSL) |
| Dependency Management | Functional (Nix Store) | Functional (Guix Store) | External (Conda, Docker, Modules) |
| Build Requirements | Nix | Guix | Language Runtime (Python/Java) |
| Runtime Requirements | Kernel w/ User Namespaces | Guix Daemon (usually) | Conda/Singularity/Docker installed on cluster |
| Execution Model | Ship closure & orchestrate remotely | Local execution or offloading | Workflow engine runs on cluster/node |

All feedback is welcome.