TL;DR: I have successfully installed NixOS to the eMMC storage of my aarch64 machine, but installing it to the NVMe is proving difficult.

Any help would be appreciated.

I have an aarch64 machine with NixOS installed on its eMMC drive. The machine also has 4 NVMe drives that back a ZFS zpool, which looks like this:

❯ zfs list

NAME USED AVAIL REFER MOUNTPOINT

rpool 3.13G 10.7T 140K none

rpool/data 2.00M 10.7T 256K none

rpool/home 140K 10.7T 140K legacy

rpool/nixos 3.13G 10.7T 3.13G legacy
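Both datasets meant for NixOS show a `legacy` mountpoint here, which, as far as I understand, is what a plain `fsType = "zfs"` entry in `fileSystems` expects. A minimal sketch of how that could be checked from a script follows; `legacy_datasets` is a hypothetical helper name, and it assumes the tab-separated output of `zfs list -H -o name,mountpoint` on stdin.

```shell
# Hypothetical helper: read "name<TAB>mountpoint" lines on stdin (the format
# printed by `zfs list -H -o name,mountpoint`) and print the name of every
# dataset whose mountpoint property is "legacy".
legacy_datasets() {
  awk -F '\t' '$2 == "legacy" { print $1 }'
}
```

It would be used as `zfs list -H -o name,mountpoint | legacy_datasets`, and for the pool above it should list `rpool/home` and `rpool/nixos`.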

What I’d like to do is reinstall NixOS on the rpool/home and rpool/nixos datasets, which are meant to host the user home directories and the NixOS filesystem root, respectively. I want to do this because ZFS backed by the NVMe SSDs is likely to be a lot more durable than the eMMC drive.

I mount the datasets, and the /boot-disk-to-be, with the following:

MNT="$(mktemp -d)"

❯ sudo mount rpool/nixos $MNT

❯ sudo mount rpool/home $MNT/home

❯ sudo mount /dev/disk/by-uuid/0467-D8D4 $MNT/boot
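Since `nixos-install` writes into whatever is under the install root, it may be worth confirming that all three mount points are really mounted before running it. The following is only a sketch: `is_mounted` and `check_install_mounts` are hypothetical helper names, and it assumes a Linux-style mounts table (`/proc/self/mounts` by default) and paths without spaces.

```shell
# Hypothetical helper: succeed if path $1 is listed as a mount point in the
# mounts table $2 (default: /proc/self/mounts, which exists on Linux).
is_mounted() {
  awk -v target="$1" '$2 == target { found = 1 } END { exit !found }' \
    "${2:-/proc/self/mounts}"
}

# Hypothetical helper: report the state of the three mount points used for
# the install under the root $1; returns non-zero if any is missing.
check_install_mounts() {
  status=0
  for dir in "$1" "$1/home" "$1/boot"; do
    if is_mounted "$dir" "${2:-/proc/self/mounts}"; then
      echo "ok:      $dir"
    else
      echo "missing: $dir"
      status=1
    fi
  done
  return "$status"
}
```

Running `check_install_mounts "$MNT"` after the mount commands would print one line per mount point.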

Finally, I install with:

❯ cd ~/host-config-flakes/cumulus && \

nix flake update && \

sudo nixos-install --root "${MNT}" --flake .#cumulus

warning: Git tree '/home/j/host-config-flakes' is dirty

warning: Git tree '/home/j/host-config-flakes' is dirty

building the flake in git+file:///home/j/host-config-flakes?dir=cumulus...

warning: Git tree '/home/j/host-config-flakes' is dirty

installing the boot loader...

setting up /etc...

Copied "/nix/store/9kv0gfbf8gr2dfndqyx26nkv3s486l5j-systemd-257.2/lib/systemd/boot/efi/systemd-bootaa64.efi" to "/boot/EFI/systemd/systemd-bootaa64.efi".

Copied "/nix/store/9kv0gfbf8gr2dfndqyx26nkv3s486l5j-systemd-257.2/lib/systemd/boot/efi/systemd-bootaa64.efi" to "/boot/EFI/BOOT/BOOTAA64.EFI".

⚠️ Mount point '/boot' which backs the random seed file is world accessible, which is a security hole! ⚠️

⚠️ Random seed file '/boot/loader/random-seed' is world accessible, which is a security hole! ⚠️

Random seed file /boot/loader/random-seed successfully refreshed (32 bytes).

Created EFI boot entry "Linux Boot Manager".

setting up /etc...

setting up /etc...

setting root password...

New password:

Retype new password:

passwd: password updated successfully

installation finished!

So the install (including setting up the boot loader) ostensibly goes fine, without any visible errors.

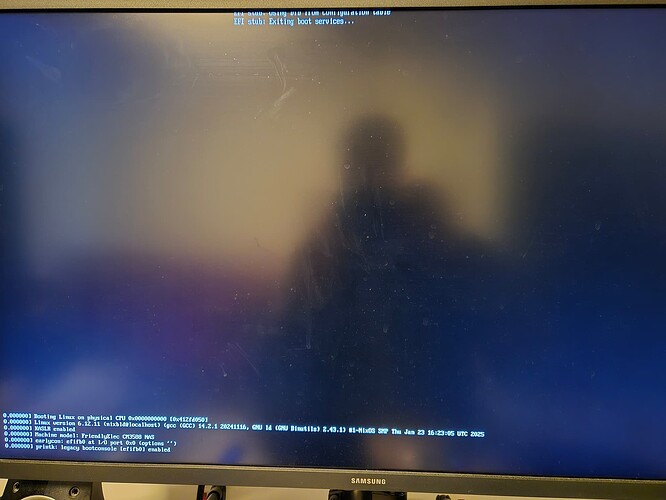

I can also look at $MNT/ and see the file system structure created there. When I boot the newly installed system, the boot menu appears and I can select the latest NixOS generation from it. It then appears to boot before the screen goes blank (which in and of itself is expected behavior, since there is no HDMI output support at this time).
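One more thing that can be inspected from the running eMMC system is what the boot menu entries on the new ESP actually contain. A rough sketch, assuming the systemd-boot entries live under `loader/entries/*.conf` on the ESP; `show_boot_entries` is a hypothetical helper name:

```shell
# Hypothetical helper: for every systemd-boot entry under $1/loader/entries,
# print the file name followed by its "title" and "options" lines (the
# options line holds the kernel command line for that generation).
show_boot_entries() {
  for entry in "$1"/loader/entries/*.conf; do
    [ -e "$entry" ] || continue   # the glob matched nothing
    echo "== $(basename "$entry")"
    grep -E '^(title|options)[[:space:]]' "$entry" || true
  done
}
```

With the ESP mounted as above, `show_boot_entries "$MNT/boot"` would show which kernel parameters the newest generation is booted with.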

The problem though is that I’m supposed to be able to ssh into the machine, and I cannot.

The only difference between the configurations of the two installs should be the fileSystems blocks in hardware.nix (one set for eMMC, and one for ZFS + NVMe). And yet one works and the other doesn’t. So perhaps something is wrong with the way I configured NixOS to run on ZFS?

For completeness, here are my system config files:

# flake.nix

{

description = "NixOS Configuration Flake (cumulus)";

inputs = {

# NixOS official package source, using the `nixos-unstable` branch

nixpkgs.url = "github:NixOS/nixpkgs/nixos-unstable";

home-manager.url = "github:nix-community/home-manager";

home-manager.inputs.nixpkgs.follows = "nixpkgs";

# wezterm.url = "github:wez/wezterm/main?dir=nix";

};

outputs = inputs@{ self, nixpkgs, home-manager, ... }: {

nixosConfigurations.cumulus = nixpkgs.lib.nixosSystem {

system = "aarch64-linux";

modules = [

# Import the old config file so it will still take effect

./configuration.nix

home-manager.nixosModules.home-manager

{

home-manager.useGlobalPkgs = true;

home-manager.useUserPackages = true;

home-manager.users.j = import ./home.nix;

# Optionally, use `home-manager.extraSpecialArgs`

# to pass arguments to ./home.nix

}

];

};

};

}

# configuration.nix

{ config, lib, pkgs, ... }:

{

imports = [ ./hardware.nix ];

boot = {

kernelParams = [ "earlycon=efifb" "boot=zfs" ];

loader = {

efi.canTouchEfiVariables = true;

systemd-boot = {

enable = true;

configurationLimit = 16;

};

};

};

boot.supportedFilesystems.zfs = true;

boot.zfs.forceImportRoot = true;

boot.initrd.systemd.enable = true;

boot.initrd.supportedFilesystems.zfs = true;

# services.zfs = {

# autoScrub.enable = true;

# autoSnapshot.enable = true;

# };

networking.hostId = "54e492c4"; # See https://nixos.org/manual/nixos/unstable/options#opt-networking.hostId

hardware = {

enableRedistributableFirmware = true;

deviceTree = {

enable = true;

name = "rockchip/rk3588-friendlyelec-cm3588-nas.dtb";

};

};

# Set your time zone.

time.timeZone = "Europe/Amsterdam";

# Select internationalisation properties.

i18n.defaultLocale = "en_US.UTF-8";

# Enable the X11 windowing system.

# services.xserver.enable = true;

# Configure keymap in X11

# services.xserver.xkb.layout = "us";

# services.xserver.xkb.options = "eurosign:e,caps:escape";

# Enable CUPS to print documents.

# services.printing.enable = true;

# Enable sound.

# hardware.pulseaudio.enable = true;

# OR

# services.pipewire = {

# enable = true;

# pulse.enable = true;

# };

# Enable touchpad support (enabled default in most desktopManager).

# services.libinput.enable = true;

# Define a user account. Don't forget to set a password with ‘passwd’.

users.users.j = {

isNormalUser = true;

initialPassword = "<SOME PASSWORD>";

description = "j";

extraGroups = [ "networkmanager" "wheel" "zmount" ];

shell = pkgs.zsh;

openssh.authorizedKeys.keys = [

# SNIP

];

packages = with pkgs; [];

};

users.users.git = {

isNormalUser = true;

description = "A user account to manage & allow access to git repositories";

extraGroups = [ "git" "networkmanager" ];

shell = "${pkgs.git}/bin/git-shell";

openssh.authorizedKeys.keys = [

# SNIP

];

packages = with pkgs; [];

};

users.groups.zmount = {}; # used for mounting ZFS datasets

users.defaultUserShell = pkgs.zsh;

nix.settings.experimental-features = ["nix-command" "flakes"];

# Allow unfree packages

nixpkgs.config.allowUnfree = true;

programs.zsh.enable = true;

programs.zsh.ohMyZsh = {

enable = true;

plugins = [

"git"

"git-prompt"

"man"

"rsync"

"rust"

"ssh-agent"

];

theme = "agnoster";

};

programs.zsh.shellInit = ''

# Load SSH key

# eval "$(ssh-agent -s)" > /dev/null

# ssh-add ~/.ssh/id_ed25519.cumulus 2> /dev/null

# echo "🪐loaded system ZSH configuration🪐"

'';

programs.zsh.shellAliases = { # system-wide

l = "eza -ahlg"; # list

lT = "eza -hlgT"; # tree

};

# List packages installed in system profile. To search, run:

# $ nix search wget

environment.systemPackages = with pkgs; [

bat

bottom

cifs-utils

samba

curl

emacs

eza # nicer replacement for `ls`

fd # An alternative for `find`

git

lm_sensors

neofetch

nix-tree

ripgrep

rsync

tokei

vim

];

# Some programs need SUID wrappers, can be configured further or are

# started in user sessions.

# programs.mtr.enable = true;

# programs.gnupg.agent = {

# enable = true;

# enableSSHSupport = true;

# };

services = {

openssh = {

enable = true;

settings = {

KbdInteractiveAuthentication = true;

PasswordAuthentication = true;

PermitRootLogin = "yes";

};

};

};

networking = {

dhcpcd.enable = false;

firewall.enable = false;

hostName = "cumulus";

useDHCP = false;

useNetworkd = true;

hosts = {

# SNIP

};

};

systemd = {

network = {

enable = true;

networks = {

enP4p65s0 = {

address = [ "192.168.1.145/24" ];

matchConfig = { Name = "enP4p65s0"; Type = "ether"; };

gateway = [ "192.168.1.1" ];

};

};

};

services.internetAccess = {

wantedBy = [

# "multi-user.target"

];

after = [

"network.target"

"multi-user.target"

];

description = "Add internet access.";

path = [pkgs.bash pkgs.iproute2];

script = ''

ip route add default via 192.168.1.1 dev enP4p65s0

'';

serviceConfig = {

Type = "oneshot";

User = "root";

Restart = "no";

};

};

};

# Open ports in the firewall.

# networking.firewall.allowedTCPPorts = [ ... ];

# networking.firewall.allowedUDPPorts = [ ... ];

# Or disable the firewall altogether.

# networking.firewall.enable = false;

# Copy the NixOS configuration file and link it from the resulting system

# (/run/current-system/configuration.nix). This is useful in case you

# accidentally delete configuration.nix.

# system.copySystemConfiguration = true;

# This option defines the first version of NixOS you have installed on this

# particular machine, and is used to maintain compatibility with application

# data (e.g. databases) created on older NixOS versions.

#

# Most users should NEVER change this value after the initial install, for

# any reason, even if you've upgraded your system to a new NixOS release.

#

# This value does NOT affect the Nixpkgs version your packages and OS

# are pulled from, so changing it will NOT upgrade your system - see

# https://nixos.org/manual/nixos/stable/#sec-upgrading for how to

# actually do that.

#

# This value being lower than the current NixOS release does NOT

# mean your system is out of date, out of support, or vulnerable.

#

# Do NOT change this value unless you have manually inspected all

# the changes it would make to your configuration, and migrated

# your data accordingly.

#

# For more information, see `man configuration.nix` or

# https://nixos.org/manual/nixos/stable/options#opt-system.stateVersion .

system.stateVersion = "24.11"; # Did you read the comment?

}

# hardware.nix

{ config, lib, pkgs, modulesPath, ... }:

{

imports = [

(modulesPath + "/installer/scan/not-detected.nix")

];

boot.initrd.availableKernelModules = [ "nvme" "usbhid" ];

boot.initrd.kernelModules = [ "zfs" ];

boot.kernelModules = [ ];

boot.extraModulePackages = [ ];

# Installing NixOS using these works:

# fileSystems."/" = { # eMMC

# device = "/dev/disk/by-id/mmc-A3A564_0xe6596dd2-part3";

# fsType = "ext4";

# # options = [ "lazytime" ];

# };

# fileSystems."/boot" = { # eMMC

# device = "/dev/disk/by-id/mmc-A3A564_0xe6596dd2-part2";

# fsType = "vfat";

# # options = [ "umask=0077" "fmask=0077" "dmask=0077" ];

# };

# But with these, the install completes and the booted system is unreachable over SSH:

fileSystems."/" = {

device = "rpool/nixos";

fsType = "zfs";

};

fileSystems."/home" = {

device = "rpool/home";

fsType = "zfs";

};

fileSystems."/boot" = {

device = "/dev/disk/by-uuid/0467-D8D4"; # points to /dev/nvme0n1p1

fsType = "vfat";

options = [ "fmask=0077" "dmask=0077" ];

};

swapDevices = [ ];

# Enables DHCP on each ethernet and wireless interface. In case of scripted

# networking (the default) this is the recommended approach. When using

# systemd-networkd it's still possible to use this option, but it's

# recommended to use it in conjunction with explicit per-interface

# declarations with `networking.interfaces.<interface>.useDHCP`.

## networking.useDHCP = lib.mkDefault true;

# networking.interfaces.eth0.useDHCP = lib.mkDefault true;

# networking.interfaces.enP4p65s0.useDHCP = lib.mkDefault true;

nixpkgs.hostPlatform = lib.mkDefault "aarch64-linux";

powerManagement.cpuFreqGovernor = lib.mkDefault "powersave";

}