This post was flagged by the community and is temporarily hidden.

I used to be able to click “View content” and still see posts like this, now I can’t.

Is this an intended change or just a new default for Discourse?

This was brought by an update.

I can’t find it in the changelogs to provide a reference, but “DEV: Only allow expanding hidden posts for author and staff” by Drenmi (Pull Request #21052, discourse/discourse on GitHub) and “The persistence of posts hidden by flags are a bane to our community” (UX, Discourse Meta) seem to match the behavior we have.

Hidden posts are now configured by default to be visible only by users of Trust Level 4, which is attainable only by being promoted to it.

That being said, we are still discussing that setting, but right now we are reluctant to make hidden posts available to a wide audience again.

I’d vote for letting people see hidden replies, for context alone. In some of the recent posts around diversity in the community, several replies were hidden, but also received a lot of replies in the thread. If that original reply gets hidden, a reader of the thread will be unable to understand the context of the replies.

Reading the Discourse documentation, it seems like admins can tie hidden post visibility to groups, which means that it seems possible for it to be opt-in as a meet in the middle kind of solution, if there’s a publicly joinable group without publicly visible members (docs from meta.discourse.org). I might be misunderstanding how one of the cogs in the Discourse machine works here, but seemed like another option that was worth mentioning.

I think we will keep the current setting, it has some upsides for moderation which makes life a bit easier for us:

Maybe there will be some complications down the road, but for now I would like to try this out, since I’m quite optimistic that these changes will improve discussions. We can take a look next year to see whether we need to make some changes to those settings, once we have some experience/data with it.

This does put greater pressure on the moderation team to confirm/deny community flagging on a timely basis. Due to the way Discourse keeps track, this means that persistent false and incorrect flagging ends up penalized. This could improve the quality and reliability of that flagging from the community.

The system is described in Managing user reputation and flag priorities - Site Management - Discourse Meta. Assuming the system is still somewhat the same, it is important for people to understand that the agree/disagree/ignored percentages will impact their ability to flag. We should also ensure that the ignored percentage is not too high if we want the system to be trusted and to allow fully hiding flagged posts by default.

Many controversial comments will be flagged by many but liked by many.

It is in the moderators’ interest to let controversy die down but it is simply silencing a lot of people’s opinions for the ease of moderating the topic.

This is not a good direction to go down. Whoever has had their message flagged will get a lot of DMs from curious users asking what they posted, or they will start posting on social media platforms themselves and intentionally or unintentionally rile people up.

This ensures that all the issues fester outside while keeping the discourse clean, but you still have the same riled up people ready to explode or even leave at the slightest change.

The flag feature can be easily abused by many now. It’s a very powerful tool for silencing anything that you don’t like.

Previously it was not silencing, just dismissing it.

The year long experiment might prove to be successful but at what cost? Just to convenience a few moderators while losing tons of opportunities to change people’s minds by letting them see the opposing views, for a whole year.

Now this restricts the whole of Discourse to an official tech help and announcements channel and an echo chamber of positivity. But the unofficial spaces will see an uptick in discussions among people who are simply not happy.

I don’t see how this is a good thing compared to what we have now.

The flagging feature is essentially a delegation of power from moderators to the community. For this to succeed, moderator review of flagging is critical. Let’s participate. Let’s flag some content (assuming there will be some due a flagging) and see:

It may work, or it may prompt criticism toward moderators. I hope it will be the former, but the latter can also contribute to improvement.

Sorry for the long read, no time for editing. TLDR is I’m yet again equivocating and wishing for a technical solution to a social problem

I was initially confused and annoyed by the change: for a moment I wasn’t sure if my browser isn’t just broken, and for another I lamented we’re not using a platform that would help me just replicate messages for my own reading. It also didn’t help that the change came unannounced, but who’s here paid to read discourse changelogs?..

Regardless, now I had some time to reflect on this. I tried skimming some explanations of how discourse flagging works[1][2] (I have not read the full posts) to see if e.g. “flags” can negatively impact a user’s ratings (“trust level” and authorizations), which I think in most cases they do not. I think this system could be useful. However, I think we’d have to adjust our attitudes to what being flagged means, and maybe even document how we hope the tool would be used.

I currently have a biased and limited understanding of “moderation”. I like to believe discourse peers generally have thoughts they try to communicate, opinions to convince each other of. I think communication tends to fail, i.e. the message as interpreted by the reader falls far from the original thought encoded by the writer. Ultimately, I think communication fails as a rule because it necessarily must. I’m convinced that the path towards a shared understanding is always narrow and deeply personal: an explanation that works for one audience fails with another. This also happens because people assume and leave out context, because they really speak different evolutions of ostensibly the same language, because their writing is emotionally loaded, because the reader is emotional, because peers grew up each in their own history, and sometimes because “history” is what the reader and the writer have in common. Because it’s just hard to deal with the fact that your own and your peer’s values may be fundamentally incompatible. When these errors occur they accumulate. They further widen the gaps between each two peers’ perceptions of the same situation, leading to even larger errors and “derailing” the conversation. To summarize, each time you make an argument you may expect it to fail regardless of whether you’re “right” or “wrong” on the technical matter, but only because you just haven’t found the path[3] to relay thoughts to the particular party.

When communicating in person we rely on facial expressions and intonation to serve as an error detection mechanism. In textual media there’s no universally adopted way to send “acknowledgements”, nor are we always wary of when the other party ends up on a different page than ourselves. I know I’m not. To be very concrete, there’s no “button” to say: “whatever you were trying to say went past me because I’m just hurt by the way you said it or by the accompanying detail”. “Flags” could be that.

The mode of operation I would be interested to see in action is that a hidden post should mean “this didn’t work” and “your message won’t be perceived until you’ve found a genuine new way to encode it”. With this approach, “flags”, considered in isolation, would have to be disassociated from “guilt” and “reputation”. A moderator reviewing the flagged message wouldn’t judge about what is or isn’t “offensive” in an abstract and absolute sense, because the flag wouldn’t be an absolute but a personal statement. When any person says they’re disturbed by a conversation they clearly are the best judge for their own feelings. The moderator’s job is then the opposite of how many might perceive it now, namely it would be to decide when an offending message is sufficiently important to warrant un-hiding it

It surely seems like an overreach to hide a message for everyone, not just the offended. That said I can see how this can be efficient at cutting a thread of conversation off before it spirals out to cause damage for no gain. The attitude that could help here is the same as with the email piling up during a long leave: “if it’s important they’ll write again”. We’d have to agree that when your message is hidden it’s not that you’re no longer “allowed” to express your opinion, it’s that if you care you should try again and do find what went wrong last time.

We’d have to find the balance between silencing each other down so much there’s no communication happening at all on one hand, and speaking past each other on the other.

I must acknowledge this system would not favour the vulnerable reporting offense, but maybe we just need different channels for that, which was already brought up elsewhere

Understanding post flags in Discourse - #18 by codinghorror - Using Discourse - Discourse Meta ↩︎

For example, personal attacks normally aren’t the path; repeating the same message in multiple threads regardless of what other people are saying doesn’t seem to convince anyone of anything either ↩︎

Those are interesting thoughts but you seem to be modeling this space as primarily about one-to-one communication. I would argue that, as a public forum, this is primarily about one-to-many communication—an even more complicated affair! This post I’m writing is addressed to you but is intended to be read by any registered user here, as well as unregistered users from the wider internet. And the content of this post reflects not only on me personally but on the NixOS community at large, and in particular it reflects on the communication norms in place in this forum. I could do reputational harm to the project by writing unwisely here, and direct, practical harm by driving people away with my words. That is the risk that official moderation and community moderation (flags) exist to mitigate.

The purpose of flags is not to send any sort of message back to the communicator—we have direct messages for that, and direct messages can be very effective in correcting a miscommunication when both parties are willing. The purpose of flags is to assist the official moderation team in curating the words that represent our community to the world at large, which they do with the goal of fostering a culture that is consistent with our stated values. I don’t think we should propose other potential meanings or connotations for flags, because they would detract from this purpose.

I would say that, right now, DMs are that button, among other purposes. If you want more nuanced post reactions, maybe there’s a Discourse plugin for that, and I wouldn’t be opposed to talking about enabling such a thing. But no, flags are not a good substitute for this, and using them as such would only make moderation more difficult.

Please not even more silencing.

I want to have the option to see what people have to say.

How shall I trust that a flagging system is working well when I can’t even see the content?

It also discriminates against people that have less time to spend on Discourse and check in less frequently: They see less than users that read posts “early enough” before they get flagged.

If this is not possible to restore or change via a setting, can anybody please set up or recommend a crawler software (or service) that mirrors the Discourse, like archive.org but faster?

We relaxed the hiding of hidden posts a bit: they can now be read by users with a high enough trust level (TL3), which can be obtained by engaging with Discourse in helpful ways.

@nh2 maybe the mailing list mode is what you are looking for? discourse supports sending emails for subscribed categories/topics. But mind that engaging with hidden posts is not encouraged and could be seen as throwing fuel into the fire, depending on the situation

How many users currently meet the threshold for TL3? The default looks very hard to meet and maintain from what I read here.

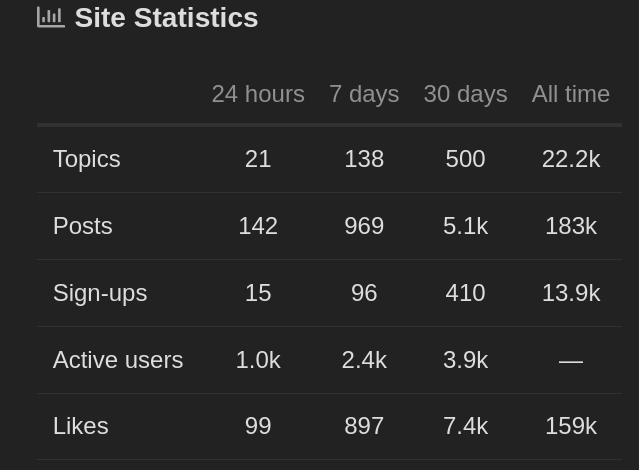

currently we have 41 members with TL3 and 16 with TL4

Indeed TL3 requires:

That seems almost impossible to achieve for people who have a job or family, or who prefer to spend their time contributing code to nixpkgs instead of scrolling Discourse for leisure.

Also, reading 25% of NixOS topics requires extremely … widely spread interest. I have used NixOS at work for ~10 years for all our infrastructure and code builds, and am a committer, but there’s no way even 10% of topics would be relevant to me.

Most top NixOS contributors I checked do not pass this bar, including (apparently) the mods on this thread.

So this doesn’t seem like a good solution to me.

One can see anyone’s trust level in their profile (e.g. https://discourse.nixos.org/u/nh2/summary). For your own profile, click the Expand button there.

I think that if the community wants to signal a behaviour is not acceptable, hiding by default a post is more than enough. No matter how you spin it, allowing only some elite users to see the content is censorship and this shouldn’t have a place in a free software community.

There are already a lot of people that have little trust in the leadership of the project and making things more opaque is not going to help.

I honestly don’t understand this obsession with being able to view hidden posts. Like, if they were good posts and on-topic, they wouldn’t be hidden in the first place. Everybody interested in following a discussion in its entirety can still read everything by enabling mail notifications. There is a sufficiently large set of people (~50) who can still view hidden posts, and thus the moderation team is implicitly accountable to them in their decisions.

That’s alright, it’s not really necessary for everyone to understand everyone. As long as there’s some interest in accessing the content unedited, and there’s means to satisfy it (in this case the email mode, which I’m now trying out, thanks to the hints in this thread), we might as well skip the justifications

P.S. I appreciate rhendric’s reply, we since exchanged one or two DMs to better understand our premises, and I’m slowly working out a follow up (and reflecting whether one is actually needed)

https://discourse.nixos.org/about

50 people are not 4k people (monthly active users), and there’s the “unofficial” spaces.

As for whether 50 people are enough to keep moderators accountable, I doubt it very much, given the number of posts, and how much each person wants to be a part-time moderator moderating moderators.

mail notifications

There is this thing called the future, when the people who are interested in accountability, i.e. the 50 people, go back to their inbox and find nothing there, because they deleted the emails.

If not and they should start keeping track of these emails, then why not add 50 more moderators instead of going through all these hoops?

they wouldn’t be hidden in the first place

You’re just saying false flagging doesn’t exist. I very much doubt that. Since you were a moderator previously, are you sure not one flag was reversed during your term as a moderator? Any other moderator can answer this too.

We are not talking about the obvious spam, hate posts, etc. here, they don’t need much consideration from anyone.

We could keep moderators more accountable with 4000 users, as was the case previously when flagged posts weren’t fully hidden, than with the 50 chronically online users.

In my tenure as a moderator (which was shorter than @piegames’s), it was fairly common for the mod team to unhide posts flagged by community members. It was less common for the mod team to reverse their own flagging decisions, based on either internal deliberation or an appeal from the person whose comment was hidden. I don’t recall any cases in which we reversed one of our own flagging decisions based entirely or in part on input from a third party.

On the other hand, there were too many cases in which people continued making snarly, intentionally provocative posts because they knew that the flimsy curtain of click-through comment-hiding wasn’t actually stopping their message from reaching its intended targets.