Hey, folks!

I’ve got some problems with screen tearing and NVIDIA and was hoping I could find some help here.

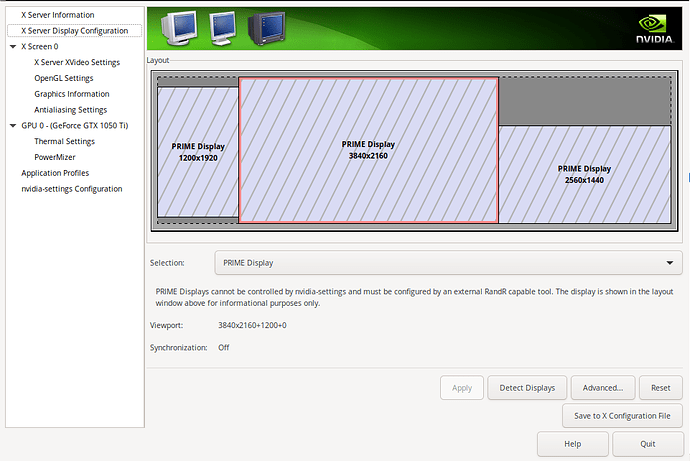

This is on a Dell XPS 9570 with a GeForce GTX 1050 Ti card.

In short: I recently started experiencing screen tearing again after not having had it for about a year. It (probably by coincidence) seemed to show back up after I started Slack for the first time in ages. I took trying to fix this as an opportunity to see whether the NVIDIA drivers would work on my laptop yet (they didn’t previously), and they did. However, the tearing persists, and I suspect that’s because I’m not sure how to address it.

I’ve followed the NixOS Wiki’s Nvidia entry and had a look around forum threads and the Arch wiki, but I feel like I’m still missing some important fundamental knowledge. I have approximately zero knowledge of how this works or how to test it, so please bear with me.

I think the crux of this is that I don’t know how to activate the card or how to tell whether it’s being used. In nvtop, only a single process is listed (the X server), and I can’t seem to get any other processes to show up there. With the nvidia-offload script, however, glxinfo at least shows that it’s using the GPU.
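A quick way to tell which GPU is doing the rendering is to compare glxinfo’s renderer line with and without the offload wrapper, as the system info further down does. A minimal sketch of a shell helper for that comparison; the function name `gl_renderer` is hypothetical, and it only assumes glxinfo prints a line starting with `OpenGL renderer string:`.

```shell
#!/usr/bin/env bash
# gl_renderer (hypothetical helper name): read glxinfo output on stdin and
# print only the renderer name from the "OpenGL renderer string:" line.
gl_renderer() {
  sed -n 's/^OpenGL renderer string: //p'
}

# Example usage (assumes glxinfo and the nvidia-offload wrapper are on PATH):
#   echo "default: $(glxinfo | gl_renderer)"
#   echo "offload: $(nvidia-offload glxinfo | gl_renderer)"
```

In the system info below, only the `nvidia-offload` run reports the GeForce.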

Following the Arch wiki’s advice on avoiding screen tearing, I tried running:

```
$ nvidia-settings --assign CurrentMetaMode="nvidia-auto-select +0+0 { ForceFullCompositionPipeline = On }"
```

But whatever I do with nvidia-settings, I either get completely blank output (when I’m trying to query things) or, when I assign things (such as in the command above), all I get is:

```
ERROR: Error resolving target specification '' (No targets match target specification), specified in assignment 'CurrentMetaMode=nvidia-auto-select +0+0 { ForceFullCompositionPipeline = On }'.
```

I have tried searching the internet for more information on this, but can’t seem to find out what this means.

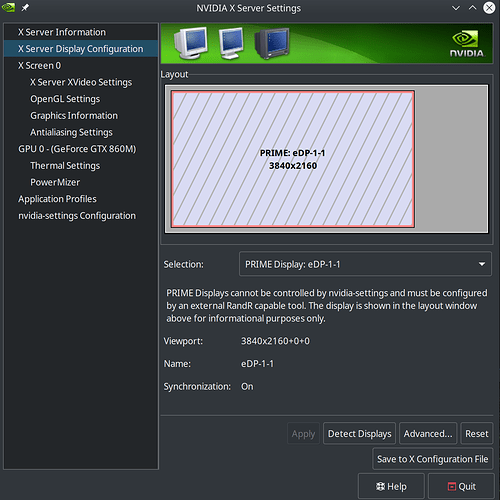

I also seem to be missing some UI elements in the nvidia-settings GUI application: both the Arch wiki and other sources reference items (such as an “Advanced” button) that I can’t find.

Here is some system info (based on what was listed in this question):

```
$ nvidia-smi
Wed Dec  9 09:02:39 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 455.38       Driver Version: 455.38       CUDA Version: 11.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  GeForce GTX 105...  Off  | 00000000:01:00.0 Off |                  N/A |
| N/A   38C    P8    N/A /  N/A |      4MiB /  4042MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A      1138      G   ...-xorg-server-1.20.8/bin/X        4MiB |
+-----------------------------------------------------------------------------+
```

```
$ lspci -k | grep -E "(VGA|3D)"
00:02.0 VGA compatible controller: Intel Corporation UHD Graphics 630 (Mobile)
01:00.0 3D controller: NVIDIA Corporation GP107M [GeForce GTX 1050 Ti Mobile] (rev a1)
```

```
$ xrandr --listproviders
Providers: number : 2
Provider 0: id: 0x46 cap: 0xf, Source Output, Sink Output, Source Offload, Sink Offload crtcs: 3 outputs: 4 associated providers: 0 name:modesetting
Provider 1: id: 0x26d cap: 0x0 crtcs: 0 outputs: 0 associated providers: 0 name:NVIDIA-G0
```
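The `xrandr --listproviders` output above may bear on the nvidia-settings error: the display provider is `modesetting` (presumably the Intel GPU), while `NVIDIA-G0` reports 0 CRTCs and 0 outputs. One plausible, unconfirmed reading is that under PRIME render offload the NVIDIA GPU has no X screen of its own, so nvidia-settings finds no target for `CurrentMetaMode`. A minimal sketch that summarises each provider, assuming the one-line-per-provider format shown above; `provider_summary` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# provider_summary (hypothetical helper name): read `xrandr --listproviders`
# output on stdin and print "<name> crtcs=<n> outputs=<n>" for each provider,
# flagging providers that report no display outputs.
provider_summary() {
  awk '/^Provider [0-9]+:/ {
    name = ""; crtcs = ""; outputs = ""
    # Pick up the value that follows each "crtcs:" / "outputs:" label.
    for (i = 1; i < NF; i++) {
      if ($i == "crtcs:") crtcs = $(i + 1)
      if ($i == "outputs:") outputs = $(i + 1)
    }
    # Everything after "name:" is the provider name.
    if (match($0, /name:.*$/)) name = substr($0, RSTART + 5)
    note = (outputs + 0 == 0) ? " (no display outputs)" : ""
    printf "%s crtcs=%s outputs=%s%s\n", name, crtcs, outputs, note
  }'
}

# Example usage (assumes a running X session that xrandr can connect to):
#   xrandr --listproviders | provider_summary
```

Fed the output above, it prints `modesetting crtcs=3 outputs=4` and `NVIDIA-G0 crtcs=0 outputs=0 (no display outputs)`.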

```
$ nix-shell -p nix-info --run "nix-info -m"
```

- system: `"x86_64-linux"`
- host os: `Linux 5.9.12, NixOS, 20.09.2152.e34208e1003 (Nightingale)`
- multi-user?: `yes`
- sandbox: `yes`
- version: `nix-env (Nix) 2.3.9`
- channels(thomas): `"dhall, nixpkgs-21.03pre251123.b839d4a8557"`
- channels(root): `"nixos-20.09.2152.e34208e1003, nixpkgs-21.03pre251181.dd1b7e377f6"`
- nixpkgs: `/home/thomas/.nix-defexpr/channels/nixpkgs`

```
$ glxinfo | grep "OpenGL renderer"
OpenGL renderer string: Mesa Intel(R) UHD Graphics 630 (CFL GT2)
$ nvidia-offload glxinfo | grep "OpenGL renderer"
OpenGL renderer string: GeForce GTX 1050 Ti with Max-Q Design/PCIe/SSE2
```

`configuration.nix` (relevant sections):

```nix
{ config, pkgs, ... }:

let
  nvidia-offload = pkgs.writeShellScriptBin "nvidia-offload" ''
    export __NV_PRIME_RENDER_OFFLOAD=1
    export __NV_PRIME_RENDER_OFFLOAD_PROVIDER=NVIDIA-G0
    export __GLX_VENDOR_LIBRARY_NAME=nvidia
    export __VK_LAYER_NV_optimus=NVIDIA_only
    exec -a "$0" "$@"
  '';
in {
  boot = {
    kernelParams =
      [ "acpi_rev_override" "mem_sleep_default=deep" "intel_iommu=igfx_off" "nvidia-drm.modeset=1" ];
    kernelPackages = pkgs.linuxPackages_latest;
    extraModulePackages = [ config.boot.kernelPackages.nvidia_x11 ];
  };

  hardware.nvidia.prime = {
    offload.enable = true;
    nvidiaBusId = "PCI:1:0:0";
    intelBusId = "PCI:0:2:0";
  };

  services.xserver = {
    enable = true;
    videoDrivers = [ "nvidia" ];
    windowManager.exwm = {
      enable = true;
    };
    screenSection = ''
      Option "metamodes" "nvidia-auto-select +0+0 {ForceFullCompositionPipeline=On}"
      Option "AllowIndirectGLXProtocol" "off"
      Option "TripleBuffer" "on"
    '';
  };
}
```

Thanks for any input you might have, and if you need to know anything else, please don’t hesitate to ask!

Cheers.