Coincidentally, I walked away from this computer having forgotten to plug it in, and came back to find it had hibernated on a very low battery. I plugged it in, gave it a few minutes, and it restored with 4 VMs still running.

This topic is very interesting to me. I have no idea what the real-world MTBF of modern NVMe drives is, and I guess there are also time costs to slowdowns and crashes! With so many variables, I decided to ask a couple of AI engines what they would say. Their answers are below, and they were pretty much a list of the things we had discussed. Perplexity did recommend something called zswap, which I had never heard of. I also like the idea of a big dedicated partition containing a swap file, so the size is easy to adjust while the swap still stays in one place.

Thanks for the discussion.

Question

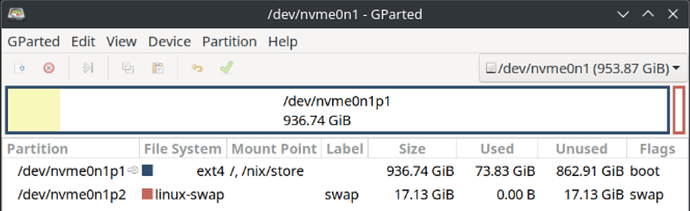

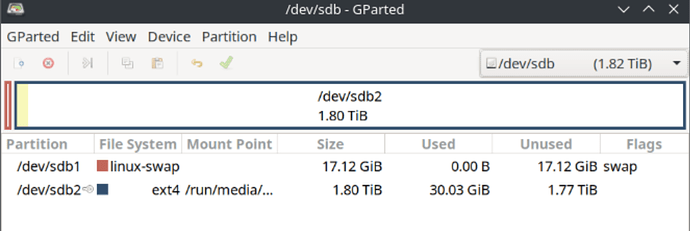

I have a 2023 laptop with 64 GB of 3200 MHz DDR4 memory, a 2 TB PCIe Gen 4 Samsung 980 Pro SSD and an Intel i7-1260P CPU. The laptop has no dedicated GPU. I run NixOS with QEMU/KVM/libvirt and regularly run 12 or more virtual machines, mostly with 3 GB of memory each, with a variety of operating systems, including various Linux distributions and up to 2 Microsoft Windows domains. Can you please provide an analysis of the pros and cons of various swap space configurations for the host, taking into account the performance of the whole system, the risk of crashes and the impact on the lifespan of the NVMe drive? Can you then recommend how the swap space should be configured?

Perplexity

Based on your system configuration and usage, here’s an analysis of various swap space configurations and a recommendation:

- Pros of larger swap space:
  - Increased ability to handle memory-intensive workloads, especially with multiple VMs running
  - Reduced risk of system crashes due to out-of-memory errors
  - Better support for hibernation, if needed
- Cons of larger swap space:
  - Increased wear on the NVMe SSD due to more frequent write operations
  - Potential performance degradation if the system relies too heavily on swap instead of RAM
- Pros of smaller swap space:
  - Reduced wear on the NVMe SSD
  - Potentially faster performance by forcing the system to use RAM more efficiently
- Cons of smaller swap space:
  - Increased risk of system crashes or VM failures if memory demands exceed available RAM and swap
  - Limited ability to handle unexpected memory spikes

Recommendation: Given your high-performance NVMe SSD (Samsung 980 Pro PCIe Gen4) and substantial 64GB of RAM, I recommend the following swap configuration:

- Set the swap space to 32GB (half of your physical RAM). This provides a good balance between having enough swap for memory-intensive operations and minimizing unnecessary SSD wear

- Adjust the swappiness value to a lower setting, around 10-20 (default is 60). This will encourage the system to use RAM more aggressively before resorting to swap, which is beneficial given your large RAM capacity

- Enable zswap, a compressed in-RAM cache for pages being swapped out, which can reduce the frequency of writes to the SSD while still providing swap functionality

- Consider using a swap file instead of a swap partition. This allows for easier resizing if needed in the future

- Place the swap file on a separate NVMe namespace or partition to minimize interference with the host OS and VM storage operations
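A minimal sketch for checking two of the settings suggested above on a running host. It reads the standard kernel files /proc/sys/vm/swappiness and /sys/module/zswap/parameters/enabled (the latter only exists if the kernel includes zswap); the helper names and the 10-20 band check are hypothetical illustrations of Perplexity's suggestion. On NixOS the values themselves would normally be set declaratively, for example vm.swappiness through boot.kernel.sysctl and the zswap.enabled=1 kernel parameter through boot.kernelParams.

```python
from pathlib import Path
from typing import Optional

# Standard kernel interfaces; the zswap file only exists if the kernel has zswap.
SWAPPINESS = Path("/proc/sys/vm/swappiness")
ZSWAP_ENABLED = Path("/sys/module/zswap/parameters/enabled")


def read_value(path: Path) -> Optional[str]:
    """Return the stripped contents of a procfs/sysfs file, or None if unreadable."""
    try:
        return path.read_text().strip()
    except OSError:
        return None


def swappiness_in_band(value: Optional[str], low: int = 10, high: int = 20) -> bool:
    """True if vm.swappiness lies in the suggested 10-20 band (hypothetical check)."""
    return value is not None and low <= int(value) <= high


def zswap_is_enabled(value: Optional[str]) -> bool:
    """zswap's boolean 'enabled' parameter reads back as 'Y' or 'N'."""
    return value == "Y"


if __name__ == "__main__":
    swappiness = read_value(SWAPPINESS)
    zswap = read_value(ZSWAP_ENABLED)
    print(f"vm.swappiness = {swappiness} (in 10-20 band: {swappiness_in_band(swappiness)})")
    print(f"zswap enabled: {zswap_is_enabled(zswap)}")
```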

This configuration should provide a good balance between performance, stability, and SSD longevity. It allows enough swap space to handle memory spikes and prevent crashes, while the lower swappiness and zswap usage will help minimize unnecessary writes to the SSD. The high-performance Samsung 980 Pro SSD should be able to handle the swap operations efficiently when needed. Remember to monitor your system’s memory usage and swap utilization over time. If you consistently see high swap usage, you may need to consider adding more physical RAM or reducing the number of concurrent VMs.
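Monitoring swap utilization can be as simple as reading /proc/meminfo, the same file that tools like free report from. The sketch below, with hypothetical helper names, prints available memory and the percentage of configured swap in use; what counts as "consistently high" is left as a judgment call.

```python
from pathlib import Path
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse lines like 'SwapTotal:  8388604 kB' into {'SwapTotal': 8388604} (most values in kB)."""
    info: Dict[str, int] = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[name.strip()] = int(fields[0])
    return info


def swap_used_percent(info: Dict[str, int]) -> float:
    """Percentage of configured swap currently in use (0.0 if there is no swap)."""
    total = info.get("SwapTotal", 0)
    if total == 0:
        return 0.0
    return 100.0 * (total - info.get("SwapFree", 0)) / total


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():
        info = parse_meminfo(meminfo.read_text())
        print(f"MemAvailable: {info.get('MemAvailable', 0) // 1024} MiB")
        print(f"Swap in use: {swap_used_percent(info):.1f}%")
```

Run periodically (for example from a timer), this gives a history to judge whether swap use is occasional or sustained.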

ChatGPT

Certainly! Given your setup and usage scenario, let’s analyze the pros and cons of different swap space configurations for your host system (NixOS), considering performance, system stability, and NVMe drive lifespan:

1. No Swap (Using Only RAM)

Pros:

- No impact on NVMe drive lifespan due to swap writes.

- Avoids potential performance degradation from swap usage.

Cons:

- Risk of out-of-memory (OOM) crashes if physical memory (RAM) is fully utilized.

- Can limit flexibility in handling memory-intensive tasks or spikes in memory usage.

Consideration: With 64GB of RAM, this setup can work well unless you frequently push the limits of your RAM capacity with your virtual machines.
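A back-of-envelope memory budget makes "pushing the limits" concrete. This sketch assumes 12 Linux guests at 3 GB (from the question), plus two Windows guests at 4 GB each and 4 GB reserved for the NixOS host; those last two figures are hypothetical placeholders. It ignores per-VM QEMU overhead and the fact that guests rarely touch all of their allocated RAM.

```python
from typing import List, Tuple

HOST_RAM_GB = 64.0


def committed_gb(vms: List[Tuple[int, float]], host_reserve_gb: float) -> float:
    """Guest RAM (count x GB per VM) plus memory reserved for the host itself."""
    return sum(count * size_gb for count, size_gb in vms) + host_reserve_gb


vms = [
    (12, 3.0),  # Linux guests at 3 GB each (from the question)
    (2, 4.0),   # Windows guests at 4 GB each (hypothetical)
]
total = committed_gb(vms, host_reserve_gb=4.0)  # 4 GB host reserve (hypothetical)
headroom = HOST_RAM_GB - total
print(f"committed: {total:.1f} GB, headroom: {headroom:.1f} GB")
```

Under these assumptions it prints `committed: 48.0 GB, headroom: 16.0 GB`: a no-swap setup has some margin, but a few extra VMs would use it up.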

2. Swap on NVMe SSD

Pros:

- Provides additional virtual memory when RAM is fully utilized, potentially preventing OOM crashes.

- Utilizes fast NVMe SSD speeds for swap operations.

Cons:

- Increases wear on the NVMe drive due to swap writes, potentially reducing its lifespan over time (although modern SSDs are quite resilient).

Consideration: The Samsung 980 Pro is a high-performance SSD with good endurance, but frequent swapping could still impact its longevity, especially if your workload involves a lot of write-intensive operations.
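Drive wear can be put into numbers by comparing the drive's rated endurance (TBW, terabytes written, a warranty figure distinct from MTBF) with its daily write volume. This sketch assumes 1,200 TBW, Samsung's published rating for the 2 TB 980 Pro (worth verifying against the spec sheet), and the daily write volumes are hypothetical.

```python
def years_to_rated_tbw(rated_tbw: float, daily_writes_gb: float) -> float:
    """Years until cumulative writes reach the drive's TBW rating (1 TB = 1000 GB)."""
    if daily_writes_gb <= 0:
        raise ValueError("daily_writes_gb must be positive")
    return rated_tbw * 1000 / (daily_writes_gb * 365)


RATED_TBW = 1200  # assumed: Samsung's published rating for the 2 TB 980 Pro

# Hypothetical daily write volumes (host + swap), in GB/day.
for daily_gb in (20, 100):
    years = years_to_rated_tbw(RATED_TBW, daily_gb)
    print(f"{daily_gb} GB/day -> about {years:.0f} years to reach {RATED_TBW} TBW")
```

At 20 GB/day this works out to roughly 164 years and at 100 GB/day roughly 33 years, which suggests the rating is unlikely to be the limiting factor unless swapping is heavy and sustained.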

3. Swap on a Separate HDD or SATA SSD

Pros:

- Offloads swap operations from the NVMe SSD, preserving its lifespan.

- Provides swap space without impacting the main SSD’s performance.

Cons:

- Slower swap performance compared to NVMe SSD, which might impact VM performance if frequent swapping occurs.

Consideration: This approach can be beneficial if you are concerned about NVMe drive wear or if you prefer to segregate swap operations from your main storage.

Recommendation:

Based on the analysis, here’s a recommendation tailored to your setup:

Configure a small swap partition on the NVMe SSD, but keep its use limited to avoid excessive wear. Given your system’s RAM size and workload (multiple VMs with varying memory demands), a modest amount of swap space (e.g., 8GB) on the NVMe SSD can act as a safety net for unexpected memory spikes without significantly impacting performance or risking drive longevity.

Additionally, monitor your system’s swap usage regularly. If you find that swap is being used frequently, consider adjusting your VM configurations (e.g., adjusting memory allocations, optimizing resource usage) to reduce the reliance on swap.

Key Points to Keep in Mind:

- Monitor Swap Usage: Use tools like top, htop, or vmstat to check regularly how much swap is in use. This will help you adjust configurations as needed.

- NVMe Endurance: While the Samsung 980 Pro is durable, frequent and heavy swap usage can still shorten its lifespan over time. Keeping swap usage moderate and within reasonable limits is advisable.
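To watch swap activity rather than occupancy (vmstat's si/so columns report similar information), this sketch reads the pswpin/pswpout counters in /proc/vmstat. They are cumulative page counts since boot, so two samples a few seconds apart give a rate, and multiplying the pswpout total by the page size gives a rough swap-out volume since boot that can be compared with the drive's endurance rating. Helper names and the 5-second interval are hypothetical choices.

```python
import os
import time
from pathlib import Path
from typing import Dict, Tuple

VMSTAT = Path("/proc/vmstat")


def parse_vmstat(text: str) -> Dict[str, int]:
    """Parse /proc/vmstat lines of the form 'name value' into a dict."""
    counters: Dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            counters[parts[0]] = int(parts[1])
    return counters


def swap_rates(before: Dict[str, int], after: Dict[str, int],
               interval_s: float, page_size: int) -> Tuple[float, float]:
    """Bytes/s swapped in and out between two samples (pswpin/pswpout count pages)."""
    pages_in = after["pswpin"] - before["pswpin"]
    pages_out = after["pswpout"] - before["pswpout"]
    return pages_in * page_size / interval_s, pages_out * page_size / interval_s


if __name__ == "__main__" and VMSTAT.exists():
    page_size = os.sysconf("SC_PAGE_SIZE")
    first = parse_vmstat(VMSTAT.read_text())
    time.sleep(5)
    second = parse_vmstat(VMSTAT.read_text())
    rate_in, rate_out = swap_rates(first, second, 5, page_size)
    swapped_out_gib = second["pswpout"] * page_size / 2**30
    print(f"swap-in {rate_in / 1024:.1f} KiB/s, swap-out {rate_out / 1024:.1f} KiB/s")
    print(f"swapped out since boot: {swapped_out_gib:.2f} GiB")
```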

By implementing a cautious approach with a small swap partition on the NVMe SSD, you can balance system stability, performance, and NVMe drive longevity effectively in your virtualization environment on NixOS.