So, I actually do something basically exactly like this, including the TPM2, except I went as far as to add Tailscale to the initrd so I could SSH in and unlock from anywhere in the world. So far it has worked flawlessly.

First question: do you actually have `boot.initrd.systemd.enable` set to `true`? It isn’t by default, which means a completely different, scripted initrd is used instead and all of your `boot.initrd.systemd` settings do nothing. AFAIK there’s no way to make ZFS import before LUKS is unlocked with the scripted initrd, though, so you’re on the right track in thinking to use the systemd initrd.
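For reference, opting in is a single option. This is a minimal module fragment, not a complete configuration:

```nix
{ ... }: {
  # Use the systemd-based stage 1 instead of the default scripted initrd.
  # Without this, boot.initrd.systemd.services, .targets, etc. have no effect.
  boot.initrd.systemd.enable = true;
}
```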

Unfortunately, there are a number of things that don’t quite work the way you seem to think they do.

You shouldn’t need this, and it wouldn’t work anyway. This causes NixOS to import extra pools in stage 2, after we’ve mounted the root FS and left the initrd. I’m assuming you want this ZFS pool for your root FS? If not, a lot of what I’m about to say will be the wrong advice.

Any ZFS dataset you configure with `fileSystems` (e.g. in `hardware-configuration.nix`) will automatically get the code needed to import its pool in stage 1 or stage 2, depending on whether it’s needed for core file systems like `/` and `/var`.
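As a rough sketch, assuming two hypothetical pools, `rpool` holding a root dataset `rpool/root` and `tank` holding `tank/home`, both datasets with `mountpoint=legacy`, plain `fileSystems` entries are enough. The first is needed for boot, so its pool gets imported in the initrd; the second isn’t, so its pool is imported in stage 2:

```nix
{ ... }: {
  # Needed for boot, so NixOS imports "rpool" in stage 1 (initrd).
  # Assumes rpool/root has mountpoint=legacy.
  fileSystems."/" = {
    device = "rpool/root";
    fsType = "zfs";
  };

  # Not needed for boot, so NixOS imports "tank" in stage 2.
  # Assumes tank/home has mountpoint=legacy.
  fileSystems."/home" = {
    device = "tank/home";
    fsType = "zfs";
  };
}
```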

Similarly, this shouldn’t be strictly necessary, because NixOS already creates an import service, unless you’d rather disable that one and use a custom one (which I actually do). In that case you need to disable the default one, and you need to import with the `-N` flag so that it doesn’t try to mount anything in the initrd file system. You also need to set `unitConfig.DefaultDependencies = false;`, because the default systemd dependencies are almost never correct during stage 1. Finally, it needs to be ordered before `sysroot.mount`, because that’s the unit that will mount the dataset at the directory that eventually becomes the OS’s root directory when it transitions to stage 2.
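Put together, those four requirements look roughly like the sketch below. It assumes a pool named `rpool` on a single disk whose by-id path is a placeholder, and the unit name `my-import-rpool` is made up. Unlike the full config further down, it does no LUKS or encryptionroot handling:

```nix
{ config, utils, ... }: {
  boot.initrd.systemd = {
    enable = true;

    # 1. Disable the import unit NixOS generates for the pool.
    services.zfs-import-rpool.enable = false;

    # Hypothetical replacement unit; pool name and disk path are placeholders.
    services.my-import-rpool = let
      disk = utils.escapeSystemdPath "/dev/disk/by-id/disk1" + ".device";
    in {
      requiredBy = [ "initrd.target" ];
      # 4. Finish importing before sysroot.mount tries to mount the root dataset.
      before = [ "sysroot.mount" ];
      after = [ "modprobe@zfs.service" disk ];
      requires = [ "modprobe@zfs.service" ];
      wants = [ disk ];
      # 3. The stock dependencies are almost never right in stage 1.
      unitConfig.DefaultDependencies = false;
      serviceConfig = {
        Type = "oneshot";
        RemainAfterExit = true;
      };
      path = [ config.boot.zfs.package ];
      # 2. -N imports the pool without mounting any of its datasets.
      script = "zpool import -N -d /dev/disk/by-id rpool";
    };
  };
}
```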

So I’ll share the config I’ve been using for a while now, with comments added to try to explain it. Beware: it’s long and complicated, but that’s because I’m trying to be extremely careful with it. This is required reading for TPM2 unlocking: “Bypassing disk encryption on systems with automatic TPM2 unlock”.

{ lib, config, utils, ... }: {

boot.initrd = {

# This would be a nightmare without systemd initrd

systemd.enable = true;

# Disable NixOS's systemd service that imports the pool

systemd.services.zfs-import-rpool.enable = false;

systemd.services.import-rpool-bare = let

# Compute the systemd units for the devices in the pool

devices = map (p: utils.escapeSystemdPath p + ".device") [

"/dev/disk/by-id/disk1"

"/dev/disk/by-id/disk2"

"/dev/disk/by-id/disk3"

"/dev/disk/by-id/disk4"

"/dev/disk/by-id/disk5"

];

in {

after = [ "modprobe@zfs.service" ] ++ devices;

requires = [ "modprobe@zfs.service" ];

# Devices are added to 'wants' instead of 'requires' so that a

# degraded import may be attempted if one of them times out.

# 'cryptsetup-pre.target' is wanted because it isn't pulled in

# normally and we want this service to finish before

# 'systemd-cryptsetup@.service' instances begin running.

wants = [ "cryptsetup-pre.target" ] ++ devices;

before = [ "cryptsetup-pre.target" ];

unitConfig.DefaultDependencies = false;

serviceConfig = {

Type = "oneshot";

RemainAfterExit = true;

};

path = [ config.boot.zfs.package ];

enableStrictShellChecks = true;

script = let

# Check that the FSes we're about to mount actually come from

# our encryptionroot. If not, they may be fraudulent.

shouldCheckFS = fs: fs.fsType == "zfs" && utils.fsNeededForBoot fs;

checkFS = fs: ''

encroot="$(zfs get -H -o value encryptionroot ${fs.device})"

if [ "$encroot" != rpool/crypt ]; then

echo ${fs.device} has invalid encryptionroot "$encroot" >&2

exit 1

else

echo ${fs.device} has valid encryptionroot "$encroot" >&2

fi

'';

in ''

function cleanup() {

exit_code=$?

if [ "$exit_code" != 0 ]; then

zpool export rpool

fi

}

trap cleanup EXIT

zpool import -N -d /dev/disk/by-id rpool

# Check that the file systems we will mount have the right encryptionroot.

${lib.concatStringsSep "\n" (lib.map checkFS (lib.filter shouldCheckFS config.system.build.fileSystems))}

'';

};

luks.devices.credstore = {

device = "/dev/zvol/rpool/credstore";

# 'tpm2-device=auto' usually isn't necessary, but for reasons

# that bewilder me, adding 'tpm2-measure-pcr=yes' makes it

# required. And 'tpm2-measure-pcr=yes' is necessary to make sure

# the TPM2 enters a state where the LUKS volume can no longer be

# decrypted. That way if we accidentally boot an untrustworthy

# OS somehow, they can't decrypt the LUKS volume.

crypttabExtraOpts = [ "tpm2-measure-pcr=yes" "tpm2-device=auto" ];

};

# Adding an fstab is the easiest way to add file systems whose

# purpose is solely in the initrd and aren't a part of '/sysroot'.

# The 'x-systemd.after=' might seem unnecessary, since the mount

# unit will already be ordered after the mapped device, but it

# helps when stopping the mount unit and cryptsetup service to

# make sure the LUKS device can close, thanks to how systemd

# orders the way units are stopped.

supportedFilesystems.ext4 = true;

systemd.contents."/etc/fstab".text = ''

/dev/mapper/credstore /etc/credstore ext4 defaults,x-systemd.after=systemd-cryptsetup@credstore.service 0 2

'';

# Add some conflicts to ensure the credstore closes before leaving initrd.

systemd.targets.initrd-switch-root = {

conflicts = [ "etc-credstore.mount" "systemd-cryptsetup@credstore.service" ];

after = [ "etc-credstore.mount" "systemd-cryptsetup@credstore.service" ];

};

# After the pool is imported and the credstore is mounted, finally

# load the key. This uses systemd credentials, which is why the

# credstore is mounted at '/etc/credstore'. systemd will look

# there for a credential file called 'zfs-sysroot.mount' and

# provide it in the 'CREDENTIALS_DIRECTORY' that is private to

# this service. If we really wanted, we could make the credstore a

# 'WantsMountsFor' instead and allow providing the key through any

# of the numerous other systemd credential provision mechanisms.

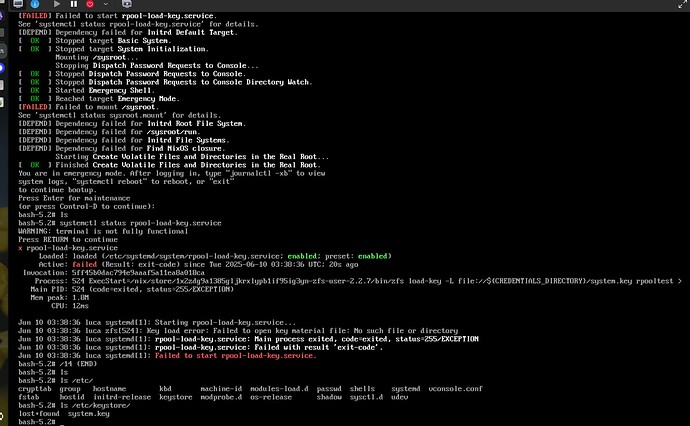

systemd.services.rpool-load-key = {

requiredBy = [ "initrd.target" ];

before = [ "sysroot.mount" "initrd.target" ];

requires = [ "import-rpool-bare.service" ];

after = [ "import-rpool-bare.service" ];

unitConfig.RequiresMountsFor = "/etc/credstore";

unitConfig.DefaultDependencies = false;

serviceConfig = {

Type = "oneshot";

ImportCredential = "zfs-sysroot.mount";

RemainAfterExit = true;

ExecStart = "${config.boot.zfs.package}/bin/zfs load-key -L file://\"\${CREDENTIALS_DIRECTORY}\"/zfs-sysroot.mount rpool/crypt";

};

};

};

# All my datasets use 'mountpoint=$path', but you have to be careful

# with this. You don't want any such datasets to be mounted via

# 'fileSystems', because it will cause issues when

# 'zfs-mount.service' also tries to do so. But that's only true in

# stage 2. For the '/sysroot' file systems that have to be mounted

# in stage 1, we do need to explicitly add them, and we need to add

# the 'zfsutil' option. For my pool, that's the '/', '/nix', and

# '/var' datasets.

#

# All of that is incorrect if you just use 'mountpoint=legacy'

fileSystems = lib.genAttrs [ "/" "/nix" "/var" ] (fs: {

device = "rpool/crypt/system${lib.optionalString (fs != "/") fs}";

fsType = "zfs";

options = [ "zfsutil" ];

}) // {

"/boot" = {

device = "UUID=30F6-D276";

fsType = "vfat";

options = [ "umask=0077" ];

};

};

}

Although some of its length comes from the caution around TPM2 unlocking, I think it’s all broadly appropriate for regular passphrase-based unlocking anyway, and it does seamlessly fall back to working that way when the TPM2 isn’t working. So this works very well even if you don’t want to do the TPM2 stuff.