Hi!

I wrote https://github.com/rapenne-s/bento/ because I was dissatisfied with the various NixOS deployment tools around. They are way too strict: they either require flakes or aren't compatible with them.

Bento aims at managing a huge number of NixOS systems in the wild, so it was designed with robustness in mind. It can bypass firewalls. Configurations can be built on the central management server and served to the clients if they use it as a substituter. It is secure, as each host can only access its own configuration file. None of this sacrifices configuration management for the system administrators, who keep everything in one repository.
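To illustrate the "each host can only access its own configuration" idea, here is a minimal, hypothetical sketch of a path-confinement check on a central server. The `/srv/bento/<hostname>/` layout and the `is_allowed` function are assumptions made for this example only. This is not how Bento implements its isolation.

```python
import posixpath

# Hypothetical layout: one directory per host under this root.
ROOT = "/srv/bento"

def is_allowed(host: str, requested: str, root: str = ROOT) -> bool:
    """Return True only if `requested` resolves inside the host's own directory."""
    # Reject host names that could escape or alias the root directory.
    if not host or "/" in host or host in (".", ".."):
        return False
    base = posixpath.join(root, host)
    # join() discards `base` if `requested` is absolute; normpath() collapses "..".
    target = posixpath.normpath(posixpath.join(base, requested))
    return target == base or target.startswith(base + "/")
```

With this check, `nas` can read `configuration.nix` from its own directory, but a request for `../t470/configuration.nix` or `/etc/passwd` is refused. The administrator still keeps every host's files in one repository. Only the view served to each client is restricted.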

I created a screencast to show the workflow to add a new host: How to add a new NixOS system to Bento deployment - asciinema.org

You can find the rationale behind Bento on my blog Solene'%.

update 2022-09-09: bento 1.0.0 released! It’s now a single script

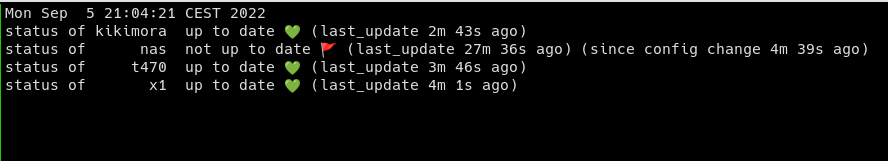

update: you can track the status of remote systems

https://asciinema.org/a/520504

update: you can track the version of the remote systems against what you have locally (thanks to reproducibility!)

| machine | local version | remote version | state | time |
| ------- | ------------- | -------------- | ----- | ---- |
| kikimora | 996vw3r6 | 996vw3r6 | 💚 sync pending 🚩 | (build 5m 53s) (new config 2m 48s) |
| nas | r7ips2c6 | lvbajpc5 | 🛑 rebuild pending 🚩 | (build 5m 49s) (new config 1m 45s) |
| t470 | b2ovrtjy | ih7vxijm | 🛑 rollbacked 🔃 | (build 2m 24s) |
| x1 | fcz1s2yp | fcz1s2yp | 💚 up to date 💚 | (build 2m 37s) |
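Because Nix builds are reproducible, a configuration built locally should produce the same version identifier as the one running remotely when both come from the same inputs. A minimal sketch of that basic comparison follows. The `Machine` class and `compare` function are hypothetical. The sketch only distinguishes equal, different, and unknown versions. Bento's real states (such as sync pending or rollbacked) use extra information that is not modelled here.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Machine:
    name: str
    local_version: str             # identifier of the system built locally from the repo
    remote_version: Optional[str]  # identifier reported by the host, None if not reported yet

def compare(m: Machine) -> str:
    """Classify a machine by comparing the local and remote version identifiers."""
    if m.remote_version is None:
        return "unknown"
    if m.local_version == m.remote_version:
        return "up to date"
    return "differs"

machines = [
    Machine("nas", "r7ips2c6", "lvbajpc5"),
    Machine("x1", "fcz1s2yp", "fcz1s2yp"),
]
for m in machines:
    print(f"{m.name:<10} {m.local_version:<9} {m.remote_version or '-':<9} {compare(m)}")
```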