A systray agent is already on the todo list. It could simply notify users that a reboot is required (if you use nixos-rebuild boot instead of switch) or ask whether they want to update.

Thanks @NobbZ for chiming in!

Indeed!

Hence, let’s think about readiness and liveness as two states that we need to assert.

In any case, since we’re observing the systemd scheduler, we probably want to assess these states as a function of what systemd has to tell us.

Furthermore, we want to make a summary statement about a critical set of services (that we call “OS”) rather than about any specific service.
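As a rough sketch of such a summary statement: systemd already exposes an overall manager state through `systemctl is-system-running` (values such as `running`, `degraded`, `starting`). The helper below maps that state to a verdict. The name `os_verdict` and the policy of treating everything except `running` as not ready are assumptions for illustration, not something bento does.

```shell
#!/bin/sh
# Map a systemd manager state to a readiness verdict.
# Hypothetical helper: the name and the policy are illustrative assumptions.
os_verdict() {
    case "$1" in
        running) echo "ready" ;;
        # degraded, starting, maintenance, stopping, ... all count as not ready here
        *)       echo "not-ready" ;;
    esac
}

# Query the local manager state. systemctl exits non-zero for any state other
# than "running", so the exit status is ignored and only the printed state is used.
current_state() {
    systemctl is-system-running 2>/dev/null || true
}

# Usage on a real host:
#   state=$(current_state)
#   os_verdict "${state:-unknown}"
```

A stricter or looser policy (for example accepting `degraded` on workstations) would only change the `case` branches.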

Although, if that OS hosts services for us, then we also want to assert that state for those services, so the semantics may expand. If I wanted to run services reliably on a set of hosts, I wouldn’t try to do that with systemd, though, but rather with some sort of data-center scheduler. So we probably don’t need to conflate OS liveness and readiness (“the services required for the OS to function as desired”) with workload readiness (“the things I need uptime for”) at this point.

For the scheduling strategy, an n+1 approach is probably ok-ish, but that n+1 might need to be applied per host class. Imagine you require n=3 “blue-class” hosts to satisfy your workload: then you’d have to run n+1 hosts and could only ever cycle one spare host at a time, waiting until it’s ready and live again.

But here comes the additional twist: when we have production workloads scheduled via a suitable distributed scheduler, we can only continue cycling if that external scheduler gives us a liveness and readiness green light for the relevant production services.

So, strictly speaking, for an automated fleet update not to take down your system at uncontrolled points in time, it would additionally need a foreign scheduler interface that listens for a summary green light from that scheduler before it continues cycling with the next host of a given host class.
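To make the idea concrete, here is a minimal sketch of cycling one host class, one host at a time, and stopping as soon as the green light is missing. `update_host` and `green_light` are hypothetical hooks (the first would trigger the update of a single host, the second would ask the external scheduler); the stand-ins below exist only so the sketch runs on its own.

```shell
#!/bin/sh
# Stand-in hooks so the sketch is runnable; replace them with real calls.
# BLOCKED_HOST is a made-up variable that simulates a missing green light.
update_host() { echo "updating $1"; }
green_light() { [ "$1" != "${BLOCKED_HOST:-}" ]; }

# Cycle the hosts of one host class sequentially.
# Stops at the first host whose update fails or that gets no green light,
# so at most one host of the class is out of service at any time.
cycle_class() {
    for host in "$@"; do
        if ! update_host "$host"; then
            echo "update failed on $host, stopping"
            return 1
        fi
        if ! green_light "$host"; then
            echo "no green light after $host, stopping"
            return 1
        fi
        echo "cycled $host"
    done
}

# Usage: cycle_class blue-1 blue-2 blue-3 blue-4
```

Note that this keeps all state in the process running the loop; it does not address the distributed-state problem raised next.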

Not trivial. Especially not in a stateless manner (“choreography”). Such state would have to span the fleet, so we have a distributed state. Enter etcd / consul?

This is really a bit of a hard nut to crack, especially since the entire NixOS ecosystem tacitly treats downtime as acceptable (“downtime is cool”) in its implicit deployment and operating model. It’s a desktop OS, not a scheduler OS, I get it. But at the same time “Nix for Work” wants to be a thing. This is the big quest of our times for this community, imho.

I understand what you mean, it totally makes sense for servers.

However, I mostly target workstations. They just work without depending on other systems, so I feel it’s less of an issue if they don’t update at the same time as the others. They are all independent.

I don’t know, but maybe it makes sense to start employing these clearly distinct semantics:

- NixOS for Workstations

- NixOS for Server

Maybe even as a badge. I feel that the general conversation throughout the Nix Ecosystem (not this particular one), lacks clarity and category.

This would be a case for the Doc Team to evaluate / consider in the ongoing Nomenclature effort, though.

What’s the difference between NixOS for workstation and NixOS for server? It’s just NixOS in the end.

Except that if your servers are interconnected, lazy updates are not suitable.

Ha, glad you asked! I just felt the need to embed this in a bigger context w.r.t. ecosystem semantics: NixOS for Workstations vs NixOS for Servers

Since I’m looking at Nix 90% through the “for Business” lens, I’ve felt in the past that a mismatch between the idealized and the purported usage scenarios was tacitly happening, so I thought this may merit a different framing.

This was just a first take, but I hope that framing goes somewhat in the right direction and leads us to a marginal ecosystem improvement, so the creator will.

This makes me realize that this must be why I named bento “a deployment framework” in this thread title: it’s something you can build upon.

I’d not expect any business to use it as is, but rather as a foundation to build something matching their requirements. They could throw the code away entirely and just keep the idea, if that is enough for them.

Currently bento relies on sending configuration files through sftp and running nixos-rebuild with or without flakes. This involves a lot of conditionals, and I’d like to make things simpler.

In the current state, it’s also not possible to use a single flake file to manage all hosts. This is going to change.

I found a way to transfer a NixOS configuration as a single file. It will be transmitted over sftp, so I’ll be able to tell whether a client is running the same derivation that is currently on the sftp server, which solves a lot of problems. I’ll be able to get rid of nixos-rebuild too, and the client won’t know whether it’s using flakes or not.

Create a derivation file for the system, using flakes (I still need to figure out how to do this without flakes):

    DRV=$(nix path-info --json --derivation .#nixosConfigurations.bento-machine.config.system.build.toplevel | jq '.[].path' | tr -d '"')

Make the result of $DRV available to the remote machine, then build and activate it:

    nix-build $DRV -A system    # or: nix build $DRV
    sudo result/bin/switch-to-configuration switch    # or: boot

Edit: getting the derivation path without flakes:

    nix-instantiate '<nixpkgs/nixos>' -A config.system.build.toplevel -I nixos-config=./configuration.nix
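One way to check whether a client already runs a given build is to compare where two symlinks resolve, for example the `result` link of a build and `/run/current-system`, which NixOS points at the active system. The helper below is a minimal sketch under that assumption; `compare_system` is a made-up name and not part of bento.

```shell
#!/bin/sh
# Print "same" when both arguments resolve to the same path, "different" otherwise.
# Hypothetical helper: on a real host the arguments would typically be the
# "result" symlink of a build and /run/current-system.
compare_system() {
    expected=$(readlink -f "$1")
    current=$(readlink -f "$2")
    if [ "$expected" = "$current" ]; then
        echo "same"
    else
        echo "different"
    fi
}

# Usage on a NixOS host:
#   compare_system ./result /run/current-system
```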

Now I just need to ensure the result only contains what’s required for this host.

I may have been a bit too enthusiastic, because it doesn’t seem to do what I thought.

That’s potentially a lot of headaches avoided, thank you very much!

I’ve been hitting issues with nixos-rebuild. It’s interesting because the command doesn’t correctly report that it’s failing.

Bento is now reporting issues like not enough disk space, but ultimately I need it to report the current version of the system to compare with what we have locally.

Is this asking for help?

Anyways, just in case it’s (tangentially) useful: https://github.com/input-output-hk/bitte/blob/f452ea2c392a4a301c1feb20955c7d0a4ad38130/modules/terraform.nix#L191-L194

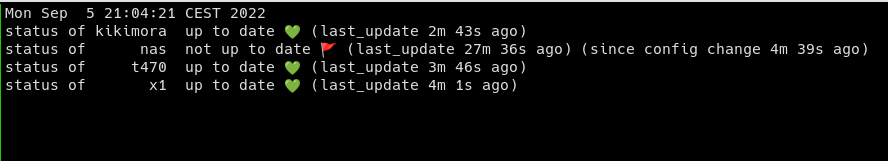

I added the feature to compare the expected NixOS version and the last reported NixOS version

    machine   local version  remote version  state                  time
    -------   ---------      -----------     -------------          ----
    kikimora  996vw3r6       996vw3r6        💚 sync pending 🚩     (build 5m 53s) (new config 2m 48s)
    nas       r7ips2c6       lvbajpc5        🛑 rebuild pending 🚩  (build 5m 49s) (new config 1m 45s)
    t470      ih7vxijm       ih7vxijm        💚 up to date 💚       (build 2m 24s)
    x1        fcz1s2yp       fcz1s2yp        💚 up to date 💚       (build 2m 37s)

The state “sync pending” means we updated the files used by Bento (mostly 2 shell scripts to download and run nixos-rebuild). “Rebuild pending” is shown when the hash differs, which implies a rebuild will be done on the remote NixOS.
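The decision behind that column can be sketched as a small function. This is not Bento’s actual code: the function name, the third argument and the precedence of the checks (a differing hash wins over a pending sync, as in the nas row) are assumptions drawn from the table above.

```shell
#!/bin/sh
# Derive the fleet state for one machine.
#   $1: version hash of the configuration built locally
#   $2: version hash last reported by the remote host
#   $3: "yes" if Bento's own files changed since the host last fetched them
# Hypothetical helper; argument layout and precedence are illustrative assumptions.
fleet_state() {
    if [ "$1" != "$2" ]; then
        echo "rebuild pending"
    elif [ "$3" = "yes" ]; then
        echo "sync pending"
    else
        echo "up to date"
    fi
}

# Usage with the values from the table:
#   fleet_state r7ips2c6 lvbajpc5 yes
```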

It’s not perfect, but I finally found out how to use systemd sockets to run something. There are two methods: one uses a socket and a .service with the same name, and that service should listen on the network. The other uses a socket with Accept=yes and a template service whose name ends with @. I needed the latter.

    # Socket unit: with Accept=yes, systemd spawns one listen-update@ instance per connection.
    systemd.sockets.listen-update = {
      enable = true;
      wantedBy = ["sockets.target"];
      requires = ["network.target"];
      listenStreams = ["51337"];
      socketConfig.Accept = "yes";
    };

    # Template service: starts the upgrade, then streams its journal back over the socket.
    systemd.services."listen-update@" = {
      path = with pkgs; [systemd];
      enable = true;
      serviceConfig.StandardInput = "socket";
      serviceConfig.ExecStart = "${pkgs.systemd.out}/bin/systemctl start bento-upgrade.service";
      serviceConfig.ExecStartPost = "${pkgs.systemd.out}/bin/journalctl -f -n 10 --no-pager -u bento-upgrade.service";
    };

If you open http://localhost:51337 in a web browser, it starts the update process and shows the journal log of the update service.

Bento now supports rollback! The fleet display shows the rollback status, so you can easily be aware that something went wrong.

It’s also getting closer to working as a self-contained script that doesn’t need to be stored in the top level of the repo. It’s still shell script only (not even bash), about 400 lines. I may rewrite it in something else at some point.

Also, I’d like it to be able to use a single flake with many hosts in it, instead of putting a flake in each host directory. Ideally, it should support both.

A while ago, I started divnix/hive (“The secretly open NixOS-Society”), which deals with a certain folder layout for organizing users and their hosts.

It would be nice if bento would have only a weak opinion on the flake layout.

The flake layout is prime screen real estate, and in order to limit boilerplate bloat, I appreciate it when a vertical framework considers horizontal integration points (i.e. integration points that aren’t anchored to a particular vertical schema).

I’d then like to try an integration upon reviving that project (for workstations).

The next step is to handle flakes with multiple machines.

The current layout is to have the configuration file of each host in its own directory. In the future, for directories with a flake.nix file, bento will look for machines with a configuration file and iterate over the hosts list, instead of using the directory name as the machine name.

This doesn’t break setups with non-flakes (it’s still experimental!), we can still use separate directories with their own flakes / non flakes, and we can have a single directory with a flake and many hosts, and handle that.
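A minimal sketch of that lookup, not bento’s actual implementation: for a directory containing a flake.nix, ask Nix for the attribute names under `nixosConfigurations`; otherwise fall back to the directory name. The function name `list_hosts` is made up, the flake branch assumes the `nix-command` and `flakes` experimental features are enabled and `jq` is installed, and the directory should be given as an absolute path or start with `./` so Nix treats it as a path.

```shell
#!/bin/sh
# List the machines defined by one configuration directory.
# Hypothetical helper illustrating the two layouts described above.
list_hosts() {
    dir=$1
    if [ -f "$dir/flake.nix" ]; then
        # Flake layout: one directory, possibly many hosts.
        nix eval --json "$dir#nixosConfigurations" --apply builtins.attrNames \
            | jq -r '.[]'
    else
        # Current layout: the directory name is the machine name.
        basename "$dir"
    fi
}

# Usage:
#   for host in $(list_hosts ./hosts/t470); do echo "$host"; done
```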

I need to hack a bit now.