Last week there was a period of 14 hours where the mechanism of a serious security vulnerability in Nix 2.24 was publicly known but no patch was available. The following reconstructs what led up to that, and lists the conclusions Nix maintainers (@edolstra @tomberek @Ericson2314 @roberth @fricklerhandwerk) draw from the incident.

Summary of events

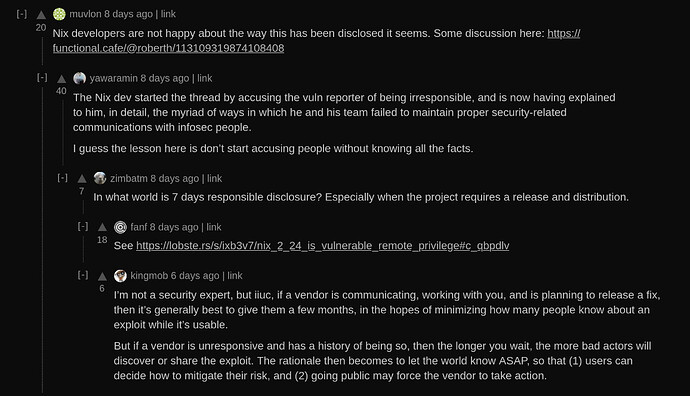

Nix maintainers were first informed of the vulnerability on Friday 2024-08-30 on Matrix by @puckipedia, with an advance warning on GitHub by @jade. @tomberek got in touch with @puckipedia the same day, started working on the issue over the weekend, and produced an analysis and a patch. @edolstra started adding tests on Wednesday 2024-09-04.

Due to an omission, @puckipedia did not get access to the GitHub advisory until Saturday 2024-09-07. In the Matrix exchange that revealed the problem, @fricklerhandwerk also communicated that the fix release was planned for the coming Monday.

On Monday 2024-09-09 the patch was ready, but the team decided to wait for feedback from @puckipedia since she had alluded to another possible attack without expanding on details.

In the European night to Tuesday 2024-09-10, @puckipedia publicly disclosed the vulnerability. Since no one except @edolstra could cut a release and @edolstra couldn’t be reached, @roberth and @cole-h redirected the installer URL to the previous version as a temporary measure. By European noon the patch was released together with an announcement.

Complete timeline

This timeline was sent for review of factual correctness to @puck, @jade, and @ktemkin.

Friday 2024-08-30

-

03:15 CEST @jade posts a comment on the Nix 2.18 → 2.24 pull request with a warning not to merge yet.

-

10:31 CEST @roberth notifies the team.

-

14:08 CEST @puckipedia posts a message on the Nix development Matrix room describing the vulnerability and setting a deadline to disclose it.

Okay, so. As mentioned on GitHub yesterday, I have found a vulnerability in Nix 2.24 that allows any untrusted user or substituter (even without trusted signing key) to potentially escalate to root on macOS and some very quirky Linux setups. Due to the severity of this bug, and my past experiences trying to disclose vulnerabilities to Nix, I am considering disclosing this vulnerability publicly in one week (seven days). Any Nix team member may message me for the details of the vulnerability.

-

19:42 CEST @Mic92 from the NixOS security team checks with @tomberek if the issue is being addressed and offers help if needed.

-

21:15 CEST @tomberek picks up communication with @puckipedia and leaves a note in the public Matrix room.

@puckipedia shares the exploit with @tomberek. @tomberek suspects the cause to be the recent file system accessor refactoring by @Ericson2314. They discuss the issue and identify three exploit mechanisms. @tomberek promises to work on it over the weekend.

-

23:21 CEST @tomberek shares his first assessment with the team.

Sunday 2024-09-01

-

8:18 CEST @tomberek shares a patch with the team.

-

9:13 CEST @tomberek reports back to @puckipedia that he has found a viable solution approach.

Monday 2024-09-02

-

14:00 CEST Regular Nix maintainer meeting begins. @tomberek is not available this time.

@roberth and @edolstra work on other things due to lack of context.

@fricklerhandwerk writes down a checklist for releasing security patches to ensure a smoother process this time around.

-

20:56 CEST @tomberek creates the GitHub security advisory and tags @puckipedia as reporter. However, he misses that “reporter” (unlike “collaborator”) doesn’t grant permission to read the advisory. Tom messages Puck with the link.

-

21:13 CEST @puckipedia lets Tom know that she cannot access the GitHub link. She also brings up a possible fourth exploit variant, wondering if the fix addresses it.

-

21:27 CEST @tomberek acknowledges the possible fourth attack, but does not react to Puck saying she cannot access the advisory.

Wednesday 2024-09-04

-

21:00 CEST The Nix maintainer team meets.

@tomberek and @edolstra discuss the solution approach.

@edolstra pushes the first patches during the meeting, with more following the next day.

Saturday 2024-09-07

-

20:53 CEST @emily asks for updates on the vulnerability in the public Nix development Matrix room.

@fricklerhandwerk replies that the release of the fix is planned for the coming Monday, and that @edolstra is working on it.

@puckipedia (again, this time replying in the public channel) points out that she cannot access the advisory. @fricklerhandwerk adds her to the list of collaborators, granting her access.

Monday 2024-09-09

-

14:00 CEST The Nix maintainer team meets to prepare the release of the fix.

-

14:15 CEST @tomberek reaches out to @puckipedia asking if the fix addresses the issues, and in particular the possible fourth exploit variant, but gets no reply.

During the meeting, the team decides to postpone publishing the fix to wait for clarification from Puck that the fixes are adequate.

-

21:21 CEST @puckipedia publicly discloses the vulnerability.

Tuesday 2024-09-10

-

0:40 CEST @roberth notifies the team that the vulnerability has been exposed.

@roberth and @fricklerhandwerk meet in a call.

Both try to reach out to @edolstra, since he’s the only one with credentials to make a release.

As an emergency measure, they open pull requests to change official installer URLs to a previous version.

-

1:41 CEST @fricklerhandwerk reaches @grahamc, who brings @cole-h, who has access to infrastructure, into the call.

The three decide to downgrade the installer, since the earliest possible release would land in the European morning even if @edolstra appeared immediately.

@fricklerhandwerk tries to reach @tomberek to get details on the status of the fix.

-

2:00 CEST @cole-h has redirected the installer URL to 2.23.3.

@Ericson2314 joins the call.

-

2:18 CEST @roberth opens the pull request to remove Nix 2.24 from Nixpkgs.

The group tries to reconstruct the sequence of events.

-

3:07 CEST @cole-h skips ofborg CI and merges the pull request.

-

10:00 CEST @fricklerhandwerk starts preparing a public statement.

-

12:22 CEST @raboof posts an announcement on Discourse. @fricklerhandwerk follows up with details.

-

13:00 CEST @edolstra backports the fix to 2.23 and kicks off the build.

-

14:40 CEST @roberth and @fricklerhandwerk discuss with @tomberek if the patch is a sufficient mitigation and decide to move forward rather than recommending to downgrade.

The point release lands. @edolstra updates the Discourse announcement, recommending to reinstall standalone Nix to get 2.24.6, and to downgrade to 2.23.3 if using Nixpkgs.

Wednesday 2024-09-11

- @Mic92 brings Nix 2.24 back to Nixpkgs with the fix applied. @fricklerhandwerk updates the Discourse announcement to recommend upgrading to 2.24.6 inside Nixpkgs.

Assessment

There seems to have been no attempt to reach out to Nix maintainers or the NixOS security team before the public posts on GitHub and Matrix.

Since @tomberek and @edolstra were actively working on the issue, there was no sense of urgency among other team members. The conclusion of the Wednesday meeting that the patch was almost done contributed to that. Before that meeting, a comprehensive discussion of risks among team members did not happen.

We did not manage to aggregate all information consistently in one communication channel, which might otherwise have prevented misunderstandings.

We probably should have communicated more clearly to @puckipedia that we were waiting for a reply before releasing the patch. Moving the deadline for disclosure, given the patch was actively being worked on, was not explicitly negotiated.

Without the uncoordinated disclosure, our mitigation and release process would have worked as intended.

Mitigations

As measures to prevent such incidents in the future, Nix maintainers will:

-

Update the security protocol and exercise the updated version

While we had an informal workflow for releasing security fixes, this was only written down recently. We did not have a checklist for responding to reports though, and little shared experience to build upon.

Nix vulnerabilities must result in a single line of communication between the reporter and the entire Nix maintainer team. That way, communication such as questions on the validity of the patch and notices of public disclosure will not be missed.

-

Fully automate the release process

This was not done previously because the setup worked well enough.

Automating this will cut down the time to delivery and allow any team member to complete the release when needed.