edit: sorry, misunderstanding.

as i understand jeff-hykin, update waves flow from provider to consumer (“top-down pinning with controlled propagation”).

in my view, expensive consumers should pin their dependencies (“bottom-up pinning”).

(i assume that only a few packages are expensive consumers, i.e. packages with long build times)
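to make the difference concrete, here is a toy model in python. all package names, the graph and the “pinned” flag are hypothetical, and this is not how nixpkgs actually computes rebuilds: an update to a provider rebuilds every consumer that follows it, and a consumer that pins its dependency stops the wave.

```python
from collections import deque

# consumer -> list of (provider, pinned?) edges; a hypothetical package set
DEPENDS_ON = {
    "libfoo": [],
    "midpkg": [("libfoo", False)],
    "cheap-app": [("midpkg", False)],
    # an expensive consumer that pins its copy of midpkg ("bottom-up pinning")
    "expensive-app": [("midpkg", True)],
}


def rebuild_set(changed: str) -> set[str]:
    """Return every package that must be rebuilt when `changed` is updated.

    A pinned edge keeps the consumer on its old provider, so the wave
    stops there instead of propagating further.
    """
    # invert the graph: provider -> consumers that follow it (unpinned edges only)
    followers: dict[str, list[str]] = {name: [] for name in DEPENDS_ON}
    for consumer, deps in DEPENDS_ON.items():
        for provider, pinned in deps:
            if not pinned:
                followers[provider].append(consumer)

    # breadth-first walk from the changed package through its followers
    seen = {changed}
    queue = deque([changed])
    while queue:
        pkg = queue.popleft()
        for consumer in followers[pkg]:
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    return seen


print(sorted(rebuild_set("libfoo")))  # ['cheap-app', 'libfoo', 'midpkg']
```

here, updating `libfoo` rebuilds `midpkg` and `cheap-app`, while `expensive-app` stays on its pinned `midpkg` and is not rebuilt.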

“bottom-up pinning”

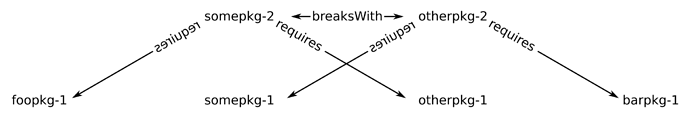

“multiversion” pattern

somepkg-2 and otherpkg-2 are NOT compatible with each other

but we want to have the newest version of both

solution: make more use of the “multiversion” pattern

= maintain multiple versions of one package in one version of nixpkgs

= give more packages the “privilege” to pin their dependencies, to fix breakages (or to prevent expensive rebuilds)

these newest versions “lead” the waves (aka dependency trees)
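a rough sketch of the multiversion pattern as a data structure, assuming a package set keyed by (name, version) with hypothetical package names: `otherpkg-2` pins `somepkg-1`, while the root `app` uses the newest `somepkg-2`.

```python
# hypothetical package set that keeps several versions of one package
# side by side (the "multiversion" pattern); keys are (name, version)
PACKAGES = {
    ("somepkg", 1): [],
    ("somepkg", 2): [],
    # otherpkg-2 is not compatible with somepkg-2, so it pins somepkg-1
    ("otherpkg", 2): [("somepkg", 1)],
    # the root uses the newest versions of both
    ("app", 1): [("somepkg", 2), ("otherpkg", 2)],
}


def closure(root):
    """Return all (name, version) pairs reachable from `root`."""
    result, stack = set(), [root]
    while stack:
        pkg = stack.pop()
        if pkg not in result:
            result.add(pkg)
            stack.extend(PACKAGES[pkg])
    return result


def versions_by_name(pkgs):
    """Group a closure by package name to spot duplicate versions."""
    grouped = {}
    for name, version in pkgs:
        grouped.setdefault(name, set()).add(version)
    return grouped


app_closure = closure(("app", 1))
print(versions_by_name(app_closure)["somepkg"])  # {1, 2}
```

for nix itself this closure is unproblematic, since each version lives in its own store path. the trouble starts when both versions of `somepkg` have to end up in one python env.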

challenge:

python envs allow only one version per package

→ only one scope/env
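a small demo of why, using only the stdlib (`somepkg` and the two site directories are made up): the interpreter binds one module per top-level name, taken from the first matching `sys.path` entry and then cached in `sys.modules`.

```python
import importlib
import pathlib
import sys
import tempfile

# two fake installs of the same package, in separate "site" directories
root = pathlib.Path(tempfile.mkdtemp())
for version in ("1", "2"):
    pkg_dir = root / f"site-v{version}" / "somepkg"
    pkg_dir.mkdir(parents=True)
    (pkg_dir / "__init__.py").write_text(f'__version__ = "{version}"\n')

# put both on the path, as if two envs were merged; site-v2 comes first
sys.path[:0] = [str(root / "site-v2"), str(root / "site-v1")]
importlib.invalidate_caches()  # make sure the import system sees the new files

import somepkg

print(somepkg.__version__)  # 2

# a plain second import does not search sys.path again;
# it returns the module already cached in sys.modules
assert importlib.import_module("somepkg") is somepkg
assert sys.modules["somepkg"] is somepkg
```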

compare:

node allows nested dep-trees by default

→ different nodes can use different versions of the same package

→ every node has its own scope/env
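a simplified model of the node lookup, written in python (the tree is hypothetical, and real node resolution handles more cases, e.g. file extensions and `package.json` fields): a package first looks in its own `node_modules`, then in each ancestor's, so a nested copy shadows the one in the root.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    version: int
    # repr/compare disabled to avoid cycles between parent and children
    parent: Optional[Node] = field(default=None, repr=False, compare=False)
    node_modules: dict[str, Node] = field(default_factory=dict)

    def install(self, name: str, version: int) -> Node:
        """Place a package in this node's own node_modules."""
        child = Node(name, version, parent=self)
        self.node_modules[name] = child
        return child

    def resolve(self, name: str) -> Optional[Node]:
        """Walk up from this package to the root; the nearest copy wins."""
        node: Optional[Node] = self
        while node is not None:
            if name in node.node_modules:
                return node.node_modules[name]
            node = node.parent
        return None


app = Node("app", 1)
app.install("somepkg", 2)               # the root scope uses somepkg-2
otherpkg = app.install("otherpkg", 2)
otherpkg.install("somepkg", 1)          # otherpkg-2 gets its own nested somepkg-1

print(app.resolve("somepkg").version)       # 2
print(otherpkg.resolve("somepkg").version)  # 1
```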

again: Allowing Multiple Versions of Python Package in PYTHONPATH/nixpkgs

the goal is to pin transitive dependencies to old versions

while still allowing the root scope to import the new versions
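in plain python, one way to approximate this is to load the old copy under a different module name with `importlib.util.spec_from_file_location`. this is only a sketch (the `load_as` helper, the alias `_pinned_somepkg_v1` and the directory layout are hypothetical), not something pip or nixpkgs do.

```python
import importlib.util
import pathlib
import sys
import tempfile
from types import ModuleType


def load_as(alias: str, init_file: pathlib.Path) -> ModuleType:
    """Hypothetical helper: import the package at `init_file` under `alias`."""
    spec = importlib.util.spec_from_file_location(
        alias, str(init_file), submodule_search_locations=[str(init_file.parent)]
    )
    module = importlib.util.module_from_spec(spec)
    sys.modules[alias] = module  # register before executing, like a normal import
    spec.loader.exec_module(module)
    return module


# two fake versions of the same package on disk
root = pathlib.Path(tempfile.mkdtemp())
for version in ("1", "2"):
    pkg = root / f"v{version}" / "somepkg"
    pkg.mkdir(parents=True)
    (pkg / "__init__.py").write_text(f'__version__ = "{version}"\n')

# the root scope gets the new version under the real name,
# the pinned transitive dependency gets the old one under an alias
somepkg_new = load_as("somepkg", root / "v2" / "somepkg" / "__init__.py")
somepkg_old = load_as("_pinned_somepkg_v1", root / "v1" / "somepkg" / "__init__.py")

print(somepkg_new.__version__, somepkg_old.__version__)  # 2 1
```

this breaks as soon as the old copy imports itself by its original name (e.g. `from somepkg import sub`), because that resolves to the new `somepkg` in `sys.modules`; renaming a package usually also means rewriting its internal imports.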

edit: on the python side, this “one package, one version” approach is considered a feature (not a bug), because it allows passing data between libraries. when different versions of one package are used, data must be converted between formats at runtime, which is slow.
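a minimal illustration of the data-passing argument (the module names and the `Frame` class are made up, and this only shows the type-identity problem, not ABI issues): two copies of the same class are distinct types, so objects from one copy must be converted at runtime before code written against the other copy accepts them.

```python
import types

# stand-in for the source of one package, "installed" twice
SOURCE = '''
class Frame:
    def __init__(self, rows):
        self.rows = list(rows)
'''


def load_copy(module_name: str) -> types.ModuleType:
    """Execute SOURCE into a fresh module object (one installed version)."""
    module = types.ModuleType(module_name)
    exec(SOURCE, module.__dict__)
    return module


lib_v1 = load_copy("somepkg_v1")  # the copy a pinned dependency uses
lib_v2 = load_copy("somepkg_v2")  # the copy the root scope imports

frame = lib_v1.Frame([1, 2, 3])
print(isinstance(frame, lib_v2.Frame))  # False: same code, different type


def convert(obj, target_module):
    """Explicit runtime conversion: copy the data into the other copy's type."""
    return target_module.Frame(obj.rows)


converted = convert(frame, lib_v2)
print(isinstance(converted, lib_v2.Frame))  # True, at the cost of a copy
```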

I’d say it’s much more common than not that when two packages depend on one or more of the same packages deeper in the stack (Numpy, SciPy, Pandas, Matplotlib, Cython, Sympy, xarray, Dask, etc.), there’s some form of direct or indirect data interchange, or other cross-dependency, between at least one pair of them, if not most. In particular, numpy arrays, pandas dataframes and xarray objects are routinely exchanged when these libraries are used, and code compiled with different Cython versions (if they actually merited a hard dependency non-overlap) may well be ABI-incompatible and cause a hard crash at the C level.

As such, it seems likely that this will break as many packages as it fixes, and in ways that can be far harder to debug and recover from than a simple dependency conflict at install time. Relative to other strategies, this does not really seem to be a viable solution.