Hello! I’m a bit late to the party…

I, too, have been trying to participate in maintaining CUDA packages lately. In fact, they are almost exactly the part of nixpkgs that I’d like to discuss here, and the part that (this time) brought to the surface many of the pain points that @samuela mentions. At the risk of going off-topic, I will try to fill in some details.

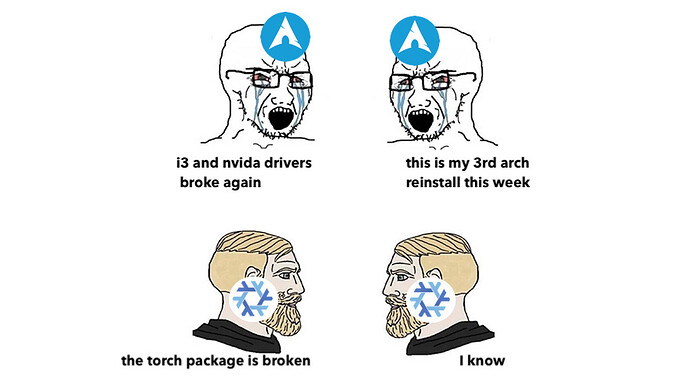

The context is that nixpkgs packages a lot of complex “scientific computing” software that is, for practical purposes, most commonly deployed with unfree dependencies like CUDA (think jax, pytorch or… blender). In fact, with a bit of work Nix appears to be a pretty good fit for deploying all of that software. The same packages are also available through other means of distribution, like Python’s PyPI, conda, or mainstream distributions’ repositories. Most of the time they will “just work”: you’ll be getting tested, pre-built, up-to-date packages. Except they break. For all sorts of reasons. One python package overwrites files from another python package and all of a sudden faiss cannot see the GPU anymore. Fundamentally, with Nix and nixpkgs one could implement everything that these other building-packaging-distribution systems do, but have more control and predictability. Have fewer breaks.

That is the theory. The practice is that nixpkgs, for known reasons, hasn’t got continuous integration running for CUDA-enabled software. The implication is that even configuration and build failures go unnoticed by maintainers, let alone integration failures, or failures in tests involving hardware acceleration. This also means eventual rot in any chunks of nix code that touch CUDA. It probably wouldn’t be too far from the truth to say that the occasionally partially-working state of these packages (their CUDA-enabled versions, that is) has been largely maintained through unsystematic pull requests from interested individuals, looked after by maintainers of adjacent parts of nixpkgs.

One attempt to address this situation or, rather, an ongoing exploration of possible ways to address it was the introduction of @NixOS/cuda-maintainers, called for by @samuela. It is my impression so far that this has been an improvement:

- this introduced (somewhat) explicit responsibility for previously un-owned parts of nixpkgs;

- we started running builds (cf. this) and caching results (cf. cuda-maintainers.cachix.org and many thanks to @domenkozar!) for the unfree sci-comp packages on a regular basis, which means that related regressions are not invisible anymore;

- in parallel, @samuela is running a collection of crafted integration tests, in a CI that tries, on schedule, to notify authors about merged commits that introduce regressions, cf. nixpkgs-upkeep;

- three previous items made it safe enough to start slowly pruning the outdated hacks and patches in these expressions, and even to perform substantial changes to how CUDA code is organized overall (notably, the introduction of the cudaPackages with support for overrideScope'; many thanks to @FRidh!);

- working in-tree also has the additional benefit that the many small fixes, extensions, and adjustments that people do in overlays can start migrating upstream, and might even reflect in how downstream packagers handle cuda dependencies when they first introduce them
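
For readers who haven’t used it, here is a minimal sketch of what the overrideScope' support on cudaPackages makes possible in an overlay. It is an illustration under assumptions, not code from nixpkgs: the patch file name is a placeholder, nccl is just an example member of the set, and newer nixpkgs revisions may spell the function overrideScope.

```nix
# overlay.nix: a sketch, not taken from nixpkgs.
final: prev: {
  cudaPackages = prev.cudaPackages.overrideScope' (cudaFinal: cudaPrev: {
    # Hypothetical local fix applied to one member of the set;
    # ./nccl-local-fix.patch is a placeholder file name.
    nccl = cudaPrev.nccl.overrideAttrs (old: {
      patches = (old.patches or [ ]) ++ [ ./nccl-local-fix.patch ];
    });
  });
}
```

Because the override goes through the scope, other members of cudaPackages that take nccl as an argument should pick up the patched version as well, which is the kind of small downstream fix that can then migrate upstream.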

Essentially, this is precisely the kind of initiative that @7c6f434c suggests: we target a well-scoped part of nixpkgs, and try to shift the status quo from “this is consistently broken” to “mostly works and has users”.

Obviously, there are many limitations. First of all, we don’t have any dedicated hardware for running those builds and tests, which means our current workflow simply isn’t sustainable: it exists only as long as we are personally involved. Lack of dedicated hardware also means that it’s infeasible for us to build staging (we’ve tried). In turn, this implies that we can only address regressions after the fact, when they have already reached master, which is one of @samuela’s concerns. It gets worse, however: there’s no feedback between our fragile, constantly-changing, hand-crafted CI and the CI of nixpkgs. Thus, once regressions have reached master, there’s nothing stopping them from flowing further into nixos-unstable and nixpkgs-unstable! Unless the regressions also affect some of the selected free packages, they’ll be automatically merged into the unstable branches. This problem is not hypothetical: for example, many of the regressions caused by the gcc bump took longer to address for cuda-enabled packages than for the free packages.

One conclusion is that our original implicit goal of keeping the unstable branches “mostly green” was simply naive and wrong, and we have no choice but to adopt a different policy. A different policy both for maintaining and for consuming these unfree packages: I’ve tried for a while (months) to stay on the release branch. I had to update to nixpkgs-unstable because the release had too many things broken that we had already fixed in master, but even regardless of that, the release branch has (for our purposes) too low an update frequency, missing packages, missing updates. My understanding is that many people (@samuela included) treat nixpkgs-unstable as a rolling release branch. From the discussion in this thread, this interpretation appears to be not entirely correct, but maybe it’s what we need. One alternative workflow we’ve considered (but haven’t discussed in depth) for cuda-packages specifically is maintaining and advertising our own “rolling release” branch, which we would merge things into only after checking against our own (unfree-aware and focused) CI. Perhaps this is also where merge-trains could be used to save some compute.

The complexity of building staging and even master (it turns out that when you import with config.cudaSupport = true or override blas and lapack, you trigger a whole lot of rebuilds) raises a further question: do we have to build that much?
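
To make the parenthetical concrete, this is roughly what such an import looks like. Treat it as a sketch: it assumes `<nixpkgs>` is on NIX_PATH, the blas/lapack override follows the provider-switching pattern described in the nixpkgs manual with mkl chosen purely as an example, and attribute names such as python3Packages.pytorch vary between nixpkgs revisions.

```nix
# Sketch only: assumes <nixpkgs> is on NIX_PATH; attribute names vary between revisions.
let
  pkgs = import <nixpkgs> {
    config = {
      allowUnfree = true; # CUDA is unfree, so it has to be allowed explicitly
      cudaSupport = true; # used as the default by packages that take a cudaSupport argument
    };
    overlays = [
      # Swapping the BLAS/LAPACK provider; mkl is only an example choice.
      (final: prev: {
        blas = prev.blas.override { blasProvider = final.mkl; };
        lapack = prev.lapack.override { lapackProvider = final.mkl; };
      })
    ];
  };
in
pkgs.python3Packages.pytorch
```

Either setting changes the inputs of a large number of derivations, so almost nothing that depends on them can be substituted from the official binary cache and has to be built locally or fetched from a cache that was populated with the same configuration.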

There might be a lot of fat to cut. One thing we’ve discovered from the inherited code-base, for example, is that the old runfile-based cudatoolkit expression, whose NAR is slightly above 4GiB, has a dependency on fontconfig and alsa-lib, among other surprising things. The new split cudaPackages don’t have this artifact, but the migration is still in progress and we do still depend on the old cudatoolkit. That’s very significant. It means that every time fontconfig, or alsa-lib, or unixODBC, or gtk2, or the-list-goes-on is updated, we have to pump an additional 4GiB into the nix store, we have to rebuild cudnn and magma, we have to rebuild pytorch and tensorflow, we have to rebuild jaxlib, all of which fight in the super heavyweight class!

The issue is way more systematic, however, than just cuda updating too often. These same behemoth packages, pytorch and tensorflow, through a few levels of indirection have such comparatively small and high-frequency dependencies as pillow (an image-loading package for python that’s only used by some utils modules at runtime), and pyyaml, and many more. Many of these are in propagatedBuildInputs, some are checkInputs, but most of them are never used in buildPhase and cannot possibly affect the output. What they do is signal a false positive and cause a very expensive rebuild. On schedule. Is this behaviour inherent and unavoidable for any package set written in Nix? Obviously not.

I suspect one could, if desired, write “a” nixpkgs that would literally be archlinux, and rebuild just as often (which is probably rather infrequently, compared to “the” nixpkgs), just by introducing enough boundaries and indirections. Split phases in most packages. Not that this would be useful per se; it’s interesting as the opposite extreme, the other end of the spectrum from what current nixpkgs is. And maybe we should head somewhere in between: rebuild when build results could have actually changed, test when test results could.
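
The false-positive rebuild, and the effect of adding one such boundary, can be sketched with toy derivations. This is a simplified model under assumptions, not how pytorch is packaged: the names mk, heavyWith, heavyCore and wrapperWith are made up, the “packages” are one-line shell commands, and nothing is actually built because only store paths are compared at evaluation time.

```nix
# rebuild-toy.nix: toy model, nothing is built; only store paths are compared.
let
  mk = name: text: deps: derivation {
    inherit name;
    system = "x86_64-linux";
    builder = "/bin/sh";
    # Every dependency's store path ends up in the build command,
    # so each one becomes an input of the derivation.
    args = [ "-c" "echo ${text} ${toString deps} > $out" ];
  };

  # Two versions of a small, frequently updated dependency.
  pillowOld = mk "pillow" "9.0" [ ];
  pillowNew = mk "pillow" "9.1" [ ];

  # Layout 1: the expensive build lists pillow as an input.
  heavyWith = pillow: mk "heavy" "compile" [ pillow ];

  # Layout 2: the expensive part has no pillow input; a cheap wrapper adds it.
  heavyCore = mk "heavy-core" "compile" [ ];
  wrapperWith = pillow: mk "heavy" "wrap" [ heavyCore pillow ];
in
{
  # A pillow bump changes the output path of the expensive build.
  monolithRebuilds = (heavyWith pillowOld).outPath != (heavyWith pillowNew).outPath;
  # With the boundary, the cheap wrapper changes; heavyCore does not depend on pillow at all.
  wrapperRebuilds = (wrapperWith pillowOld).outPath != (wrapperWith pillowNew).outPath;
}
```

Evaluating this with `nix-instantiate --eval --strict rebuild-toy.nix` should report both attributes as true. The point is what sits behind each of them: in the first layout the path that changes belongs to the expensive compile step, in the second it belongs to a wrapper that is cheap to redo, while heavyCore keeps its path across the pillow bump.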

This brings me to the “nixpkgs is too large” part. I guess it’s pretty large. The actual problem could be, however, that too many things depend on too many things. I’m new to Nix, and just a year ago I had so many more doubts and questions about decisions made in nixpkgs, not least of them the choice of monorepo. Now I have actually begun to respect the current structure: it might be really boosting synchronization between so many independent teams and individual contributors, helping the eventual consistency. When a change happens, the signal propagates to all affected much sooner than it probably ever could with subsystems in separate flakes. I don’t think we need to change this. Keeping the “signal” metaphor, I think we need to prune and hone the centralized nixpkgs that we have so as to reduce the “noise”, like these false positives about maybe-changing outputs. We need better compartmentalization in the sense of how many links there are between packages (that’s common sense), but that does not necessitate splitting nixpkgs into multiple repositories.

I like the idea of automated broken markers (they save compute, and they spread the signal that a change is needed). I also like the idea of integrating our CI into the status checks. Obviously, for that to happen the unfree CI must be stabilized first, and we must find a sustainable (and scalable) source of storage and compute for it. I don’t think it is impossible at all that build failures even for unfree packages might be integrated as merge blockers in the future. In fact, I think it inevitably must happen as Nix and nixpkgs grow, and new users come with the expectations they have from other distributions: these parts of nixpkgs will see demand, and maintaining them “in the blind” is impossible. It’s not happening overnight, of course. As pointed out by others, there are bureaucracy and trust issues, and there are even purely technical difficulties: even if we came up with an agreeable solution, it’s just a lot of work to build a separate CI that wouldn’t contaminate what’s expected to be “free”, and to integrate that with existing automated workflows.