Hi everyone!

Summary

Currently in nixpkgs, every module accessing a shared resource must implement the logic needed to set up that resource itself. This leads to several issues and a subpar end-user experience, the first that springs to mind being tight coupling between modules.

I propose a solution to this issue that is fully backwards compatible and can be introduced into nixpkgs incrementally. These two properties are very important to me and, IMO, critical to the adoption of this RFC.

In this (pre) RFC, I will:

- Provide a motivating example.

- Identify the core issue in the example, generalize it and enumerate all related downstream issues and drawbacks.

- Provide a solution and show how it solves the issues.

- Conclude by defining three contracts and showing their usage.

Motivation

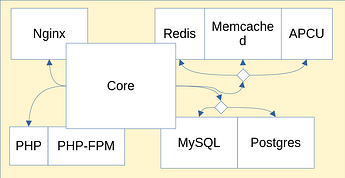

As a motivating example, I’d like to take the Nextcloud service module. This module:

- configures Nextcloud, which is the core of the module;

- sets up Nginx as the reverse proxy;

- sets up the PHP + PHP-FPM stack;

- lets the user choose between multiple databases and sets up the chosen one;

- lets the user choose between multiple caches and sets up the chosen one.

Now, all the code to do this is located in the Nextcloud module linked above. There is no abstraction over Nginx, the databases or the caches. The issues I identified with this are quite common in the software world:

- This leads to a lot of duplicated code. If the Nextcloud module wants to support a new type of database, the maintainer of the Nextcloud module must do the work. And if another module wants to support it too, its maintainers cannot easily reuse the Nextcloud maintainer’s work, apart from copy-pasting and adapting the code.

- This also leads to tight coupling. The code written to integrate Nextcloud with the Nginx reverse proxy is hard to decouple and make generic. Letting the user choose between Nginx and another reverse proxy will require a lot of work.

- There is also a lack of separation of concerns. The maintainers of a service must be experts in all implementations they let the users choose from.

- This is not extensible. If the user of the module wants to use another implementation that is not supported, they are out of luck. The only way, without forking nixpkgs, is to dive into the module’s code and override it with a lot of mkForce, if that is possible at all, which is a suboptimal experience.

- Finally, there is no interoperability. It is currently not possible to integrate the Nextcloud module with an existing database, reverse proxy, or other type of shared resource that already lives on a non-NixOS machine.

Detailed Design

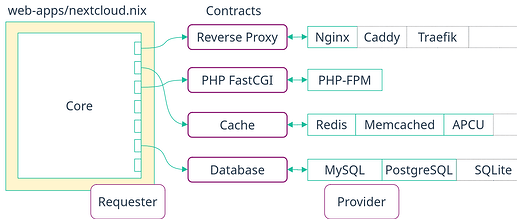

The solution I propose is also well known in the software world: decouple the usage of a feature from its implementation. The goal is to make the nextcloud.nix file mostly about the core of the module and offload the rest.

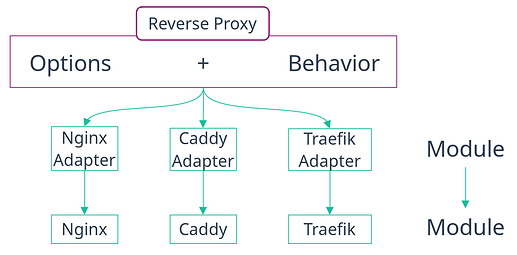

To make this happen, we need a new kind of module, a module orthogonal to existing modules and services and which acts as a layer between a requester module (Nextcloud) and a provider module (Postgres, Nginx, Redis, etc.). I called this layer a contract. In practice, it is an option type coupled with an intended behavior enforced with generic NixOS tests.

The requester module adheres to a contract by providing a new option with the correct type (with helper functions, see implementation below). The contract specifies what options the requester can set - the request.

The provider module adheres to a contract by accepting the options set by the requester and by setting options that define the result, which are also specified by the contract.
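To make the two directions concrete, here is a minimal sketch of a contract written with plain module-system types and no helper functions. The names mkContractType, requestOptions and resultOptions are hypothetical and do not come from the implementation described later. The secret-like fields are only an example.

```nix
# Hypothetical sketch: a contract as an option type with two halves.
# The requester fills in `request`; the provider fills in `result`.
{ lib }:
let
  inherit (lib) mkOption types;

  mkContractType = { requestOptions, resultOptions }:
    types.submodule {
      options = {
        request = mkOption {
          description = "Set by the requester module.";
          type = types.submodule { options = requestOptions; };
        };
        result = mkOption {
          description = "Set by the provider module.";
          type = types.submodule { options = resultOptions; };
        };
      };
    };
in
{
  # Example instantiation for a secret-like contract.
  secretContract = mkContractType {
    requestOptions = {
      owner = mkOption { type = types.str; };
      mode = mkOption { type = types.str; default = "0400"; };
    };
    resultOptions = {
      path = mkOption { type = types.str; };
    };
  };
}
```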

For provider modules, the easiest path to incremental adoption is to add a small layer on top of the existing modules being transitioned. That layer just translates the contract’s options into the options already defined by that module. With time, we may be able to merge that layer into the service module directly.

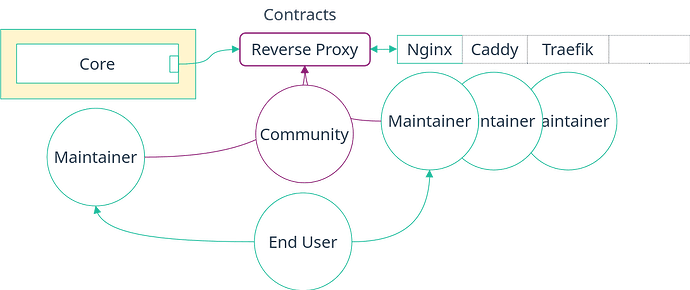

If we come back to the Nextcloud example and zoom in on the Reverse Proxy contract, we can identify the actors responsible for each part.

Introducing this decoupling in the form of a contract allows:

- Reuse of code. Since the implementation of a contract lives outside of modules using it, using the same implementation and code elsewhere without copy-pasting is trivial.

- Loose coupling. Modules that use a contract do not care how it is implemented, as long as the implementation follows the behavior outlined by the contract.

- Full separation of concerns. Now, each party’s concern is separated with a clear boundary. The maintainer of a module using a contract can be different from the maintainers of the implementation, allowing them to be experts in their own respective fields. But more importantly, the contracts themselves can be created and maintained by the community.

- Full extensibility. The end user can choose an implementation themselves, even a custom one not available in nixpkgs, without changing existing code.

- Interoperability and incremental adoption. Contracts can help bridge a NixOS system with any non-NixOS one. For that, one can hardcode a requester or provider module to match how the non-NixOS system is configured. The responsibility of course falls on the user to make sure both systems agree on the configuration. But then, the NixOS system can be deployed without issue and talk to the non-NixOS system.

- Last but not least, testability. Thanks to generic NixOS VM tests, we can ensure that each implementation of a contract, even custom ones written by the user outside of nixpkgs, provides the required options and behaves as the contract requires.

Examples and Implementation

I’d like to let you know first that this idea went through a lot of iterations on my part. There are some complicating factors, some due to how the module system works, some due to how the documentation system works.

In the end, I landed on an implementation using structural typing to distinguish between contracts. I find it quite nice to use for everyone involved: the maintainers of requester and provider modules as well as the end user who needs to do the plumbing. The incidental complexity is hidden behind a few functions.

That being said, I did not spend time trying to make the error messages look good. That’s definitely one area of improvement.

To explain the implementation, I’ll first go through an example from the perspective of each of the users described in the previous paragraph. Afterwards, I’ll go through the code in a different order. Hopefully, having both perspectives will paint a good picture of why the implementation is the way it is.

Files Backup Contract

Requester Side

To back up the files of the Nextcloud service, users currently must know that those files live in the directory given by services.nextcloud.datadir, and they must configure the backup job to run as the correct user, "nextcloud" (which is hardcoded), to be able to access those files.
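For comparison, this is roughly what the manual wiring looks like today with the upstream services.restic.backups module. It is a sketch: the repository and password file paths are placeholders. The point is that the end user has to know both the data directory and the hardcoded user.

```nix
{ config, ... }:
{
  # Manual wiring without contracts: the end user must know Nextcloud internals.
  services.restic.backups.nextcloud = {
    user = "nextcloud";                             # hardcoded by the Nextcloud module
    paths = [ config.services.nextcloud.datadir ];  # where Nextcloud stores its files
    repository = "/srv/backups/restic/nextcloud";   # placeholder
    passwordFile = "/run/secrets/restic-password";  # placeholder
    initialize = true;                              # create the repository if missing
  };
}
```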

As said above, a contract couples options with an expected behavior. The options for this contract are:

```nix
user = mkOption {
  description = "Unix user doing the backups.";
  type = str;
  example = "vaultwarden";
};

sourceDirectories = mkOption {
  description = "Directories to back up.";
  type = nonEmptyListOf str;
  example = [ "/var/lib/vaultwarden" ];
};

excludePatterns = mkOption {
  description = "File patterns to exclude.";
  type = listOf str;
};

hooks = mkOption {
  description = "Hooks to run around the backup.";
  default = {};
  type = submodule {
    options = {
      beforeBackup = mkOption {
        description = "Hooks to run before backup.";
        type = listOf str;
      };

      afterBackup = mkOption {
        description = "Hooks to run after backup.";
        type = listOf str;
      };
    };
  };
};
```

The goal of this contract is to capture the essence of what it means to back up files in most cases. Maybe this is not enough for some peculiar cases and we’ll need a superset contract of some sort. So far, it has been enough for me to back up Nextcloud, Jellyfin, Vaultwarden, LLDAP, Deluge, Grocy, Forgejo, Hledger, Audiobookshelf and Home-Assistant.

The files backup contract allows the Nextcloud module to express in code how it should be backed up, by exposing a backup option (the name does not matter but should be indicative of the contract). This option is a submodule whose fields are given values by the Nextcloud module through their defaults:

```nix
backup = lib.mkOption {
  description = ''
    Backup configuration.
  '';
  default = {};
  type = lib.types.submodule {
    options = contracts.backup.mkRequester {
      user = "nextcloud";
      sourceDirectories = [
        cfg.dataDir
      ];
      excludePatterns = [ ".rnd" ];
    };
  };
};
```

Surprise! You didn’t know you must exclude the .rnd file from the backup? It’s actually not a file. It can’t be backed up. I discovered this the hard way by having my backup fail one day. But now, nobody needs to suffer anymore since we can write this information in code!

We can see the use of the function contracts.backup.mkRequester, which produces a set of fields that gets plugged into the options field of a submodule. This function forces the maintainer of the requester module to only give fields relevant to the backup contract. It also sets up default and defaultText correctly. This was actually hard to get right to satisfy the documentation tooling, so the function helps quite a lot here.

The full definition of the requester part of the contract can be found here. You’ll see the contract provides a mkRequest function which is in turn plugged in here to produce the final contract and this mkRequester function. This double layer of functions was again useful to get a uniform structure for all contracts and to please the documentation tooling. The rendered documentation can be seen here.
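To illustrate what such a helper does, here is a simplified, hypothetical mkRequester for the files backup contract. It is not the SelfHostBlocks implementation. The option list is shortened and the request wrapper is inferred from config.shb.nextcloud.backup.request. The naive defaultText would also not survive documentation generation when the default references config, which is exactly the hard part mentioned above.

```nix
# Hypothetical, simplified `mkRequester` for the files backup contract.
# Not the SelfHostBlocks implementation: options are shortened for illustration.
{ lib }:
let
  inherit (lib) mkOption types;

  # Shortened request options of the files backup contract.
  requestOptions = {
    user = { type = types.str; };
    sourceDirectories = { type = types.nonEmptyListOf types.str; };
    excludePatterns = { type = types.listOf types.str; default = [ ]; };
  };

  mkRequester = values:
    let
      unknown = lib.subtractLists (lib.attrNames requestOptions) (lib.attrNames values);
    in
    # Reject fields that are not part of the contract.
    assert lib.assertMsg (unknown == [ ])
      "mkRequester: unknown fields: ${lib.concatStringsSep ", " unknown}";
    {
      request = mkOption {
        description = "Request part of the files backup contract.";
        default = { };
        type = types.submodule {
          options = lib.mapAttrs (field: spec:
            mkOption ({ inherit (spec) type; }
              # The requester's value becomes the option default, so it stays overridable.
              // lib.optionalAttrs (values ? ${field} || spec ? default) rec {
                default = values.${field} or spec.default;
                # Naive: a real helper must avoid evaluating `default` when rendering docs.
                defaultText = lib.literalExpression (lib.generators.toPretty { } default);
              })
          ) requestOptions;
        };
      };
    };
in
mkRequester
```

A requester would then write options = mkRequester { user = "nextcloud"; sourceDirectories = [ cfg.dataDir ]; };. A typo such as sourceDirectory would fail evaluation with the assertion message instead of being silently ignored.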

Provider Side

Now that we have something describing how to be backed up, we need something that does the backing up. This is the role of a provider module. Here, I’ll use a layer I wrote on top of the original nixpkgs Restic module, which exposes the options of the contract. The code is here and I copied the snippet below:

```nix
let
  repoSlugName = name: builtins.replaceStrings [ "/" ":" ] [ "_" "_" ] (removePrefix "/" name);
  fullName = name: repository: "restic-backups-${name}_${repoSlugName repository.path}";
in
# ...
instances = mkOption {
  description = "Files to backup following the [backup contract](./contracts-backup.html).";
  default = {};
  type = attrsOf (submodule ({ name, config, ... }: {
    options = contracts.backup.mkProvider {
      settings = mkOption {
        description = ''
          Settings specific to the Restic provider.
        '';
        type = submodule {
          options = commonOptions { inherit name config; prefix = "instances"; };
        };
      };

      resultCfg = {
        restoreScript = fullName name config.settings.repository;
        restoreScriptText = "${fullName "<name>" { path = "path/to/repository"; }}";
        backupService = "${fullName name config.settings.repository}.service";
        backupServiceText = "${fullName "<name>" { path = "path/to/repository"; }}.service";
      };
    };
  }));
};
```
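To see which systemd unit the end user ends up with, the two naming helpers from the snippet can be evaluated on their own. This standalone copy assumes <nixpkgs> is on NIX_PATH and is only meant to show the naming scheme.

```nix
# Evaluate with: nix-instantiate --eval --strict naming.nix
let
  lib = import <nixpkgs/lib>;
  repoSlugName = name: builtins.replaceStrings [ "/" ":" ] [ "_" "_" ] (lib.removePrefix "/" name);
  fullName = name: repository: "restic-backups-${name}_${repoSlugName repository.path}";
in
{
  # Evaluates to "restic-backups-nextcloud_srv_backups_restic_nextcloud.service".
  service = "${fullName "nextcloud" { path = "/srv/backups/restic/nextcloud"; }}.service";
}
```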

So here too, we have another function, mkProvider, which produces a set of options that gets plugged into a submodule. Here though, the exposed instances option is an attrsOf, and we use the name and config from the submodule to configure the defaults of the contract. Getting this right was messy to figure out, but it’s pretty powerful in the end. We can reference other parts of the config to generate the default values, as long as we provide a hardcoded “*Text” version for the documentation. The rendered documentation can be found here.

Compared to the mkRequester function above, this function takes in a nested attrset. The resultCfg is the part that configures the result of the provider module. For a files backup contract, the provider module must provide a systemd service that does the backup and an executable that can restore a previous version. It’s a pretty loose definition, but I didn’t spend much time on enforcing a precise behavior for the executable.

There’s also the settings field, which is essentially a passthrough option that allows one to define any other options not defined in the contract but still necessary to set up the provider correctly.
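A simplified, hypothetical mkProvider can show how resultCfg and its *Text companions might turn into options. This is not the SelfHostBlocks code. The request type is deliberately loose, and every result is assumed to be a string.

```nix
# Hypothetical, simplified `mkProvider` (not the SelfHostBlocks implementation).
# Each field `foo` of resultCfg becomes a `result.foo` option whose default may
# depend on `name`/`config`; an optional `fooText` is shown in the manual instead.
{ lib }:
let
  inherit (lib) mkOption types;

  mkProvider = { settings, resultCfg }:
    let
      # Real result fields, without their "*Text" documentation companions.
      fields = lib.filterAttrs (n: _: !(lib.hasSuffix "Text" n)) resultCfg;
    in
    {
      request = mkOption {
        description = "Request part of the contract, plumbed in by the end user.";
        # Simplification: a real helper would reuse the contract's request options.
        type = types.attrsOf types.anything;
      };

      # Passthrough, provider-specific options.
      inherit settings;

      result = mkOption {
        description = "Result part of the contract, computed by the provider.";
        default = { };
        type = types.submodule {
          options = lib.mapAttrs (n: value:
            mkOption ({
              type = types.str;
              default = value;
            } // lib.optionalAttrs (resultCfg ? "${n}Text") {
              # Show the hardcoded text, e.g. "/run/secrets/<name>", as a quoted string.
              defaultText = lib.literalExpression (builtins.toJSON resultCfg."${n}Text");
            })
          ) fields;
        };
      };
    };
in
mkProvider
```

With this shape, the secrets provider shown later would pass resultCfg = { path = "/run/secrets/${name}"; pathText = "/run/secrets/<name>"; }. Only path becomes an option, and pathText is used for its documentation.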

The config part of the module, as stated above, just does the translation between this contract world and the actual options defined by the nixpkgs Restic module. It can be seen here. I won’t copy it here because it’s lengthy and doesn’t add any value related to contracts.
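Since that translation is not reproduced here, the following is only a sketch of what it could look like against the upstream Restic module. It assumes settings.repository.path, as used by fullName in the snippet above, and a hypothetical settings.passwordFile field. The real layer likely maps more options.

```nix
# Hypothetical translation layer: each contract instance becomes an upstream
# `services.restic.backups.<name>` job. `settings.passwordFile` is assumed.
{ config, lib, ... }:
let
  cfg = config.shb.restic;

  repoSlugName = name: builtins.replaceStrings [ "/" ":" ] [ "_" "_" ] (lib.removePrefix "/" name);

  # The upstream hook options are nullable strings; empty hook lists map to null.
  joinOrNull = cmds: if cmds == [ ] then null else lib.concatStringsSep "\n" cmds;
in
{
  config.services.restic.backups = lib.mapAttrs' (name: instance:
    # Upstream names the unit restic-backups-<name>, which matches `fullName`.
    lib.nameValuePair "${name}_${repoSlugName instance.settings.repository.path}" {
      # From the contract's request.
      user = instance.request.user;
      paths = instance.request.sourceDirectories;
      exclude = instance.request.excludePatterns;
      backupPrepareCommand = joinOrNull instance.request.hooks.beforeBackup;
      backupCleanupCommand = joinOrNull instance.request.hooks.afterBackup;

      # From the provider-specific passthrough settings.
      repository = instance.settings.repository.path;
      passwordFile = instance.settings.passwordFile;
      initialize = true;
    }
  ) cfg.instances;
}
```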

End User Side

Finally, we need the end user to set up a Restic backup job and plug it into the correct option of Nextcloud. The complete example snippet can be found here, but in essence it looks like this:

```nix
shb.restic.instances."nextcloud" = {
  request = config.shb.nextcloud.backup.request;
  settings.repository = "/srv/backups/restic/nextcloud";
};
```

I use the shb prefix here to denote modules using contracts. That three-letter prefix is an abbreviation of my project, SelfHostBlocks, where I already use contracts for my server.

The request from the Nextcloud module (shb.nextcloud.backup.request) is plugged into the request field of the provider module. The passthrough settings option is used to give options specific to the actual provider used.

One can see how all the details are hidden from the user here. But even better, one could create a second Restic instance backing up to an S3 bucket:

```nix
shb.restic.instances."nextcloud_s3" = {
  request = config.shb.nextcloud.backup.request;
  settings.repository = "s3://...";
};
```

Or one can use BorgBackup, assuming a layer translating the files backup contract to the nixpkgs BorgBackup module exists:

```nix
shb.borgbackup.instances."nextcloud" = {
  request = config.shb.nextcloud.backup.request;
  settings.repository = "/srv/backups/borgbackups/nextcloud";
};
```

And backing up another service is just as easy:

```nix
shb.restic.instances."vaultwarden" = {
  request = config.shb.vaultwarden.backup.request;
  settings.repository = "/srv/backups/restic/vaultwarden";
};

shb.restic.instances."vaultwarden_s3" = {
  request = config.shb.vaultwarden.backup.request;
  settings.repository = "s3://";
};

shb.borgbackup.instances."vaultwarden" = {
  request = config.shb.vaultwarden.backup.request;
  settings.repository = "/srv/backups/borgbackup/vaultwarden";
};
```

Having all modules use this files backup contract is a huge boost in usability and freedom for the end user. It also inverts control and lets the user choose how to back something up, without needing any work from the maintainers of the Nextcloud or other modules.
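Because every stanza has the same shape, the plumbing can also be generated. Here is a small sketch using lib.genAttrs. It assumes each listed service exposes a backup option as above, and the services list is illustrative.

```nix
{ config, lib, ... }:
let
  # Illustrative list of modules exposing a `backup` option following the contract.
  services = [ "nextcloud" "vaultwarden" ];
in
{
  # Produces shb.restic.instances.nextcloud and shb.restic.instances.vaultwarden.
  shb.restic.instances = lib.genAttrs services (service: {
    request = config.shb.${service}.backup.request;
    settings.repository = "/srv/backups/restic/${service}";
  });
}
```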

Stream Backup Contract

If one backs up files, one should be able to back up databases too. I’ll go quickly over this one and only highlight the differences with the files backup contract.

I called this contract the database backup contract in my project, but I think stream backup contract is a better fit.

One could use the files backup contract to back up a database. Usually though, you can’t just back up the underlying files of a running database; you need a dump. Creating that dump is expensive and takes disk space, so it’s usually better to stream it directly into the backup tool.

The contract for this looks like so:

```nix
user = mkOption {
  description = ''
    Unix user doing the backups.

    This should be an admin user having access to all databases.
  '';
  type = str;
  example = "postgres";
};

backupName = mkOption {
  description = "Name of the backup in the repository.";
  type = str;
  example = "postgresql.sql";
};

backupCmd = mkOption {
  description = "Command that produces the database dump on stdout.";
  type = str;
  example = literalExpression ''
    ''${pkgs.postgresql}/bin/pg_dumpall | ''${pkgs.gzip}/bin/gzip --rsyncable
  '';
};

restoreCmd = mkOption {
  description = "Command that reads the database dump on stdin and restores the database.";
  type = str;
  example = literalExpression ''
    ''${pkgs.gzip}/bin/gunzip | ''${pkgs.postgresql}/bin/psql postgres
  '';
};
```

The Postgres module would be a requester here, providing a databasebackup option defined like so:

```nix
databasebackup = lib.mkOption {
  description = ''
    Backup configuration.
  '';
  default = {};
  type = lib.types.submodule {
    options = contracts.databasebackup.mkRequester {
      user = "postgres";
      backupName = "postgres.sql";
      backupCmd = ''
        ${pkgs.postgresql}/bin/pg_dumpall | ${pkgs.gzip}/bin/gzip --rsyncable
      '';
      restoreCmd = ''
        ${pkgs.gzip}/bin/gunzip | ${pkgs.postgresql}/bin/psql postgres
      '';
    };
  };
};
```

The Restic module - the provider module - would have an attrsOf option taking in this request. The code for that can be found here.
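To give a rough idea of what such a provider does with the request, here is a hypothetical sketch based on restic’s --stdin and --stdin-filename flags. The settings.repository and settings.passwordFile fields are assumptions. The repository is assumed to be initialized already, and the password file must be readable by the requested user.

```nix
# Hypothetical stream backup provider built on restic's stdin support.
# `settings.repository` and `settings.passwordFile` are assumed settings fields.
{ config, lib, pkgs, ... }:
let
  cfg = config.shb.restic.databases;
in
{
  systemd.services = lib.mapAttrs' (name: instance:
    lib.nameValuePair "restic-database-backup-${name}" {
      description = "Stream backup of ${name} with restic";
      serviceConfig = {
        Type = "oneshot";
        # Run as the admin user from the request, e.g. "postgres".
        User = instance.request.user;
      };
      environment = {
        RESTIC_REPOSITORY = instance.settings.repository;
        RESTIC_PASSWORD_FILE = instance.settings.passwordFile;
      };
      # The dump is piped straight into restic and never written to disk.
      script = ''
        set -o pipefail
        (
          ${instance.request.backupCmd}
        ) | ${pkgs.restic}/bin/restic backup \
              --stdin --stdin-filename ${lib.escapeShellArg instance.request.backupName}
      '';
    }
  ) cfg;
}
```

A restore counterpart would do the reverse, for example by piping restic dump for the latest snapshot into restoreCmd. Check the restic documentation for the exact path under which the stdin file is stored in the snapshot.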

Finally, on the end user side, using this looks like so:

```nix
shb.restic.databases."postgres" = {
  request = config.shb.postgresql.databasebackup.request;
  settings = {
    # ...
  };
};
```

Notice a pattern?

Secrets Contract

Until now, we didn’t do much with the result set by the provider module. Let’s define a contract where the result is used.

We’ll define a contract for providing secrets.

Contract

On the requester side, the contract allows defining the following options:

```nix
options = {
  mode = mkOption {
    description = "Mode of the secret file.";
    type = str;
  };

  owner = mkOption {
    description = "Linux user owning the secret file.";
    type = str;
  };

  group = mkOption {
    description = "Linux group owning the secret file.";
    type = str;
  };

  restartUnits = mkOption {
    description = "Systemd units to restart after the secret is updated.";
    type = listOf str;
  };
};
```

On the provider side, the contract just defines the resulting path where the secret will be located:

```nix
options = {
  path = mkOption {
    type = lib.types.path;
    description = ''
      Path to the file containing the secret generated out of band.

      This path will exist after deploying to a target host,
      it is not available through the nix store.
    '';
  };
};
```

Requester Side

Nextcloud needs a password for the admin user. It defines the adminPass option like so:

```nix
adminPass = lib.mkOption {
  description = "Nextcloud admin password.";
  type = lib.types.submodule {
    options = contracts.secret.mkRequester {
      mode = "0400";
      owner = "nextcloud";
      restartUnits = [ "phpfpm-nextcloud.service" ];
    };
  };
};
```

Then, in the config section, it uses the secret like so:

```nix
services.nextcloud.config.adminpassFile = cfg.adminPass.result.path;
```

Provider Side

A first possible provider is Sops.

```nix
options.shb.sops = {
  secret = mkOption {
    description = "Secret following the [secret contract](./contracts-secret.html).";
    default = {};
    type = attrsOf (submodule ({ name, options, ... }: {
      options = contracts.secret.mkProvider {
        settings = mkOption {
          description = ''
            Settings specific to the Sops provider.

            This is a passthrough option to set [sops-nix options](https://github.com/Mic92/sops-nix/blob/24d89184adf76d7ccc99e659dc5f3838efb5ee32/modules/sops/default.nix).

            Note though that the `mode`, `owner`, `group`, and `restartUnits`
            are managed by the [shb.sops.secret.<name>.request](#blocks-sops-options-shb.sops.secret._name_.request) option.
          '';
          type = attrsOf anything;
          default = {};
        };

        resultCfg = {
          path = "/run/secrets/${name}";
          pathText = "/run/secrets/<name>";
        };
      };
    }));
  };
};
```

The settings field really is a passthrough here, as it’s an attrsOf anything. TBH, I was lazy and just let the upstream sops module define the options.

The resultCfg sets the path where the secret will be located.

The config part is pretty simple:

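```nix
config = {
  sops.secrets = let
    # The request comes last so that mode, owner, group and restartUnits
    # set by the requester cannot be overridden by the passthrough settings.
    mkSecret = n: secretCfg: secretCfg.settings // secretCfg.request;
  in mapAttrs mkSecret cfg.secret;
};
```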

End User Side

The plumbing now must be done in both directions. First, the request must be given to the provider to define how to generate the secret:

```nix
shb.sops.secret."nextcloud/adminpass".request =
  config.shb.nextcloud.adminPass.request;
```

Then, the result side must be given back to the requester module:

```nix
shb.nextcloud.adminPass.result =
  config.shb.sops.secret."nextcloud/adminpass".result;
```

This double-sided plumbing is a bit annoying but the following doesn’t work:

```nix
shb.nextcloud.adminPass =
  config.shb.sops.secret."nextcloud/adminpass";
```
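One way to hide the double-sided plumbing is a small helper that sets both directions at once. This is a hypothetical sketch built on lib.setAttrByPath and lib.getAttrFromPath. The name wireSopsSecret is made up and is not part of the implementation described here.

```nix
# Hypothetical helper performing both halves of the secret plumbing at once.
# `requesterPath` points at an option built with contracts.secret.mkRequester.
{ config, lib, ... }:
let
  wireSopsSecret = { requesterPath, secretName }:
    lib.mkMerge [
      # Requester -> provider: how the secret file must be created.
      (lib.setAttrByPath [ "shb" "sops" "secret" secretName "request" ]
        (lib.getAttrFromPath (requesterPath ++ [ "request" ]) config))
      # Provider -> requester: where the secret file ends up.
      (lib.setAttrByPath (requesterPath ++ [ "result" ])
        config.shb.sops.secret.${secretName}.result)
    ];
in
{
  config = wireSopsSecret {
    requesterPath = [ "shb" "nextcloud" "adminPass" ];
    secretName = "nextcloud/adminpass";
  };
}
```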

Hardcoded Secret

In all the NixOS tests involving secrets, I saw pkgs.writeText being used to generate a file whose path was then given to the option needing it. This has one major flaw: it doesn’t ensure that the file is generated with the correct permissions. In fact, dealing with that is currently left as an exercise to the end user.

Thanks to contracts though, testing the permissions becomes easy. We can create a new provider that wraps pkgs.writeText and sets the permissions accordingly. The full module is here:

```nix
{ config, lib, pkgs, ... }:
let
  cfg = config.shb.hardcodedsecret;

  contracts = pkgs.callPackage ../contracts {};

  inherit (lib) mapAttrs' mkOption nameValuePair;
  inherit (lib.types) attrsOf nullOr str submodule;
  inherit (pkgs) writeText;
in
{
  options.shb.hardcodedsecret = mkOption {
    default = {};
    description = ''
      Hardcoded secrets. These should only be used in tests.
    '';
    example = lib.literalExpression ''
      {
        mySecret = {
          request = {
            owner = "me";
            mode = "0400";
            restartUnits = [ "myservice.service" ];
          };
          settings.content = "My Secret";
        };
      }
    '';
    type = attrsOf (submodule ({ name, ... }: {
      options = contracts.secret.mkProvider {
        settings = mkOption {
          description = ''
            Settings specific to the hardcoded secret module.

            Give either `content` or `source`.
          '';
          type = submodule {
            options = {
              content = mkOption {
                type = nullOr str;
                description = ''
                  Content of the secret as a string.

                  This will be stored in the nix store and should only be used for testing or maybe in dev.
                '';
                default = null;
              };

              source = mkOption {
                type = nullOr str;
                description = ''
                  Source of the content of the secret as a path in the nix store.
                '';
                default = null;
              };
            };
          };
        };

        resultCfg = {
          path = "/run/hardcodedsecrets/hardcodedsecret_${name}";
        };
      };
    }));
  };

  config = {
    system.activationScripts = mapAttrs' (n: cfg':
      let
        source = if cfg'.settings.source != null
          then cfg'.settings.source
          else writeText "hardcodedsecret_${n}_content" cfg'.settings.content;
      in
        nameValuePair "hardcodedsecret_${n}" ''
          mkdir -p "$(dirname "${cfg'.result.path}")"
          touch "${cfg'.result.path}"
          chmod ${cfg'.request.mode} "${cfg'.result.path}"
          chown ${cfg'.request.owner}:${cfg'.request.group} "${cfg'.result.path}"
          # Copying onto the existing file keeps the mode and owner set above.
          cp ${source} "${cfg'.result.path}"
        ''
    ) cfg;
  };
}
```

Now, in a NixOS test, whenever a secret must be given, we can use this provider to test that the permissions are accurate:

```nix
shb.vaultwarden = {
  databasePassword.result = config.shb.hardcodedsecret.passphrase.result;
};

shb.hardcodedsecret.passphrase = {
  request = config.shb.vaultwarden.databasePassword.request;
  settings.content = "PassPhrase";
};
```
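Within such a test, the permission check itself can stay short. The fragment below is a hypothetical testScript. It assumes the node is called machine, lib is in scope, and the Vaultwarden module requests a mode and owner for its database password.

```nix
# Hypothetical testScript for a NixOS test whose node "machine" uses the configuration above.
testScript = { nodes, ... }:
  let
    secret = nodes.machine.shb.hardcodedsecret.passphrase;
    # stat prints modes without the leading zero, e.g. "400" for "0400".
    expected = "${lib.removePrefix "0" secret.request.mode} ${secret.request.owner}";
  in ''
    out = machine.succeed("stat -c '%a %U' ${secret.result.path}").strip()
    assert out == "${expected}", out
  '';
```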

Generic Tests

Speaking of tests, we haven’t yet talked about how to enforce that a provider behaves as the contract expects. This is done with generic NixOS tests. They are generic because they are functions that define the test script and expect to be given the provider module to test.

For the files backup contract, the generic test is located here. The interesting bit is that the options for the request part of the contract are hardcoded. This ensures all providers are tested the same way and can’t easily cheat.

The test script is pretty classic: it creates some files, backs them up, deletes them, restores them and then asserts they’re identical to the original files. One important aspect is that to start the backup and restore the files, we use the systemd service and restore script provided by the provider.

We must then instantiate this generic test for every provider implementing the contract. It is done for Restic here. By the way, I don’t like that I hardcode the username to be root and then something else. I’d love to use a randomly generated name here, but we don’t have that AFAIK.
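To illustrate the shape of such a generic test, here is a hypothetical sketch using pkgs.testers.runNixOSTest. The providerPath parameter (for example [ "shb" "restic" ]) is an assumption. Calling the restore script with "restore latest" is also assumed and is not the interface of the actual test.

```nix
# Hypothetical generic test for the files backup contract.
# `providerModule`, `providerPath` and `settings` are given per provider.
{ pkgs, lib }:
{ name, providerModule, providerPath, settings }:
pkgs.testers.runNixOSTest {
  name = "contract-backup-${name}";

  nodes.machine = {
    imports = [ providerModule ];
    # The request is hardcoded so that every provider is tested the same way.
    config = lib.setAttrByPath (providerPath ++ [ "instances" "test" ]) {
      request = {
        user = "root";
        sourceDirectories = [ "/opt/files" ];
        excludePatterns = [ ];
      };
      inherit settings;
    };
  };

  testScript = { nodes, ... }:
    let
      result = (lib.getAttrFromPath (providerPath ++ [ "instances" "test" ]) nodes.machine).result;
    in ''
      machine.succeed("mkdir -p /opt/files && echo hello > /opt/files/a")
      machine.succeed("systemctl start ${result.backupService}")
      machine.succeed("rm -r /opt/files")
      machine.succeed("${result.restoreScript} restore latest")
      machine.succeed("grep -qx hello /opt/files/a")
    '';
}
```

Instantiating it for a provider then comes down to one call per provider, passing its module, its option path and the provider-specific settings.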

There are also generic tests for the secrets contract and instantiation for the hardcoded secret provider. Same for the stream backup contract and the Postgres instantiation.

Tour of the Code

The contract library, containing the machinery, is in /modules/contracts/default.nix. All contracts are located in the same folder. The generic tests, as well as the documentation for each contract, are located in a subfolder named after the contract.

Tests for the contracts are located in test/contracts.

Drawbacks

Discovering which provider supports which contract can be hard. There should be a generated index in the documentation.

Not sure about other drawbacks, but I’m curious what the community thinks.

Alternatives

I’m not sure there are alternatives. Tweaks to the implementation or interface, sure, but I haven’t seen an alternative in the 2 years I’ve been working on this.

Prior Art

Same here, I’ve never seen anyone talk about this or projects tackling this in the NixOS ecosystem.

In the software world in general, this definitely exists already. The design uses structural typing, which is close to what Python calls duck typing.

In Kubernetes, I believe the Ingress resource is essentially a contract for reverse proxies, implemented by interchangeable ingress controllers, but I’m not familiar with the ecosystem.

Not sure it’s fair to categorize this as prior art, but I gave two talks about this. One at NixCon Pasadena 2024 and the other at NixCon Berlin 2024.

Unresolved questions

There are things I’d like to do better. If anything, the plumbing the end user needs to do is a bit ugly. You can see my full config here and judge for yourself.

Also, I’m sure there are still unknown unknowns which will be discovered as I (we?) define new contracts and providers.

Future work

There is already a path for upstreaming the backup contract. I think this one is the best to start with because very few modules, if any, provide such a feature. Adding this contract thus adds a lot of value to the NixOS ecosystem and doesn’t require adding backwards incompatible changes, lowering the friction to adoption.

In parallel, adding more contracts is crucial. Those I already see as useful, in no particular order, are:

- Setting up a database

- Reverse Proxy

- LDAP

- SSO

- Mountpoint? (Think ZFS dataset or actual mountpoint: a directory provided by something.)

Parting Notes

I want to insist on the essence of this RFC. What I want to get across is that we need more decoupling in NixOS. The structural typing is less important; it’s what I chose to implement this, and I think it’s a pretty good fit with the NixOS module system. Even less important is the shape of the contracts themselves. I can see multiple overlapping, subset or superset contracts existing in nixpkgs without any issues. For example, there could be a files backup contract with pre/post script hooks and one without. Both can live in nixpkgs and evolve separately or together.

Thanks for reading! I’m eager to get feedback on this.

Cheers,

ibizaman