In my eyes, it would be the right solution to the wrong problem. If we are finding ourselves reinventing state democracy from first principles, I think this is a clear sign to take a step back and reevaluate. At the core, what we want is: 1) functional governance 2) some mechanism to keep it aligned with and representing “the community” 3) minimize the time and energy spent voting instead of coding. If our current approach fails at 3 because the voting process is too tedious and complicated, I don’t know if “let’s shift work off the voters by centralizing it and doing a bunch more work elsewhere” is a desirable solution. (Also, I’d argue that we are currently failing to various degrees at 1 and 2 as well)

…vendor lockin-free solutions to fundamental challenges like provably correct relocatability and late binding, dynamic and incremental builds, efficient store sharing with minimum trust, or economical per-app vm isolation, instead of “foobar: 1.23 -> 1.24” which happens to be some 90% of our commits. For this we mostly just need moneys for maintainers, without them having to ask for permission and approval (from the SC, from the Stichting, or from Third Party Sponsors), and some minimum structure to keep the total quantity of conflict bounded. All this imaginary stuff like “governance” and “representation”, or “legitimacy” is quite secondary to these, and often potentially contrary.

approval voting (Gabriella)

Ranking is fun at least because we get heatmaps with “100 voters put X as last/second to last choice”

Ideally we want to channel the energy from forum flames to voting, but make low-effort expression of preferences also possible so that voting trades off with flames but not coding.

I am the author of that blogpost above.

Ragebait headline aside, in reality that is a post about my frustration with the difficulty of voting in the election. I too am uncomfortable about needing to reach for an LLM to help me summarize the candidates and rank them according to my own personal values.

I will also add that the blog post understates how much verification I did afterwards, and I have continued checking candidates since then out of curiosity. I stand by my votes, and it is an ordering that I would have ended up with regardless of method. If anything, I suspect that using that methodology was more thorough than I would have been able to be manually.

I don’t need to spend more than an hour voting in a real election, it’s weird that I should be expected to spend more than that for an OSS project.

(Also maybe this discussion should move to some other thread?)

I think it’s a powder keg to be honest. Not that I wouldn’t want to trust them to do it, it just feels like a much safer default to outsource it completely to consensus if possible.

but one can answer other questions

hm. couldn’t our status quo of ‘candidates may prioritize answering questions with more upvote emoji reactions if they like’ sort of handle this?

Interesting, where do you live?

Electing the National Council of Switzerland easily takes an hour for me when I do it properly by taking the smartvote questionnaire and then comparing the results against the candidates.

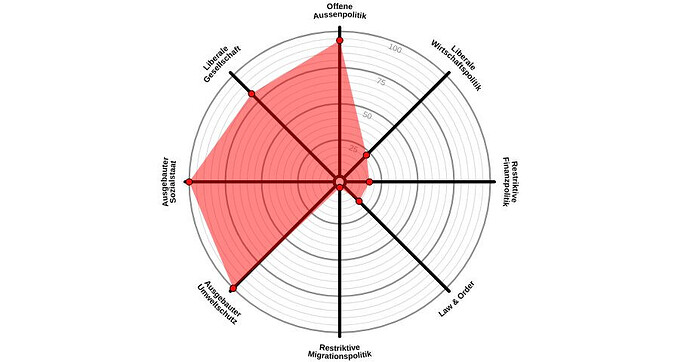

Which is actually something that I find quite useful when voting and it gives you more pretty graphs

Transitive closure of 75% agreement about question equivalence, in a low-turnout poll, is not really a consensus of project contributors! There will be some poetic justice in the selection of questions being more complicated than the election of the SC, though.

An indication of expected number of questions to answer might be useful, though.

Well, one of the election results is willingness of people to brainstorm UI…

And you have the bonus of trusting smartvote and having a professional political class (some people first articulating their policy views literally in the election statements doesn’t make it easier)

One thing that would simplify things but can’t be done on OpaVote (and the number of proportional-ballot-friendly voting platforms with a track record is limited) is if I could say «we need 5 winners, rank at least 5 candidates, or more if you expect many of your first choices to be eliminated». It mattered that a single-candidate ballot is not valid, but OpaVote has no settings between «empty ballot is valid» and «must rank them all», the latter being huge overkill for a proportional election.

isn’t setting «empty ballot is valid» fine?

i think traditionally in STV one would indeed have the choice of how many of their preferences to rank. only ranked options would be deemed preferred.

intuitively, that kind of makes sense i guess.

OpaVote does not allow corrections, and zero-choice and one-choice ballots are easy to submit mistakenly. This was a pure vague-feeling choice between the options on the table.

Note that ranking N candidates actually provides less information than scoring candidates on a scale from 1 to N (and the latter is also easier on the voters).

In other words, if you have N candidates, the number of bits of information a voter provides by ranking them is:

log₂(N!) ≈ N log₂ N - (log₂ e) N (by Stirling’s approximation)

… whereas the number of bits of information a voter provides by scoring them from 1 to N is:

log₂(N^N) = N log₂ N

… which is more information (log₂(N^N) > log₂(N!)).

This latter approach (scoring each candidate from 1 to N, and the candidates with the highest cumulative score across all voters win) is known as score voting or range voting. It provides more information with less cognitive burden on the voters (because voters don’t need to totally order the candidates; they can score two candidates the same).
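To make the comparison concrete, here is a minimal sketch that evaluates both formulas numerically. The function names are made up for illustration, and N = 24 is only borrowed from the candidate count mentioned elsewhere in this thread.

```python
import math

def ranking_bits(n: int) -> float:
    """Bits carried by a strict total ordering of n candidates: log2(n!)."""
    # math.lgamma(n + 1) equals ln(n!), so no huge factorial is ever built.
    return math.lgamma(n + 1) / math.log(2)

def scoring_bits(n: int) -> float:
    """Bits carried by scoring each of n candidates on a 1..n scale: log2(n^n)."""
    return n * math.log2(n)

def stirling_bits(n: int) -> float:
    """Leading terms of Stirling's approximation to log2(n!)."""
    return n * math.log2(n) - math.log2(math.e) * n

n = 24  # illustrative candidate count
print(f"ranking : {ranking_bits(n):.1f} bits")
print(f"stirling: {stirling_bits(n):.1f} bits (approximation of the line above)")
print(f"scoring : {scoring_bits(n):.1f} bits")
```

The gap between the two exact values grows roughly linearly in N, which is the (log₂ e) N term from the approximation.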

Not allowing voters to rank candidates equally is a case of OpaVote UI suboptimality, there I agree.

The horrible truth about scoring versus ranking is the amount of projection going on: scoring works a bit differently based on how granular it is between approval voting and effectively-continuous scoring (I dunno, 0 to 1000000); scoring at different granularities and ranking with and without indistinguishable clusters are all different situations.

For some people like me low-granularity scoring is painful due to threshold effects, high-granularity scoring is a risk of balancing the ratios of differences, ranking is doable and ranking-with-equality would be the best. (How I know I dislike scoring: I teach, I need to mark tests, I know which parts are the annoying ones)

For some people and some topics approval voting matches how they think about the situation.

But yes, if someone knows anything about the track record of any hosted score-voting solutions that allow getting anonymous ballots out, that would give more usable options to the next EC! (Tallying is reproducible so could be done separately, but some testing of the tallying tools in advance could also be useful)

That’s a nice idea, but as others have explained, I think it’s important to be aware that both the formulation and the selection of questions to include are very political.

One possible solution would be to have less centralization. Let whoever wants select questions, and don’t have one canonical set of questions. Let the candidates answer the questions they want. Perhaps provide a tool to both select the questions and compare the answers that these can plug into.

Yet if someone is able to assign numerical scores to the candidates, sorting the candidates by that score to get a ranking is trivial.

The nonlinear part of the process is sorting 24 numbers. If someone finds that too tedious, I promise to write a program to do it.

You’re missing the point of being able to give multiple candidates the same score.

That would be nice to improve, but for now I was working around that by `sort -R` on the same-score groups. (And I actually think it’s OK, but I’ve done lots of probability and statistics in the past)
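For anyone who wants to do the same without a shell, here is a rough sketch of the workaround: sort candidates by score, then shuffle within each same-score group to get the strict ranking that the ballot UI demands. The function name and the sample candidates are hypothetical, and this is not the tooling anyone in this thread actually used.

```python
import random
from itertools import groupby
from typing import Dict, List, Optional

def scores_to_ranking(scores: Dict[str, int],
                      rng: Optional[random.Random] = None) -> List[str]:
    """Turn per-candidate scores into a strict ranking, best first.

    Candidates with equal scores are ordered randomly, which mirrors
    running `sort -R` on each same-score group.
    """
    rng = rng or random.Random()
    # Highest score first; groupby needs equal scores to be adjacent.
    by_score = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    ranking: List[str] = []
    for _, group in groupby(by_score, key=lambda kv: kv[1]):
        tied = [name for name, _ in group]
        rng.shuffle(tied)  # random tie-break inside the group
        ranking.extend(tied)
    return ranking

# Hypothetical scores; "alice" and "carol" are tied at the top.
example = {"alice": 5, "bob": 3, "carol": 5, "dave": 1}
print(scores_to_ranking(example))
```

Passing a seeded `random.Random` makes the tie-breaks reproducible, which may or may not be what you want for a secret ballot.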

«Proper way of coin-flip-based decision making» when sorting basically yields that automatically (you do all comparisons by flipping a coin, and whenever you feel disappointment in the outcome, you turn the coin over and use the updated result of the coinflip).

(Of course, this only streamlines «do I have any opinion here» part, not the normally longer «what do I think about each candidate’s position» part)

I did a very basic analysis (warning: I haven’t double-checked my code, but it looks correct to me) of the ballots, which can be found at @pyrox.dev/ballot-understander at main · tangled. My finding is that by simple weighted results, 3/5 of the new SC members would have been different (voting in niklaskorz, pluiedev, and leona-ya instead of JulienMalka, philiptaron, and tomberek). I’m not going to make any value judgements, but this is simply a different way of ranking candidates that some may think more accurately represents their own beliefs of how the election should have ranked candidates. If you find issues with my code, please let me know on tangled instead of in this thread.

I’d caution anyone against taking what-if scenarios with this year’s ballots too seriously. I know I for one would have voted differently if a different tallying method were in use.

@pyrox From what I can tell, your code just averages the rank of every candidate among all voters? That is certainly a way of doing it, but it doesn’t lead to a representative body.

Here’s an example showcasing the problem (if I make a mistake, I’m sure @7c6f434c will correct me though):

Imagine there are 7 community factions and 14 candidates, with 7 candidates each representing a faction perfectly, while the 7 others are unknowns.

Now everybody ranks the candidate of their faction at the top, while ranking the other 6 factions’ candidates at the bottom, and the unknowns at random in between.

With a proportional representation tally, you end up with the 7 perfect representatives by design. But with an average rank tally, you’ll get the 7 unknowns elected: counting 14 points for a first place down to 1 point for a last place, the unknowns fill slots 2 to 8 and average ~10 points, while everybody’s representative will have a lower average score of 1/7*14 + 6/7*3.5 = 5.
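The thought experiment is easy to simulate. The sketch below is only an illustration under stated assumptions: the 7 factions are equally sized, first place is worth 14 points and last place 1, the other factions’ candidates are shuffled within the bottom 6 slots, and all names (`rep0`, `unknown0`, and so on) are invented. It implements the plain averaging tally only, not a proportional one.

```python
import random
from typing import Dict, List

N_FACTIONS = 7
N_UNKNOWNS = 7

def faction_ballot(faction: int, rng: random.Random) -> List[str]:
    """One ballot, best to worst, for a voter belonging to `faction`."""
    own = [f"rep{faction}"]
    unknowns = [f"unknown{i}" for i in range(N_UNKNOWNS)]
    others = [f"rep{i}" for i in range(N_FACTIONS) if i != faction]
    rng.shuffle(unknowns)  # unknowns land at random in the middle
    rng.shuffle(others)    # assumed: no preference among the other factions
    return own + unknowns + others

def average_points(ballots: List[List[str]]) -> Dict[str, float]:
    """Average points per candidate: top slot = len(ballot), bottom slot = 1."""
    totals: Dict[str, float] = {}
    for ballot in ballots:
        for position, name in enumerate(ballot):
            totals[name] = totals.get(name, 0) + (len(ballot) - position)
    return {name: total / len(ballots) for name, total in totals.items()}

rng = random.Random(42)
# 1000 voters per faction, all following the pattern described above.
ballots = [faction_ballot(f, rng) for f in range(N_FACTIONS) for _ in range(1000)]
avg = average_points(ballots)
winners = sorted(avg, key=avg.get, reverse=True)[:N_FACTIONS]
print(winners)
```

Under these assumptions every seat goes to an unknown, even though each faction has a candidate that one seventh of the voters put first.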