Whether Nixpkgs scales is a hot topic, and I would like to discuss it further with respect to the Python package set.

Earlier I posted a graph showing a steady increase in the size of the package set. In four years it grew from ~800 expressions to ~2500. Maintaining the package set takes a lot of effort because the expressions are maintained (semi-)manually. For why they are not generated automatically, see an RFC currently in development.

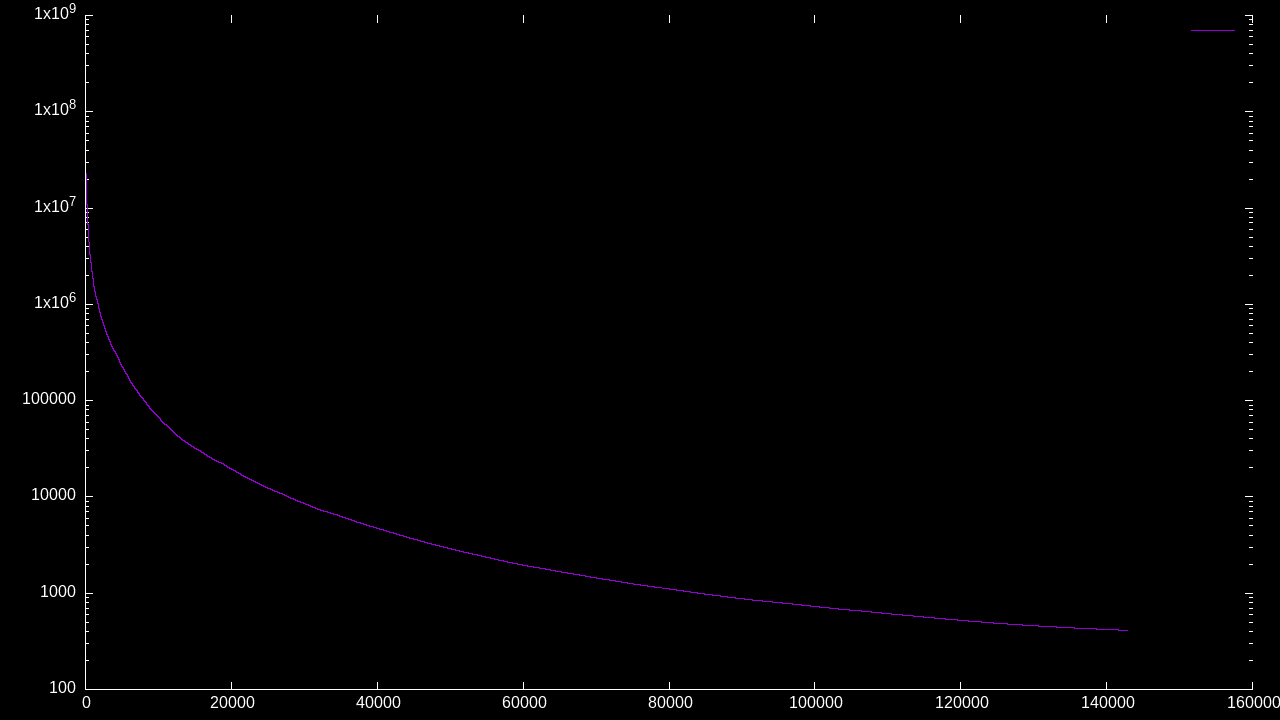

Leaf packages are not much of an issue, as they are easy to review and cannot cause much breakage. However, as their number grows, the core set of packages grows as well. Updating any of these core packages often breaks packages elsewhere, typically resulting in a chain of further updates and fixes. For this reason, even when ofborg reports fewer than 100 rebuilds, one can be fairly sure that the change will break some reverse dependencies.

This adds significantly to the maintenance burden, and the question now is how to manage it. It has been suggested to limit the scope of the package set, but limit it to what, exactly? And if we limit the scope, how would users get the expressions they need? Clearly, if we go in this direction, we need a way to extend the package set and compose it.

Even though such a mechanism is not really available yet, I am strongly in favor of not accepting any more new Python libraries unless they are needed by a core package. This would give us time to work on more important changes, such as setting up a workflow that makes it easier to test and integrate changes to the Python libraries.

Personally, I do not want to keep spending as much time on integrating updates and new packages; it has grown into a big time sink. Maybe a joint batch upgrade once a quarter would be a solution. What are your views on this?