It appears that NixOS updates on servers and machines with reasonably fast Internet are much slower than they could be, because cache.nixos.org packages are LZMA-compressed by default.

Repro

When I do a NixOS 22.05 → 22.11 update on my laptop, nix prints:

```
these 2656 paths will be fetched (7396.08 MiB download, 28788.08 MiB unpacked):
```

It then downloads at only ~100 Mbit/s. This is far below what my connection permits, and in many locations 1 Gbit/s and even 10 Gbit/s connections are available.

Despite pushing only ~12 MB/s, the nix process consumes around 100% CPU (varying between 70% and 130% in htop).

Investigation

I suspect LZMA decompression is the culprit. Attaching to the nix process with `sudo gdb -p [PID]`, I see:

```
Thread 5 (LWP 2294539 "nix-build"):
#0 0x00007feffc87422b in lzma_decode () from target:/nix/store/w3sdhqiazzp4iy40wc2g85mv0grg1cx0-xz-5.2.7/lib/liblzma.so.5
```

Full `gdb` thread output:

```
(gdb) thread apply all bt

Thread 5 (LWP 2294539 "nix-build"):
#0 0x00007feffc87422b in lzma_decode () from target:/nix/store/w3sdhqiazzp4iy40wc2g85mv0grg1cx0-xz-5.2.7/lib/liblzma.so.5
#1 0x00007feffc875edd in lzma2_decode () from target:/nix/store/w3sdhqiazzp4iy40wc2g85mv0grg1cx0-xz-5.2.7/lib/liblzma.so.5
#2 0x00007feffc86d666 in decode_buffer () from target:/nix/store/w3sdhqiazzp4iy40wc2g85mv0grg1cx0-xz-5.2.7/lib/liblzma.so.5
#3 0x00007feffc868009 in block_decode () from target:/nix/store/w3sdhqiazzp4iy40wc2g85mv0grg1cx0-xz-5.2.7/lib/liblzma.so.5
#4 0x00007feffc869b70 in stream_decode () from target:/nix/store/w3sdhqiazzp4iy40wc2g85mv0grg1cx0-xz-5.2.7/lib/liblzma.so.5
#5 0x00007feffc860be3 in lzma_code () from target:/nix/store/w3sdhqiazzp4iy40wc2g85mv0grg1cx0-xz-5.2.7/lib/liblzma.so.5
#6 0x00007feffcecbd3d in xz_filter_read () from target:/nix/store/x83nrgbl489c90nnrg84dsmwpmy11cv5-libarchive-3.6.1-lib/lib/libarchive.so.13
#7 0x00007feffcec1856 in __archive_read_filter_ahead () from target:/nix/store/x83nrgbl489c90nnrg84dsmwpmy11cv5-libarchive-3.6.1-lib/lib/libarchive.so.13
#8 0x00007feffceef6f0 in archive_read_format_raw_read_data () from target:/nix/store/x83nrgbl489c90nnrg84dsmwpmy11cv5-libarchive-3.6.1-lib/lib/libarchive.so.13
#9 0x00007feffcec1090 in archive_read_data () from target:/nix/store/x83nrgbl489c90nnrg84dsmwpmy11cv5-libarchive-3.6.1-lib/lib/libarchive.so.13
#10 0x00007feffe12d240 in nix::ArchiveDecompressionSource::read(char*, unsigned long) () from target:/nix/store/1qxf5i4na4a4cdykhxki2wyal82kl0zb-nix-2.11.0/lib/libnixutil.so
#11 0x00007feffe164b29 in nix::Source::drainInto(nix::Sink&) () from target:/nix/store/1qxf5i4na4a4cdykhxki2wyal82kl0zb-nix-2.11.0/lib/libnixutil.so
#12 0x00007feffe12d18e in std::_Function_handler<void (nix::Source&), nix::makeDecompressionSink(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, nix::Sink&)::{lambda(nix::Source&)#1}>::_M_invoke(std::_Any_data const&, nix::Source&) () from target:/nix/store/1qxf5i4na4a4cdykhxki2wyal82kl0zb-nix-2.11.0/lib/libnixutil.so
#13 0x00007feffe16ff9f in void boost::context::detail::fiber_entry<boost::context::detail::fiber_record<boost::context::fiber, nix::VirtualStackAllocator, boost::coroutines2::detail::push_coroutine<bool>::control_block::control_block<nix::VirtualStackAllocator, nix::sourceToSink(std::function<void (nix::Source&)>)::SourceToSink::operator()(std::basic_string_view<char, std::char_traits<char> >)::{lambda(boost::coroutines2::detail::pull_coroutine<bool>&)#1}>(boost::context::preallocated, nix::VirtualStackAllocator&&, nix::sourceToSink(std::function<void (nix::Source&)>)::SourceToSink::operator()(std::basic_string_view<char, std::char_traits<char> >)::{lambda(boost::coroutines2::detail::pull_coroutine<bool>&)#1}&&)::{lambda(boost::context::fiber&&)#1}> >(boost::context::detail::transfer_t) [clone .lto_priv.0] () from target:/nix/store/1qxf5i4na4a4cdykhxki2wyal82kl0zb-nix-2.11.0/lib/libnixutil.so
#14 0x00007feffdb2018f in make_fcontext () from target:/nix/store/1qxf5i4na4a4cdykhxki2wyal82kl0zb-nix-2.11.0/lib/libboost_context.so.1.79.0
#15 0x0000000000000000 in ?? ()

...
```

Meanwhile, htop confirms that this thread is indeed the one with high CPU usage:

```
nix-build <nixpkgs/nixos> --no-out-link -A system -I nixpkgs=/etc/nixos/nixpkgs

PID△USER PRI NI VIRT RES SHR S CPU% MEM% DISK R/W TIME+ Command
2294539 root 20 0 2165M 1138M 15792 R 67.8 2.4 32.37 M/s 0:04.59 │ │ │ ├─ nix-build <nixpkgs/nixos> --no-out-link -A
```

(Seeing threads in htop, which correspond to gdb's LWPs, requires unchecking F2 → Display options → Hide userland process threads.)
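A quick way to sanity-check the LZMA suspicion without gdb is to time xz decompression on a single core. This is a sketch under assumptions: it uses Python's standard `lzma` module, which wraps liblzma (the library at the top of the backtrace), and synthetic text-like data as a stand-in for real NAR contents, so absolute numbers will differ from real packages. The helpers `make_sample` and `xz_decode_throughput` are illustrative names, not part of Nix. Throughput is reported per MB of *compressed* input, since that is the figure comparable to download speed.

```python
import lzma
import random
import time


def make_sample(size_bytes: int, seed: int = 0) -> bytes:
    """Build compressible, text-like bytes (hypothetical stand-in for NAR data)."""
    rng = random.Random(seed)
    vocab = [f"word{i}" for i in range(2000)]
    words = []
    total = 0
    # The joined length is total - 1, so looping while total <= size_bytes
    # guarantees at least size_bytes bytes before truncation.
    while total <= size_bytes:
        w = rng.choice(vocab)
        words.append(w)
        total += len(w) + 1
    return " ".join(words).encode("ascii")[:size_bytes]


def xz_decode_throughput(data: bytes, preset: int = 6, repeats: int = 3) -> float:
    """Single-threaded xz decode speed in MB/s of compressed input (best of `repeats`)."""
    compressed = lzma.compress(data, format=lzma.FORMAT_XZ, preset=preset)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        out = lzma.decompress(compressed)
        best = min(best, time.perf_counter() - start)
    if out != data:
        raise RuntimeError("xz round-trip mismatch")
    return len(compressed) / best / 1e6


if __name__ == "__main__":
    mb_s = xz_decode_throughput(make_sample(8 * 1024 * 1024))
    print(f"xz decode: {mb_s:.1f} MB/s of compressed input (~{mb_s * 8:.0f} Mbit/s)")
```

Comparing the printed Mbit/s figure with the link speed gives a rough idea of whether one core can keep up with the download.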

LZMA decoding is slow

From the above, I conclude that LZMA decoding of downloaded binary packages takes ~70% of a CPU core at a ~100 Mbit/s download speed. Since the decoding happens in a single thread, downloading at 1 Gbit/s or 10 Gbit/s is impossible: decompression becomes the bottleneck.

On NixOS machines with fast Internet, this makes updates take 10x longer than necessary, or more.
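A back-of-the-envelope version of this reasoning, using the numbers from the repro. It assumes (my assumption, not measured) that decode cost scales linearly with the compressed input rate and stays on one thread, so the ceiling is simply the observed rate divided by the observed CPU fraction. The function names are illustrative.

```python
MIB = 1024 * 1024


def transfer_seconds(download_mib: float, rate_mbit_s: float) -> float:
    """Seconds to move `download_mib` MiB at `rate_mbit_s` megabits (10^6 bits) per second."""
    return download_mib * MIB * 8 / (rate_mbit_s * 1e6)


def decode_ceiling_mbit_s(observed_mbit_s: float, cpu_fraction: float) -> float:
    """Download rate at which one core would be fully busy decoding,
    assuming decode cost grows linearly with the compressed input rate."""
    return observed_mbit_s / cpu_fraction


DOWNLOAD_MIB = 7396.08  # from the nix output in "Repro"
ceiling = decode_ceiling_mbit_s(100, 0.70)  # ~70% of one core at ~100 Mbit/s

for link in (100, 1_000, 10_000):
    ideal = transfer_seconds(DOWNLOAD_MIB, link)
    capped = transfer_seconds(DOWNLOAD_MIB, min(link, ceiling))
    print(f"{link:>6} Mbit/s link: link-limited {ideal / 60:5.1f} min, "
          f"decode-capped {capped / 60:5.1f} min")
```

Under these assumptions the ceiling is ~143 Mbit/s, so both a 1 Gbit/s and a 10 Gbit/s link would need ~7.2 minutes for the 7396 MiB download, instead of ~62 s or ~6 s respectively.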

ZSTD binary caches?

The most obvious solution seems to be to use zstd instead of LZMA. Would that be a solution?

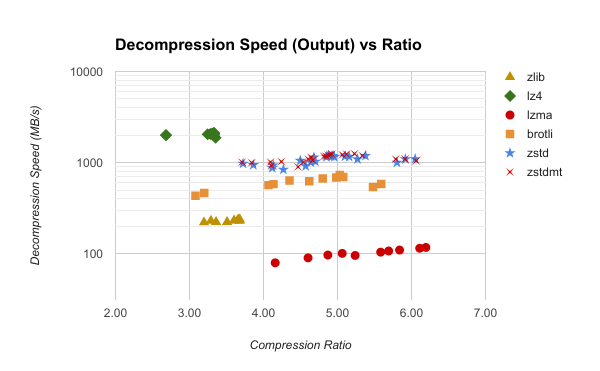

zstd decompresses 10x faster than LZMA according to https://gregoryszorc.com/blog/2017/03/07/better-compression-with-zstandard/

This article is from 2017, and zstd has received further decompression improvements since then (see the zstd CHANGELOG), so the factor might be even larger by now.

Nix already supports zstd

zstd support for Nix was added in April 2021 and released with Nix >= 2.4:

- Support zstd compression for binary caches · Issue #2255 · NixOS/nix · GitHub

- Use libarchive for all decompression (except brotli) by yorickvP · Pull Request #3333 · NixOS/nix · GitHub

Multi-threaded compression and compression level controls were added as well.
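To try zstd on a binary cache you control, Nix's binary-cache store URIs accept `compression`, `compression-level` and `parallel-compression` settings as query parameters. It is an assumption here that the installed Nix version supports all three. The sketch below only assembles a `nix copy` command line and prints it; the cache directory `/tmp/zstd-cache`, the level `3`, and the helper names are illustrative, and this says nothing about how cache.nixos.org itself is configured.

```python
import shlex
from typing import List, Optional
from urllib.parse import urlencode


def binary_cache_uri(path: str, compression: str = "zstd",
                     level: Optional[int] = None, parallel: bool = True) -> str:
    """Build a file:// binary cache URI carrying Nix store settings as query parameters."""
    params = {"compression": compression,
              "parallel-compression": "true" if parallel else "false"}
    if level is not None:
        params["compression-level"] = str(level)
    return f"file://{path}?{urlencode(params)}"


def nix_copy_argv(store_path: str, cache_uri: str) -> List[str]:
    """argv for `nix copy`; nix-command is enabled explicitly since it is experimental."""
    return ["nix", "--extra-experimental-features", "nix-command",
            "copy", "--to", cache_uri, store_path]


if __name__ == "__main__":
    uri = binary_cache_uri("/tmp/zstd-cache", level=3)  # hypothetical local cache dir
    # Print instead of running; pass the list to subprocess.run() to execute it.
    print(shlex.join(nix_copy_argv("/run/current-system", uri)))
```

Building the argv as a list (rather than one shell string) keeps the `?` and `&` in the URI from being interpreted by a shell; `shlex.join` is used only to display it.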

What other distros do

Other distributions have already made this switch (feel free to edit in, or mention in replies, further ones you know about), in chronological order:

- Fedora: Switched to zstd for `.rpm`s since Fedora 31, released October 2019. (source)
- Arch Linux: Switched from `xz` (which is LZMA) to `zstd` in December 2019. (source)

  > Recompressing all packages to zstd with our options yields a total ~0.8% increase in package size on all of our packages combined, but the decompression time for all packages saw a ~1300% speedup.

- Ubuntu: Switched to zstd for `.deb`s since Ubuntu 21.10. (source)