I’ve been at this for months now, and I’ve already spent hours on it tonight alone.

Hardware involved: an LG Gram 17 laptop with Intel 620 integrated graphics. The eGPU is a Razer Core X with an AMD RX 570. The external display is a 4K TV connected to the GPU’s DisplayPort output.

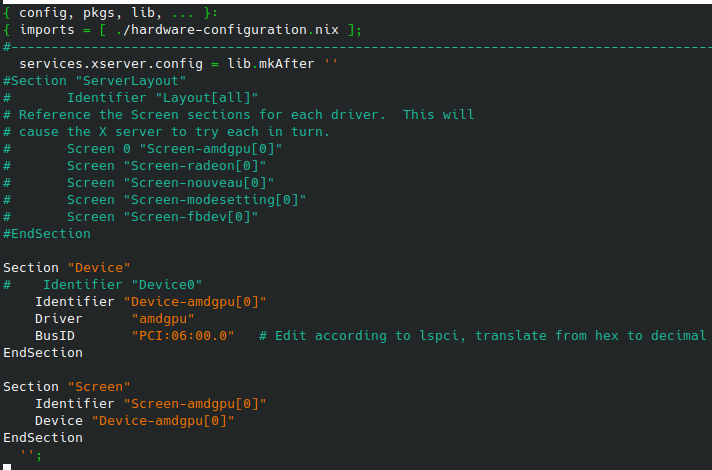

I think the problem may lie in the X configuration. I’ve been experimenting with the `services.xserver.config` option, adding new sections and whatnot, but at this point I’m stumped. If anyone can help me get this display working, it’d be extremely appreciated.

This is how far I’ve gotten:

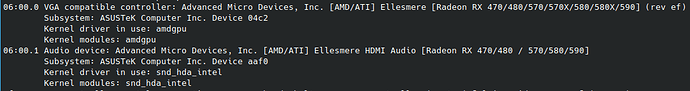

With `services.hardware.bolt.enable` set, the GPU is authorized, and it shows up fine under `lspci -k`:

(this is after booting with the Thunderbolt 3 cable already plugged in)

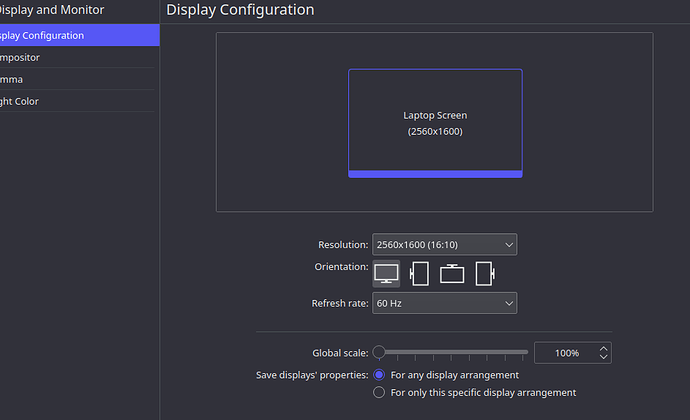

However, no displays besides the internal laptop display:

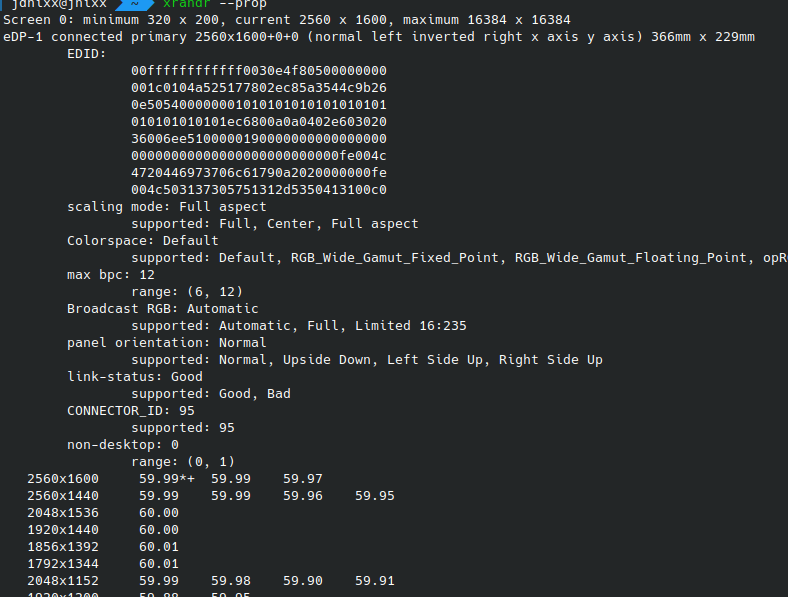

Let’s check out `xrandr --prop`. There’s the internal display on eDP-1:

(other disconnected outputs include DP-1, HDMI-1, DP-2 and HDMI-2)

And `xrandr --listproviders` shows two available providers. The first is the default internal graphics, but the second doesn’t appear to be using the correct amdgpu driver; it’s still using modesetting:

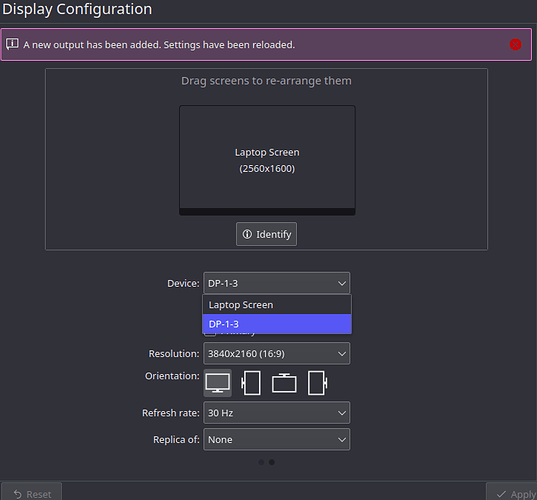
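
For reference, a minimal NixOS sketch of the settings involved might look like this. It assumes the standard `services.hardware.bolt.enable` and `services.xserver.videoDrivers` options. Listing `amdgpu` asks NixOS to install the xf86-video-amdgpu X driver, while `modesetting` stays available for the Intel iGPU. Note that the modesetting X driver can also drive an amdgpu-managed card through KMS, so seeing modesetting for the second provider may not be the fault on its own.

```nix
{ ... }:
{
  # Thunderbolt device manager (boltd), used to authorize the Razer Core X.
  services.hardware.bolt.enable = true;

  # Assumption: install the AMD X driver while keeping modesetting for the Intel iGPU.
  services.xserver.videoDrivers = [ "amdgpu" "modesetting" ];
}
```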

Now, if I ignore that problem for the moment and make the second provider use the first as its output source with

```
xrandr --setprovideroutputsource 0x841 0x47
```

…it DOES actually make the connected TV finally show up in System Settings:

(under the new output DP-1-3, for some odd reason…)

However, when I check the “Enable” box and click Apply, nothing happens. If I close and reopen Display Settings, the Enable box is frustratingly unchecked again. And if I log out or reboot, everything is reset back to the beginning, of course.
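
Because the `--setprovideroutputsource` step is lost on every logout, one way to reapply it automatically is the display manager’s setup hook. This sketch assumes a display manager that honors `services.xserver.displayManager.setupCommands` (LightDM, GDM or SDDM), and that provider index 1 is the eGPU and index 0 the Intel iGPU, matching the `--listproviders` order above. xrandr also accepts provider indices, which avoids depending on XIDs like 0x841 that can change. The `--output DP-1-3 --auto` line is a hypothetical command-line stand-in for ticking “Enable”, reusing the output name seen above.

```nix
{ pkgs, ... }:
{
  # Assumption: the display manager runs these shell commands right after X starts.
  services.xserver.displayManager.setupCommands = ''
    # Provider indices instead of XIDs; assumes 1 = eGPU (sink), 0 = Intel iGPU (source).
    ${pkgs.xorg.xrandr}/bin/xrandr --setprovideroutputsource 1 0
    # Hypothetical: enable the TV output at its preferred mode, right of the laptop panel.
    ${pkgs.xorg.xrandr}/bin/xrandr --output DP-1-3 --auto --right-of eDP-1
  '';
}
```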

I had some success manually adding the GPU’s PCI bus address to the Device and Screen sections of the Xorg config, as well as changing the Screen entry to Screen 0 under the ServerLayout section (per the ArchWiki’s instructions). After a reboot, that miraculously made the second provider show the correct name, “Radeon RX 570 Series @ pci:0000:06:00.0”, with id 0xee instead of 0x841. But without the ServerLayout changes, which I currently have commented out as you see below, both providers are back to using modesetting.

Furthermore, even while the correct provider was showing, enabling the display in System Settings didn’t work; it did the same thing as before, which is absolutely nothing. And I think I still had to run `xrandr --setprovideroutputsource` to get the display to show up in settings then as well.
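
A minimal sketch of a Device section pinned to the eGPU, written through `services.xserver.config`, is shown below. The identifier is hypothetical, and it assumes the xf86-video-amdgpu driver is installed. The main detail it illustrates is the BusID format: `lspci` prints the bus address in hexadecimal (`06:00.0`), while Xorg expects `PCI:bus:device:function` in decimal, so `06:00.0` becomes `PCI:6:0:0` (a bus of `0a` would become `PCI:10:0:0`).

```nix
{ ... }:
{
  # Merged into the generated xorg.conf (services.xserver.config is a lines option).
  services.xserver.config = ''
    Section "Device"
      # Hypothetical identifier; a Screen section must reference it for it to be used.
      Identifier "eGPU"
      Driver     "amdgpu"
      # lspci 06:00.0 (hex) -> PCI:6:0:0 (decimal bus:device:function)
      BusID      "PCI:6:0:0"
    EndSection
  '';
}
```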

Meanwhile, the Ubuntu 20.10 live ISO displays on the TV through the eGPU straight out of the box. I’m completely stumped; can anyone give me an idea of where I might be going wrong here?