I’ve been battling with CUDA on NixOS for a few months now and almost have a working setup.

Courtesy of this flake:

    {
      description = "Python 3.11 development environment";

      outputs = { self, nixpkgs }:
        let
          system = "x86_64-linux";
          pkgs = import nixpkgs {
            inherit system;
            config.allowUnfree = true;
          };
        in {
          devShells.${system}.default = (pkgs.buildFHSEnv {
            name = "nvidia-fuck-you";
            targetPkgs = pkgs: (with pkgs; [
              linuxPackages.nvidia_x11
              libGLU libGL
              xorg.libXi xorg.libXmu freeglut
              xorg.libXext xorg.libX11 xorg.libXv xorg.libXrandr zlib
              ncurses5 stdenv.cc binutils
              ffmpeg
              # I daily drive zsh
              # you can remove this, the default is bash
              zsh
              # Micromamba does the real legwork
              micromamba
            ]);
            profile = ''
              export LD_LIBRARY_PATH="${pkgs.linuxPackages.nvidia_x11}/lib"
              export CUDA_PATH="${pkgs.cudatoolkit}"
              export EXTRA_LDFLAGS="-L/lib -L${pkgs.linuxPackages.nvidia_x11}/lib"
              export EXTRA_CCFLAGS="-I/usr/include"
              "/home/safri/.nix/cuda/env.sh"
              # Initialize micromamba shell
              eval "$(micromamba shell hook --shell zsh)"
              # Activate micromamba environment for PyTorch with CUDA
              micromamba activate pytorch-cuda
            '';
            # again, you can remove this if you like bash
            runScript = "zsh";
          }).env;
        };
    }

and a micromamba environment:

    micromamba env create \
        -n pytorch-cuda \
        anaconda::cudatoolkit \
        anaconda::cudnn \
        "anaconda::pytorch=*=*cuda*"

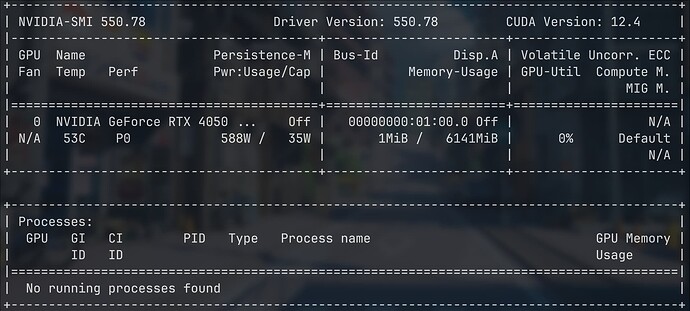

I now have my GPU working with openai-whisper. The only problem is that whisper fails with any of the larger models, even though they worked fine on Arch Linux on the same computer. It simply exits with:

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 254.00 MiB. GPU
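
For anyone trying to narrow this down, here is a minimal sketch of how the free VRAM could be checked right before loading a model. It assumes the installed PyTorch provides `torch.cuda.mem_get_info()`; the helper names `to_mib` and `report_gpu_memory` are made up for illustration.

```python
def to_mib(num_bytes: int) -> float:
    """Convert a byte count to MiB, the unit used in the OOM message."""
    return num_bytes / (1024 ** 2)


def report_gpu_memory() -> str:
    """Describe free/total VRAM on the current CUDA device, if torch can see one."""
    try:
        import torch
    except ImportError:
        return "torch is not installed in this environment"
    if not torch.cuda.is_available():
        return "torch cannot see a CUDA device"
    # mem_get_info() returns (free_bytes, total_bytes) for the current device
    free_bytes, total_bytes = torch.cuda.mem_get_info()
    return f"free: {to_mib(free_bytes):.0f} MiB / total: {to_mib(total_bytes):.0f} MiB"


if __name__ == "__main__":
    print(report_gpu_memory())
```

If the free figure is far below the total before whisper even starts, something else is already holding VRAM, which would be worth ruling out first.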

The GPU also doesn’t seem to be working when whisper isn’t running, even though I have set:

services.xserver.videoDrivers = [ "nvidia" ];
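
One way to see what the card is doing while whisper is not running is to query `nvidia-smi`. The sketch below assumes `nvidia-smi` is on the `PATH` and that every queried field comes back as a plain number; `parse_gpu_line` and `query_gpus` are hypothetical helper names.

```python
import subprocess

# Fields requested from nvidia-smi; "nounits" drops the "%" and "MiB" suffixes.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_line(line: str) -> dict:
    """Parse one CSV line of nvidia-smi output into a dict."""
    # Split from the right so a comma inside the GPU name would not break parsing.
    name, util, used, total = [field.strip() for field in line.rsplit(",", 3)]
    return {
        "name": name,
        "utilization_pct": int(util),
        "memory_used_mib": int(used),
        "memory_total_mib": int(total),
    }


def query_gpus() -> list:
    """Run nvidia-smi and return one dict per GPU it reports."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return [parse_gpu_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    try:
        for gpu in query_gpus():
            print(gpu)
    except FileNotFoundError:
        print("nvidia-smi was not found on PATH")
```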

My NVIDIA config is:

    services.xserver.videoDrivers = [ "nvidia" ];

    hardware = {
      opengl.enable = true;
      nvidia = {
        open = false;
        powerManagement.enable = false;
        nvidiaSettings = true;
        modesetting.enable = false;
        package = config.boot.kernelPackages.nvidiaPackages.stable;
        prime = {
          offload.enable = false;
          nvidiaBusId = "PCI:1:0:0";
          intelBusId = "PCI:0:2:0";
        };
      };
    };

Just in case it’s relevant, this is in my hardware-configuration.nix:

    boot.initrd.availableKernelModules = [
      "xhci_pci"
      "thunderbolt"
      "nvme"
      "usbhid"
      "usb_storage"
      "sd_mod"
    ];
    boot.initrd.kernelModules = [ ];
    boot.kernelModules = [ "kvm-intel" ];
    boot.extraModulePackages = [ ];

While in the environment, it’s clear that CUDA is working:

    >>> import torch
    >>> print(torch.cuda.is_available())
    True
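
`is_available()` only confirms that PyTorch can reach the driver. A slightly fuller check, sketched below, also reports which CUDA version PyTorch was built against and how much memory the first device has. The function name `collect_cuda_info` is made up for illustration, and the script assumes the GPU of interest is device 0.

```python
def collect_cuda_info() -> dict:
    """Gather what PyTorch reports about its CUDA build and the first GPU."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version PyTorch was built against (None for CPU-only builds)
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        # Assumption: the GPU of interest is device 0
        props = torch.cuda.get_device_properties(0)
        info["device_name"] = props.name
        info["total_memory_mib"] = props.total_memory // (1024 ** 2)
    return info


if __name__ == "__main__":
    for key, value in collect_cuda_info().items():
        print(f"{key}: {value}")
```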

It’s using CUDA version 11.8.

My main system is running a Wayland compositor (Hyprland) on NixOS 24.05.

Can somebody please tell me why whisper is failing with any model above medium, despite my GPU being capable of running the larger models?

Any help would be appreciated.