I’ve been playing around with Nix for a short while and - despite the complexity - I really like the overall approach. I can see how it’s a great solution in a variety of contexts. What I’m trying to work out is whether it can be a good solution in an IoT context. I’ll say a few words about my requirements/priorities, and observations so far. Would be great to get input from the community on whether I’m barking up the right tree.

Context and requirements:

- We have a few hundred devices running in the field, used for data acquisition. The hardware is based on Raspberry Pi or similar, and they all run some flavor/version of Debian as a base OS.

- We also have our own applications running on the devices, which handle the above-mentioned data acquisition, and other relevant tasks.

- We occasionally need to push new application updates, though we don’t generally upgrade the underlying OS after deployment.

- The main requirements are that:

  - Unattended updates should be performed atomically. More generally: things should never end up in a broken state.

  - Updating the application should (within reason) be independent of the underlying OS. This generally means bundling dependencies.

The above requirements basically rule out using apt/dpkg. Currently we deploy the applications as Snaps, but after ~5 years of this approach I’m not totally satisfied with the result. I’m wondering if there’s a better way for future deployments, so I have been looking into the viability of using Nix for this use case.

Before I continue, I should mention another couple of priorities:

- Many of the devices are in remote locations, with patchy network access. So the download size of updates should be kept to a minimum. [despite many promises, this is actually one weak point of Snaps; both due to design decisions as well as bugs that persist]

- While storage is not extremely tight, it is also not abundant. Most devices have 4-8 GB flash memory.

- Maybe it goes without saying, but building anything from source on the Raspberry Pis is a no-no; all applications should be available as pre-built binaries.

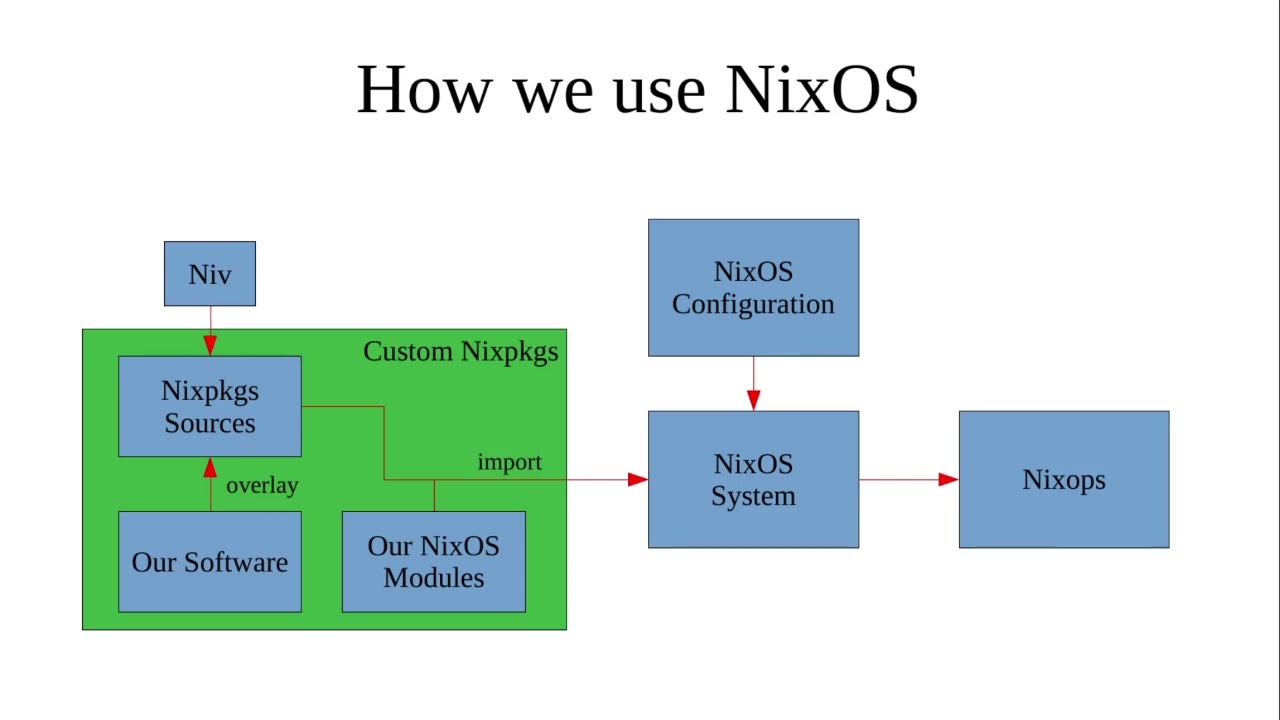

I was attracted to Nix since it caters to the main requirements: atomic updates, and pinned dependencies. To be clear, I’m not thinking of going full NixOS (yet), but using Nix for application software deployment and updates.

After a bit of playing around, I have some concerns around download and storage size. I’d like to get a sense of whether these concerns are well-founded. I.e. whether Nix can be made to work well in a constrained environment - or it’s just not the right tool for this kind of job.

Specifically, I got things set up using @tonyfinn’s excellent Nix from First Principles: Flake Edition guide. To simplify/minimize things, I also removed all flake registries, except for a pinned github:NixOS/nixpkgs/22.11. Then installing just a couple of basic packages (nix-tree, mosquitto, python310) causes /nix/store to grow to ~1GB in size, which seemed somewhat alarming. Some observations:

- The two largest directories, namely `/nix/store/nkhjmzkf9hky9h34yrfy0cgyd9pbh03v-source` (293MB) and `/nix/store/wwk2ad9jvg8r1a8lyg0x8kmmg53n97sq-nixpkgs` (146MB), appear to both be downloads of `github:NixOS/nixpkgs` - the former pinned at the `22.11` tag (but somehow double the size), and the latter a more recent commit.

- Installing `mosquitto` (normally a tiny piece of software) pulls in 200MB+ of dependencies. A lot of that is `systemd` (fair enough - I wouldn’t expect Nix to use what’s already there), but another large part is stuff like Perl, which is clearly unrelated (it’s pulled in through a dubious dependency of `libwebsockets` on `openssl-dev`).

- I have two versions of `glibc`: 2.34-210 and 2.35-163. The former is used by `nix` (v 2.12) and the latter by the other packages in the 22.11 release (similar for other libraries like `sqlite`).
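To make the duplicate-version observation easier to check, here is a minimal Python sketch that groups a list of store paths by package name and reports any package present in more than one version. It assumes the list comes from something like `nix-store --query --requisites` run on a profile, and it splits name from version with a simple heuristic (the first dash followed by a digit), which will misjudge some package names. The function names are made up for illustration.

```python
import re
from collections import defaultdict

# A store path looks like /nix/store/<32-char hash>-<name>[-<version>].
STORE_NAME = re.compile(r"^/nix/store/[0-9a-z]{32}-(.+)$")


def split_name_version(store_path):
    """Split a store path into (name, version) using a heuristic."""
    match = STORE_NAME.match(store_path)
    if match is None:
        raise ValueError(f"not a store path: {store_path!r}")
    # Heuristic (an assumption): the version starts at the first dash
    # that is immediately followed by a digit.
    parts = re.split(r"-(?=\d)", match.group(1), maxsplit=1)
    name = parts[0]
    version = parts[1] if len(parts) > 1 else ""
    return name, version


def duplicated_packages(store_paths):
    """Return {name: [versions]} for names that occur in several versions."""
    versions = defaultdict(set)
    for path in store_paths:
        name, version = split_name_version(path)
        versions[name].add(version)
    return {name: sorted(v) for name, v in versions.items() if len(v) > 1}
```

Feeding it one store path per line from the closure of a profile would list `glibc`, `sqlite` and any other library that is present twice.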

Some of the above points strike me as…suboptimal? Maybe I’m missing something basic, which would reduce the level of redundancy of what’s getting downloaded and stored? By the way, I’ve enabled/run store optimization, wiped the profile history, and run garbage collection.

Either way, minimizing the storage required for an initial install is not the top priority - we can ship devices with whatever firmware we want (as long as it fits in flash). It’s more important to minimize download size during subsequent upgrades. And this is something I’m less clear about…

- Presumably if we pin everything to a given NixOS/nixpkgs release (e.g. 22.11), and we build our applications as a set of flakes that link to the exact library/package versions in that release, we’re good to go. I.e., whenever our applications get updated, they are the only thing that gets downloaded, and no external dependencies?

- How does this work in the context of security updates? I guess if any nixpkgs flakes are truly pinned to a tag/commit, then there will be no updates? Conversely, allowing for updates will require a more general re-install of most things (since basic building blocks like `glibc` are likely to have changed)?
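One way to put numbers on these two questions is to compare the closure currently on a device with the closure of the planned update: only store paths that are not already present would need to be fetched. The sketch below assumes each closure is available as a mapping from store path to size in bytes (for example, collected from `nix path-info --recursive` with size output); the function name and data shape are hypothetical. The sizes are uncompressed, so a compressed binary cache would likely transfer less.

```python
def update_delta(old_closure, new_closure):
    """Return (sorted new store paths, total bytes) needed for an update.

    Both arguments map store path -> size in bytes. A path that already
    exists on the device is reused; anything else must be downloaded.
    """
    missing = {
        path: size
        for path, size in new_closure.items()
        if path not in old_closure
    }
    return sorted(missing), sum(missing.values())
```

With nixpkgs pinned, an application-only update should yield just the application's own new store path. If a low-level dependency such as `glibc` changes, every path built against it gets a new hash, and the same function would show most of the closure as missing.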

To be honest I’m not even 100% sure what exactly I should be evaluating here. I guess I’m just concerned that an unforeseen situation could introduce the need for a large-scale download/rebuild/reinstall.

But more generally, could Nix serve a resource-constrained IoT use case well? Or would this be a world of pain?